Introduction

Welcome to the official documentation for Kinesis API, a powerful all-in-one framework that transforms how you create, manage, and scale APIs.

What is Kinesis API?

Kinesis API is a comprehensive solution for API development that combines:

- A custom-built, high-performance database system (Kinesis DB)

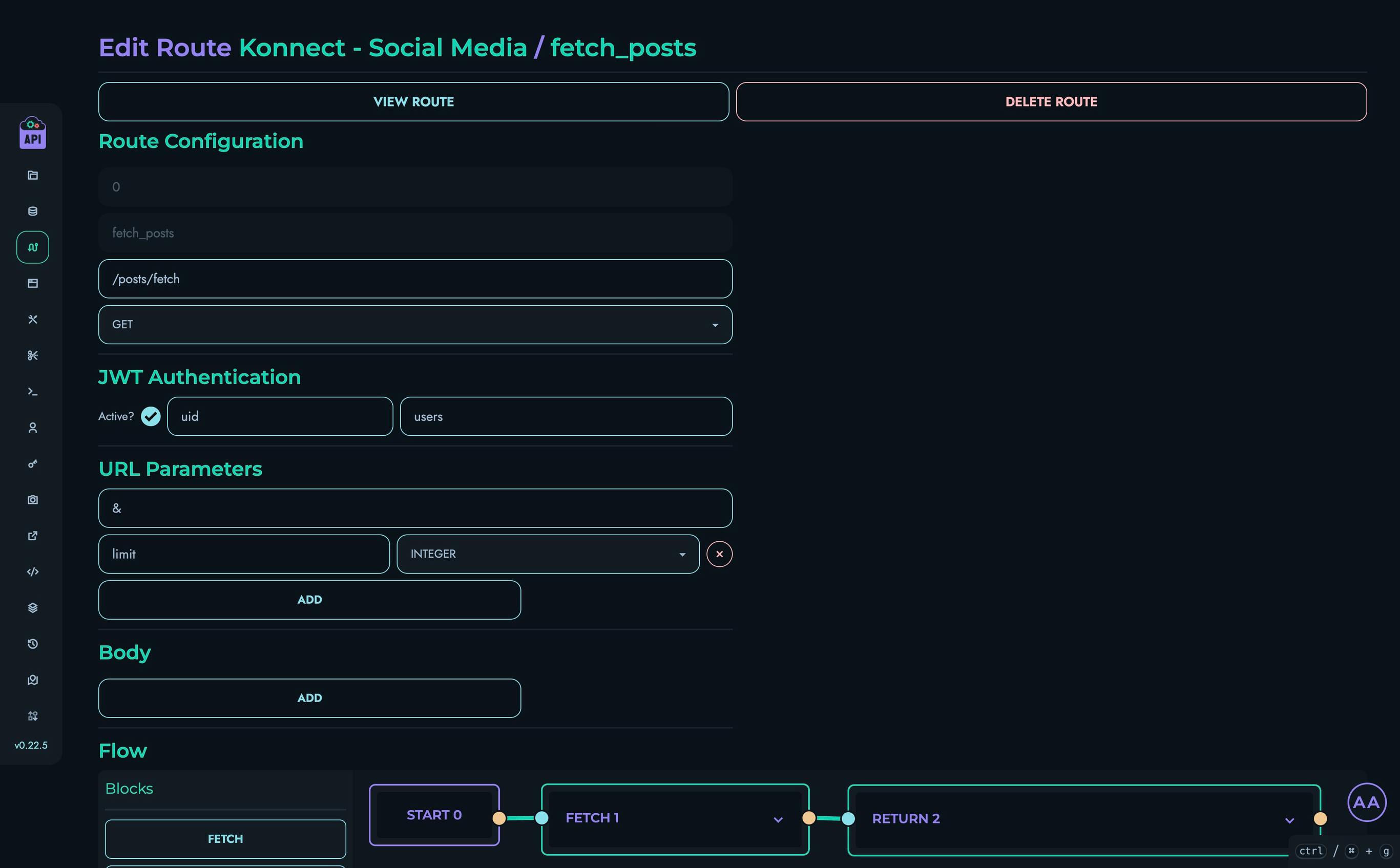

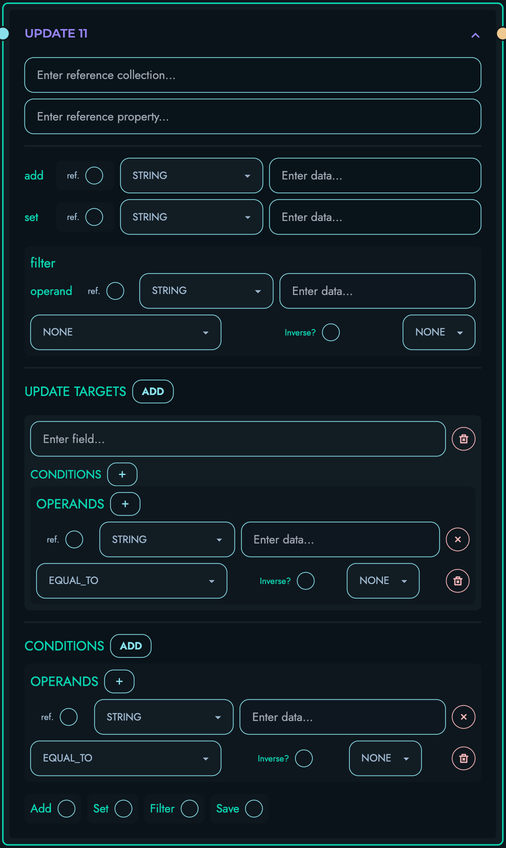

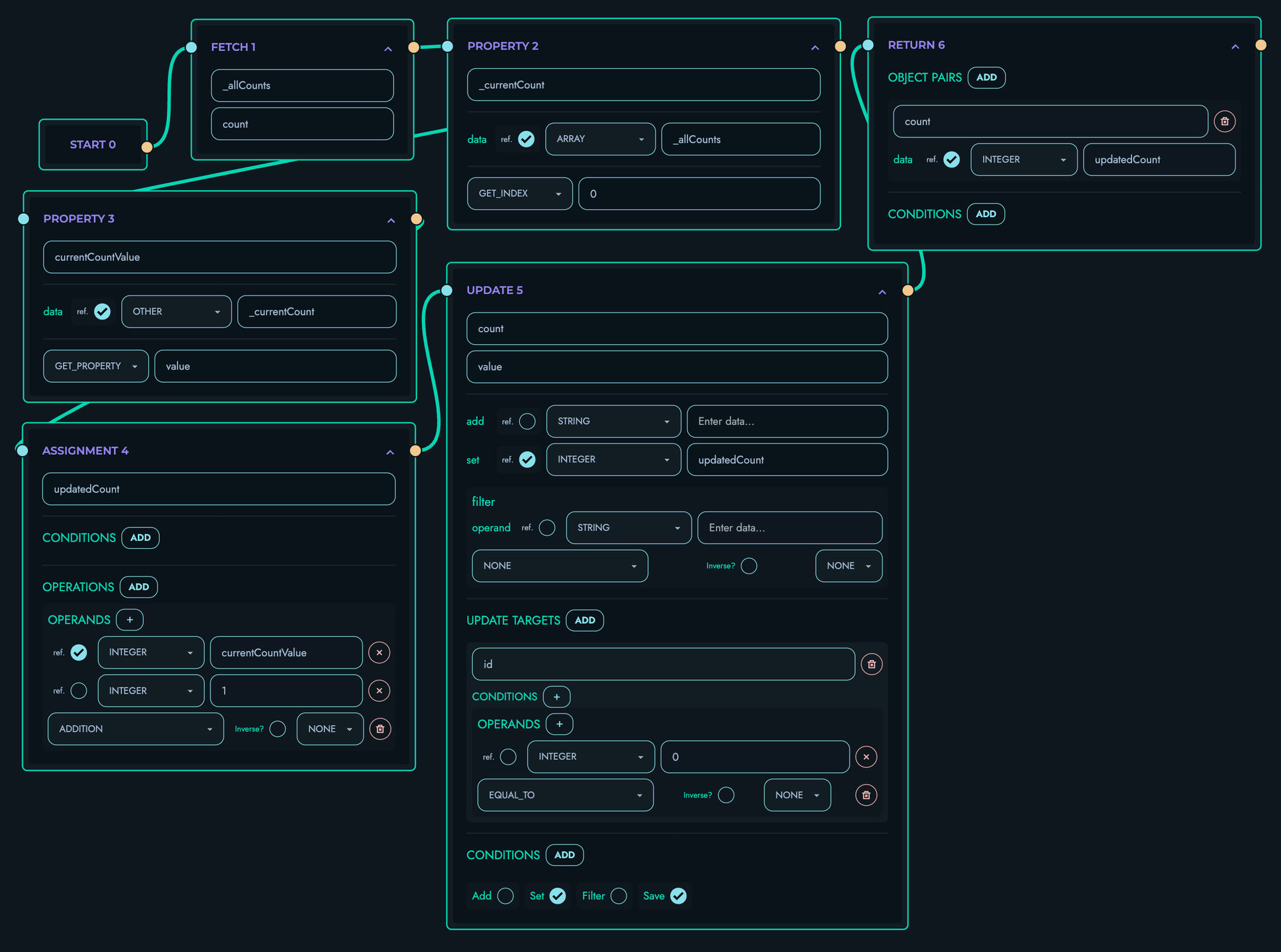

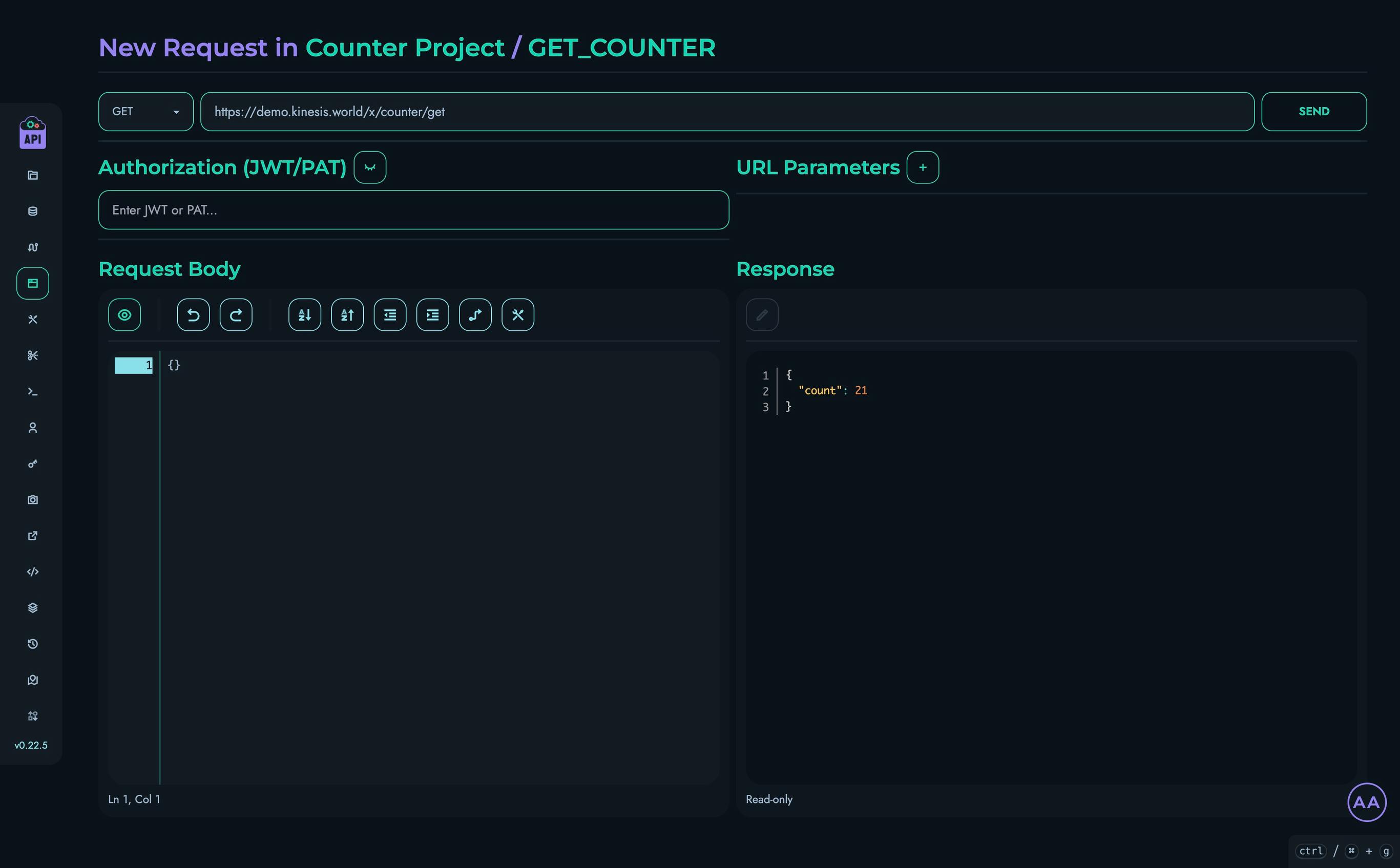

- A visual editor for creating API routes with the X Engine

- Integrated management tools that eliminate the need for multiple external services

Whether you’re prototyping a simple API or building complex, interconnected systems, Kinesis API provides the tools to accelerate development without sacrificing quality or control.

Key Features

- All-in-one Platform: API creation, database management, and execution in a single unified environment

- Visual Route Builder: Create complex API logic without writing traditional code using our block-based system

- Custom Database: Built-in ACID-compliant database system with multiple storage engines and strong schema management

- Performance-Focused: Developed in Rust for maximum efficiency and reliability

- Flexible Deployment: Deploy anywhere with our Docker images

- Comprehensive Management: User authentication, role-based access control, and extensive monitoring capabilities

Getting Started

New to Kinesis API? Start here:

- Installation Guide - Set up Kinesis API on your system

- Initialization - Configure your instance for first use

- API Tutorials - Build your first API with Kinesis API

Core Components

Dive deeper into the key technologies that power Kinesis API:

- Kinesis DB - Our custom-built database system

- X Engine - The visual API builder that makes complex logic accessible

- API Reference - Complete reference for all available endpoints

Usage Guides

Learn how to use Kinesis API effectively:

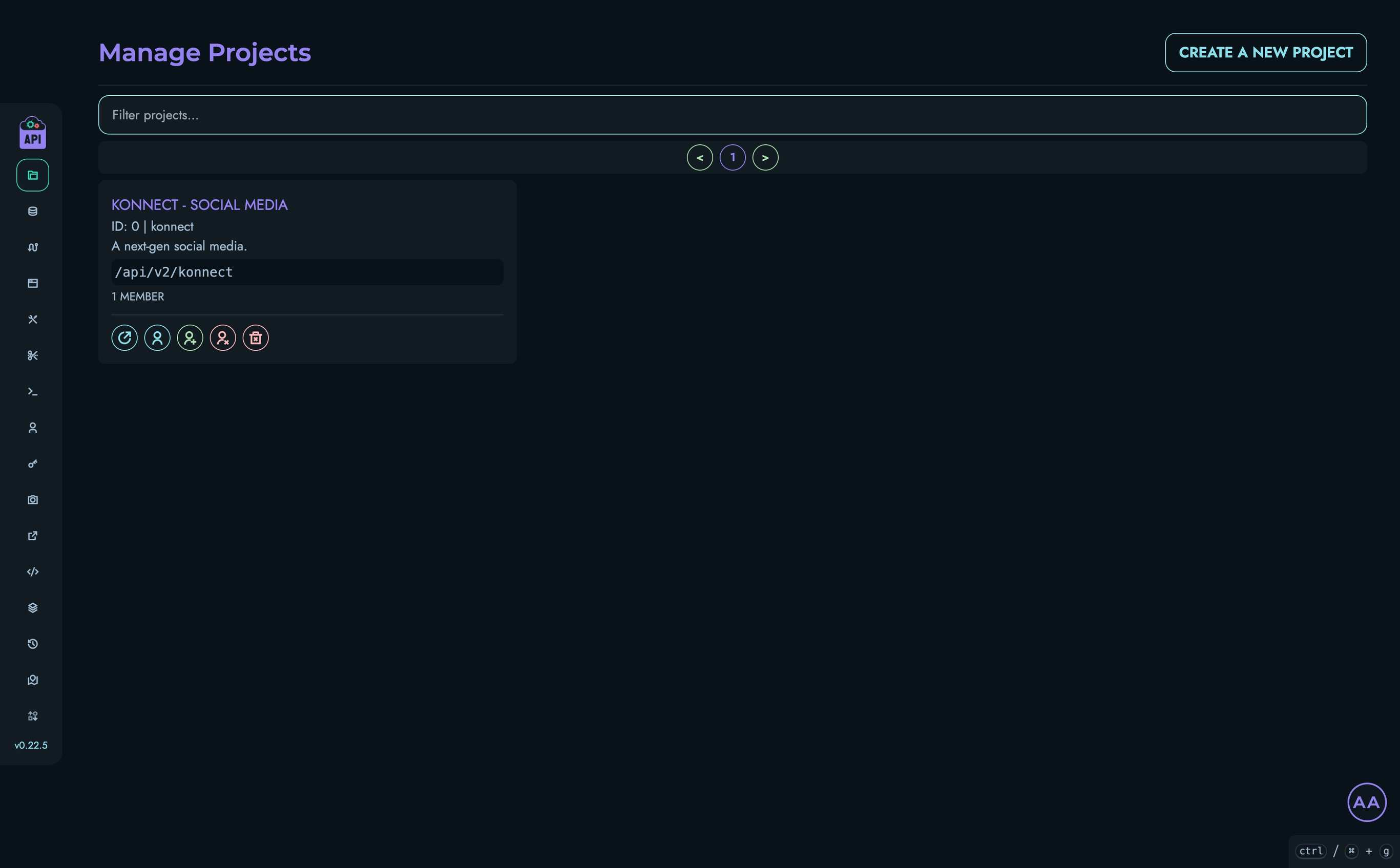

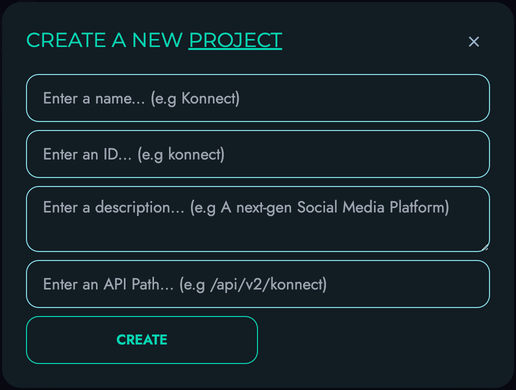

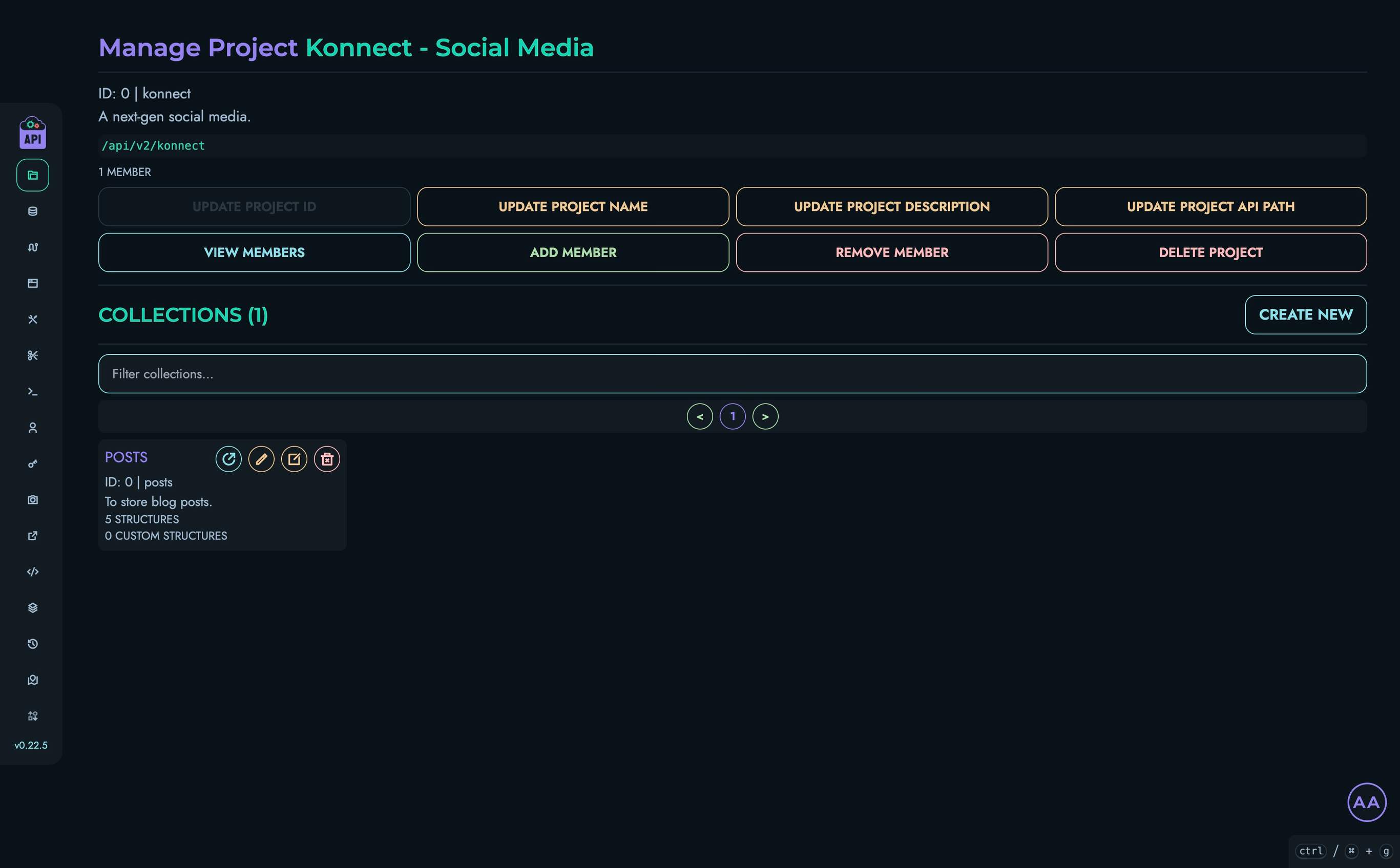

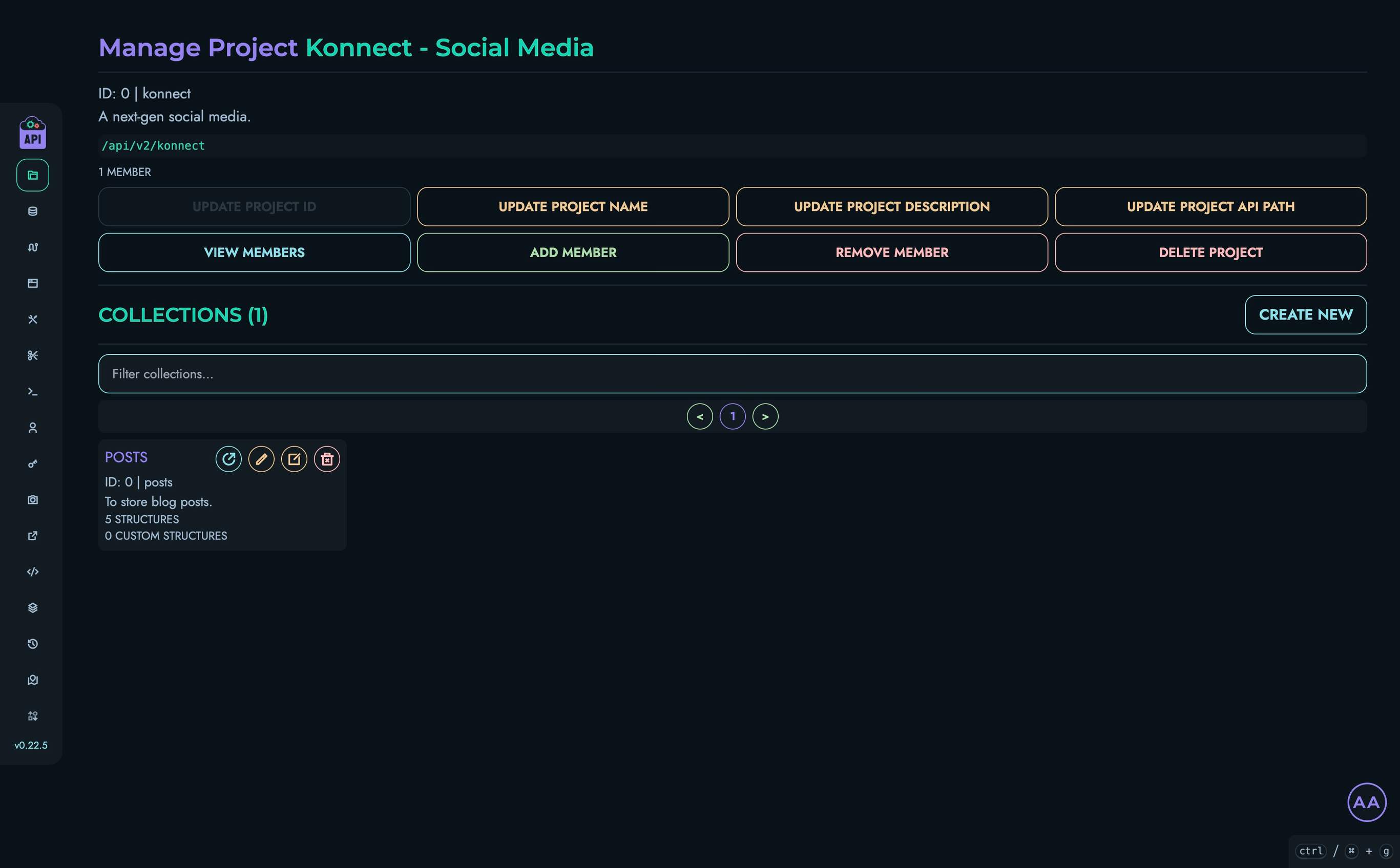

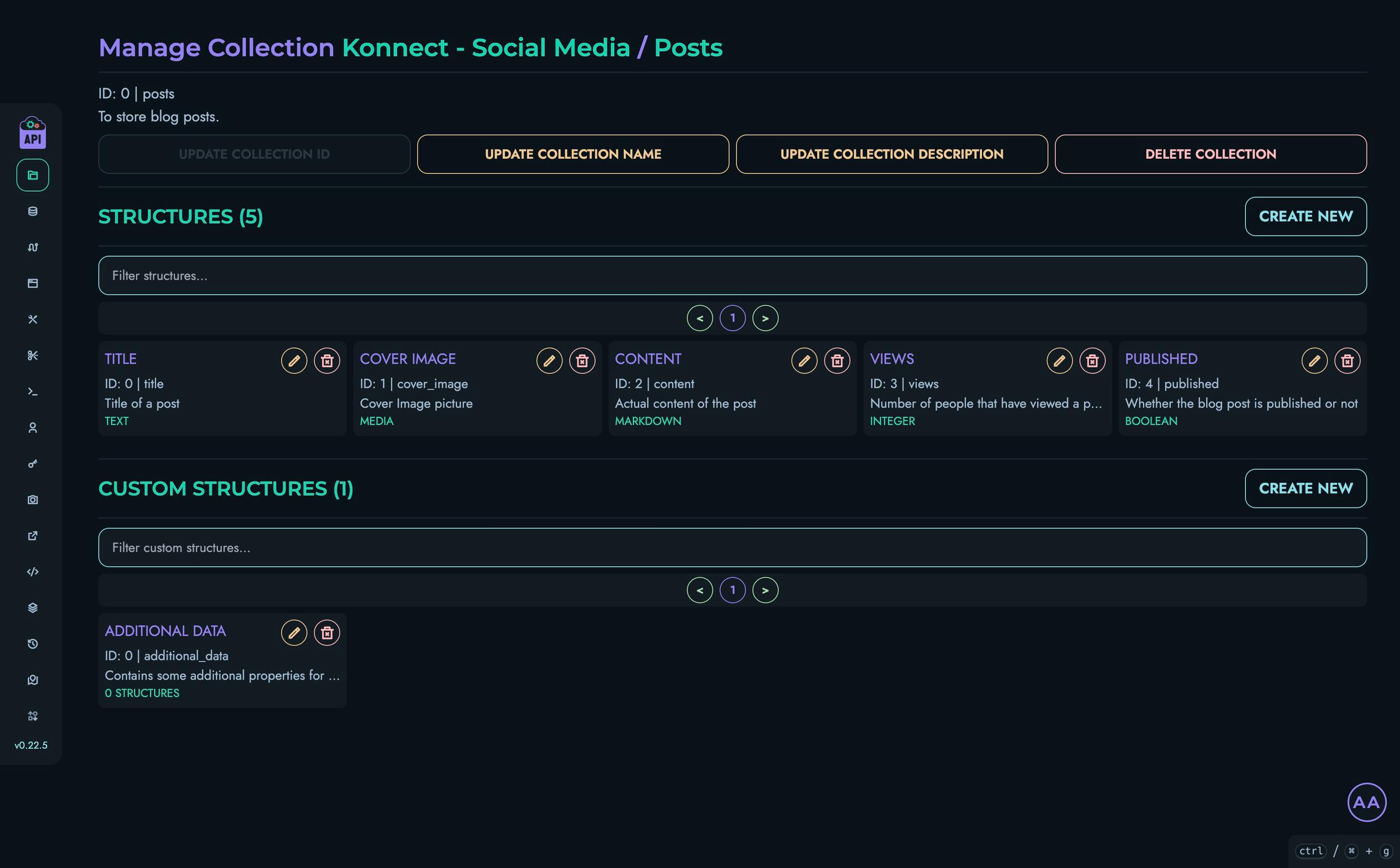

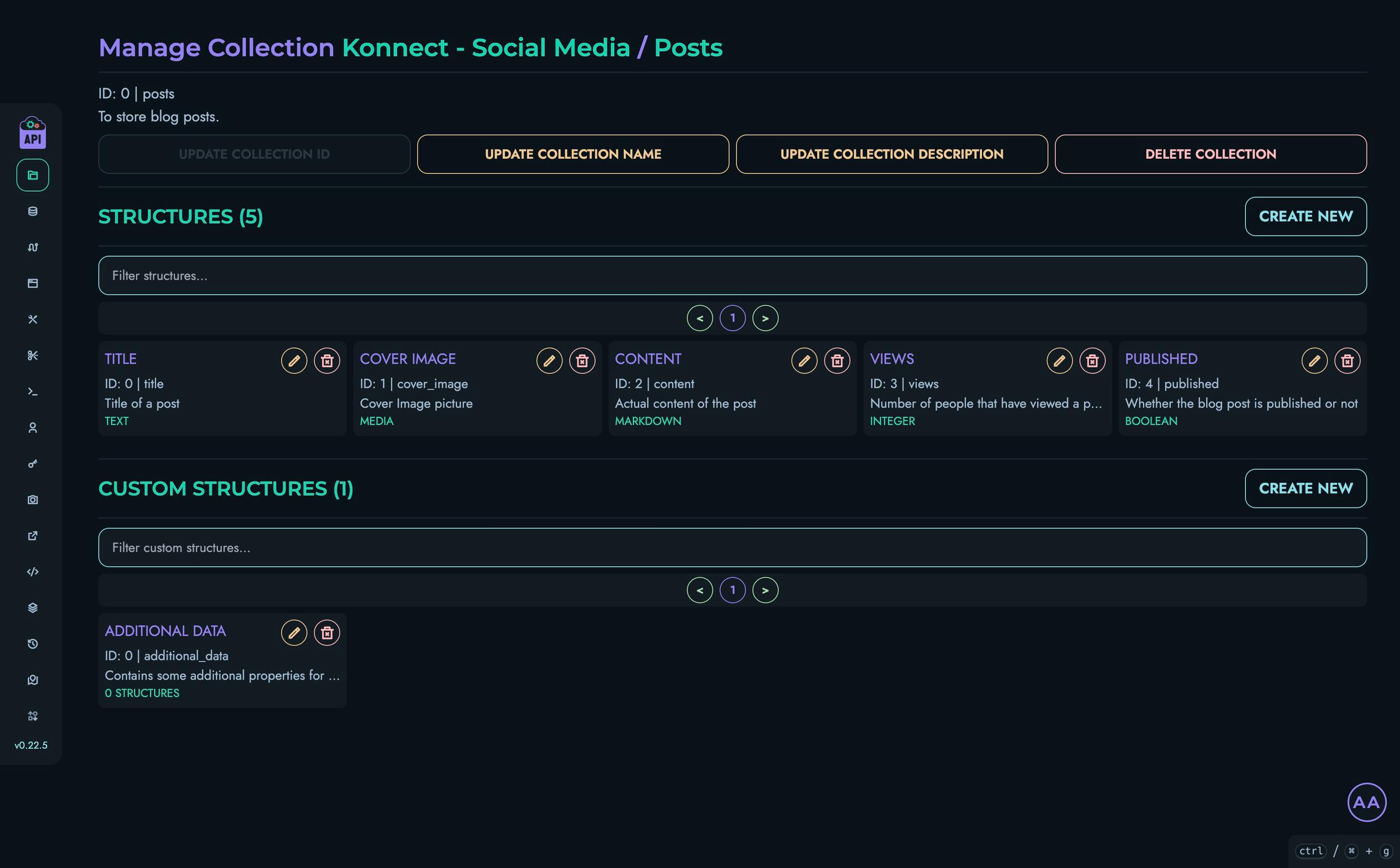

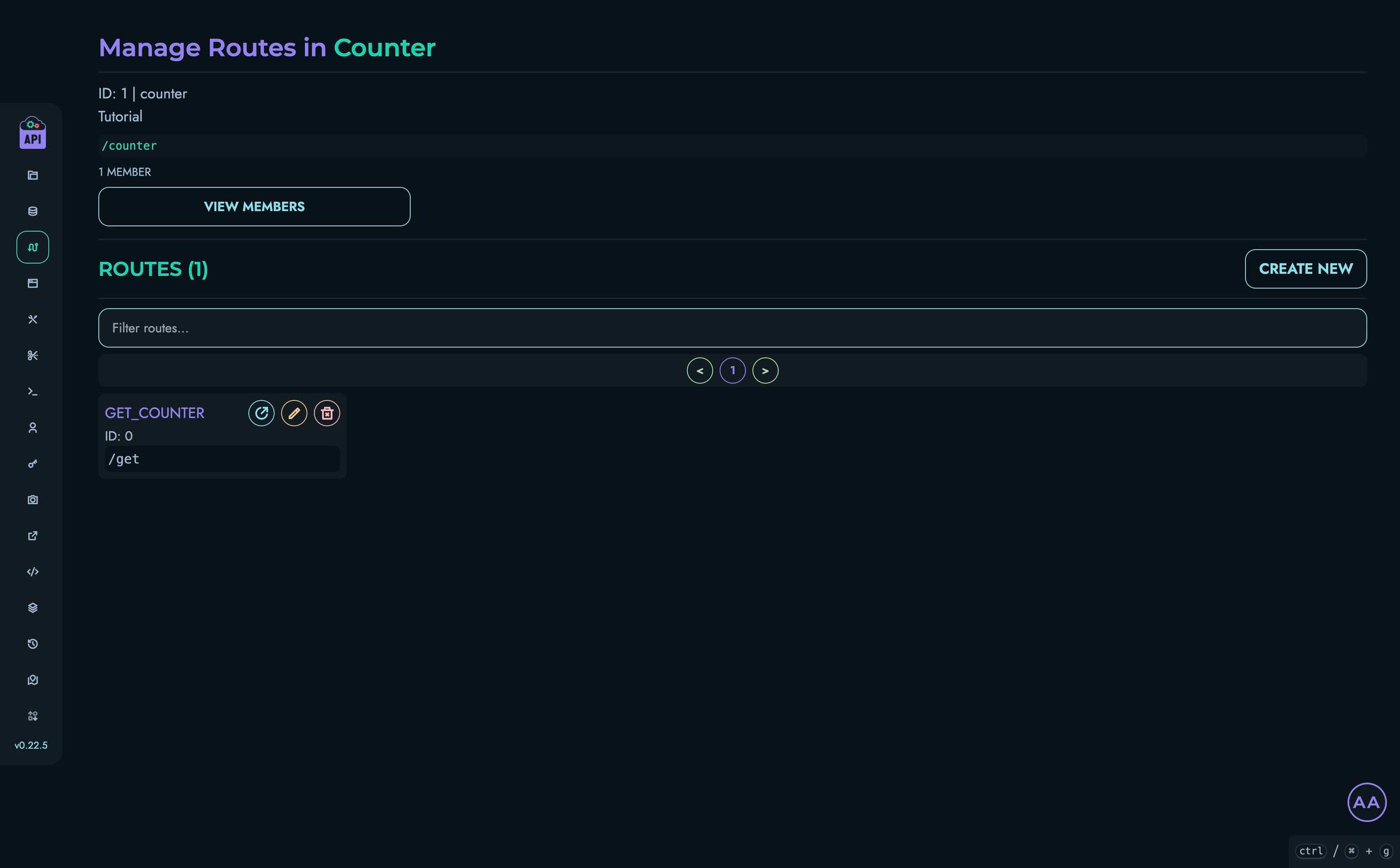

- Projects & Collections - Organize your APIs

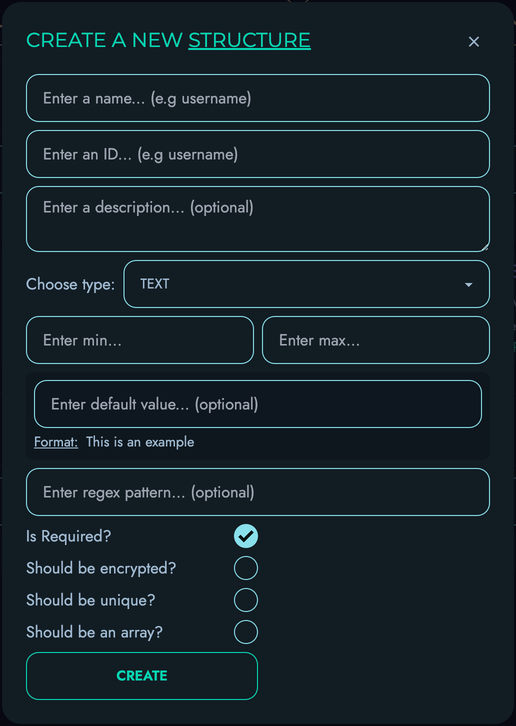

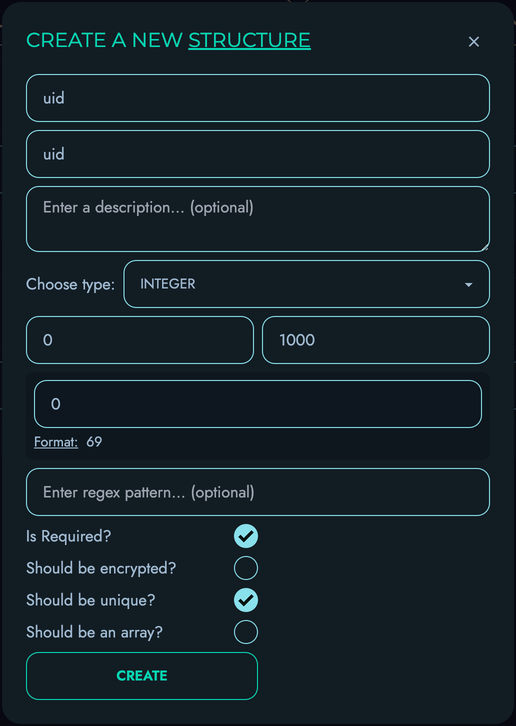

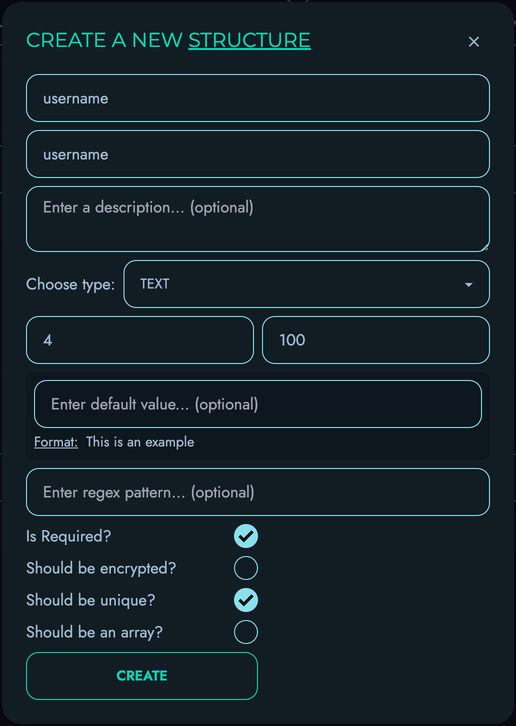

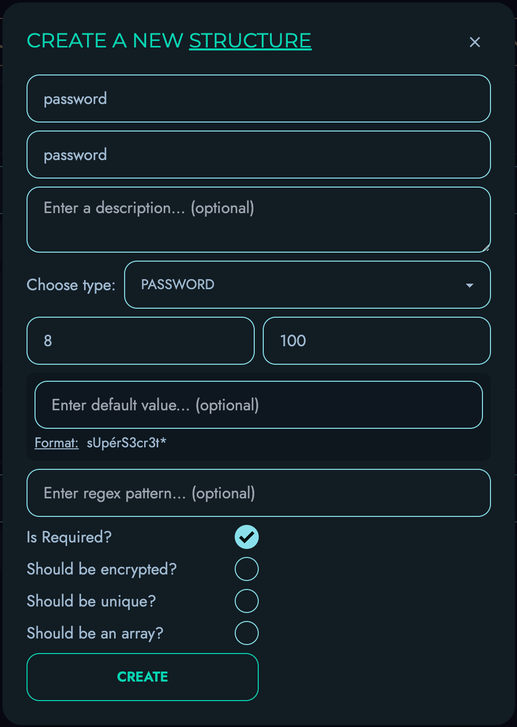

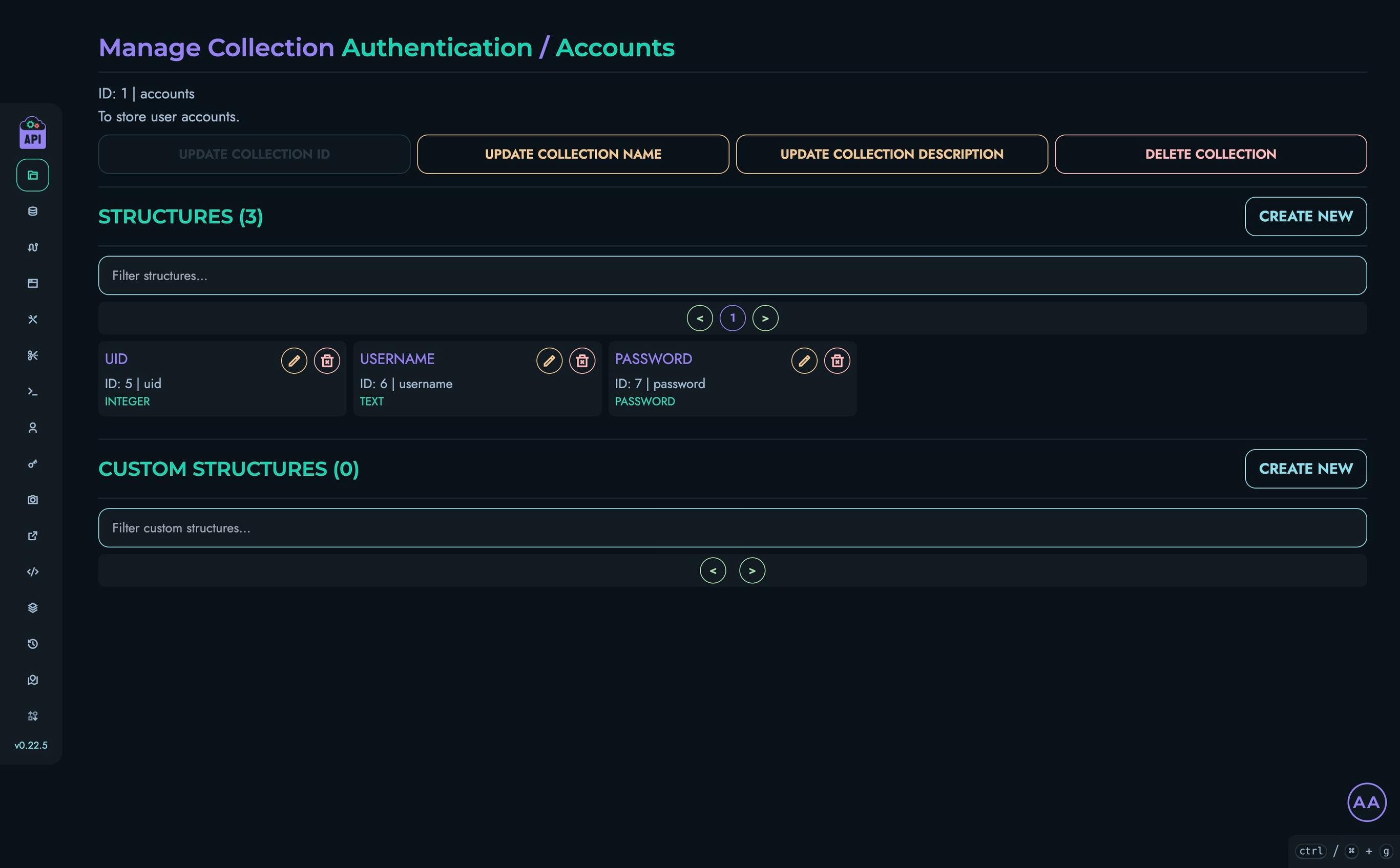

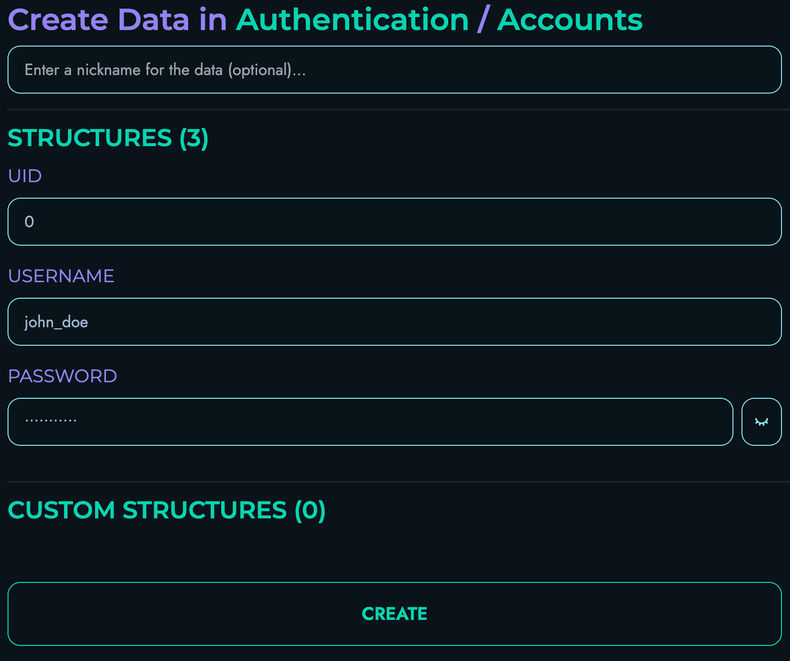

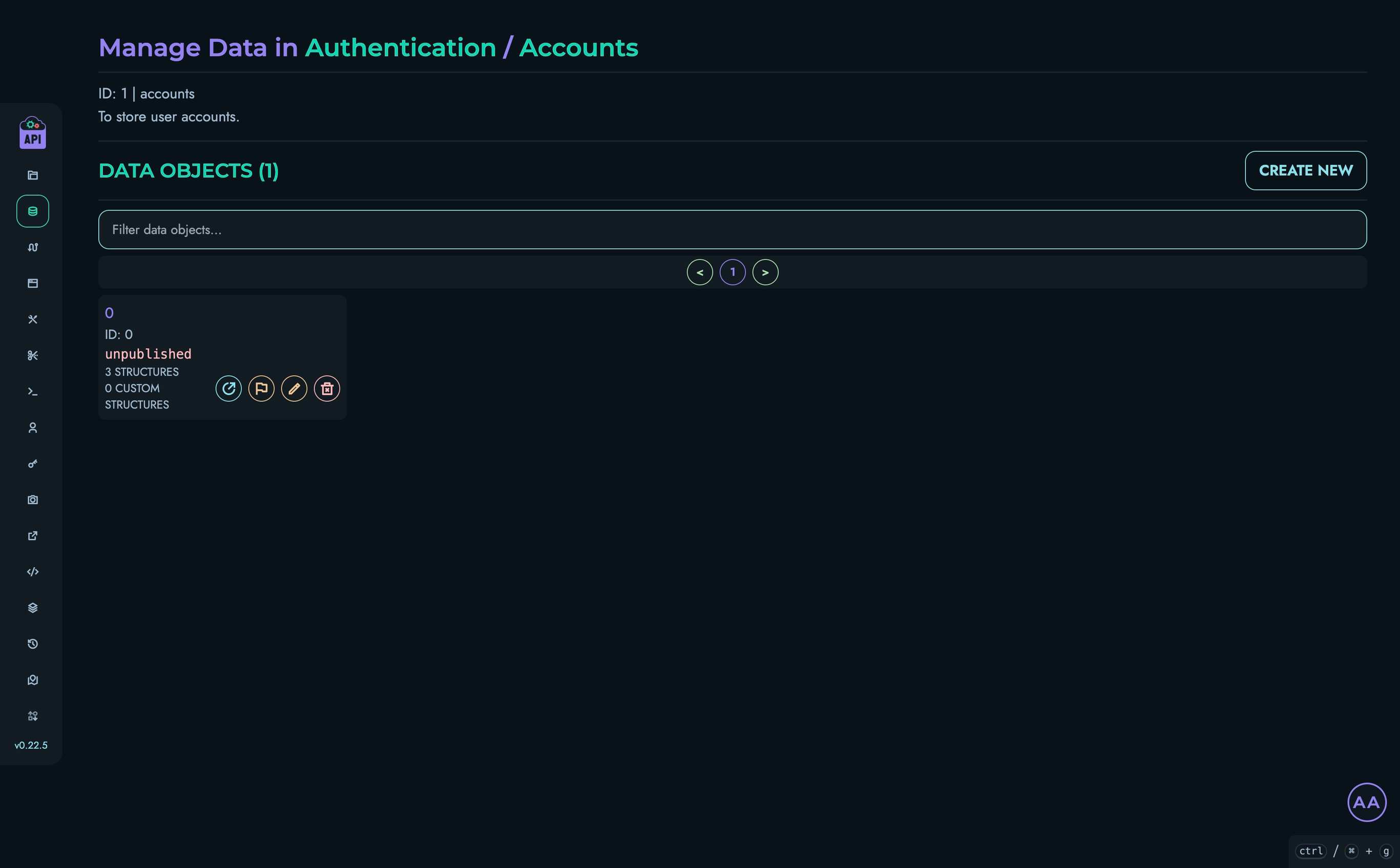

- Structures - Define your data models

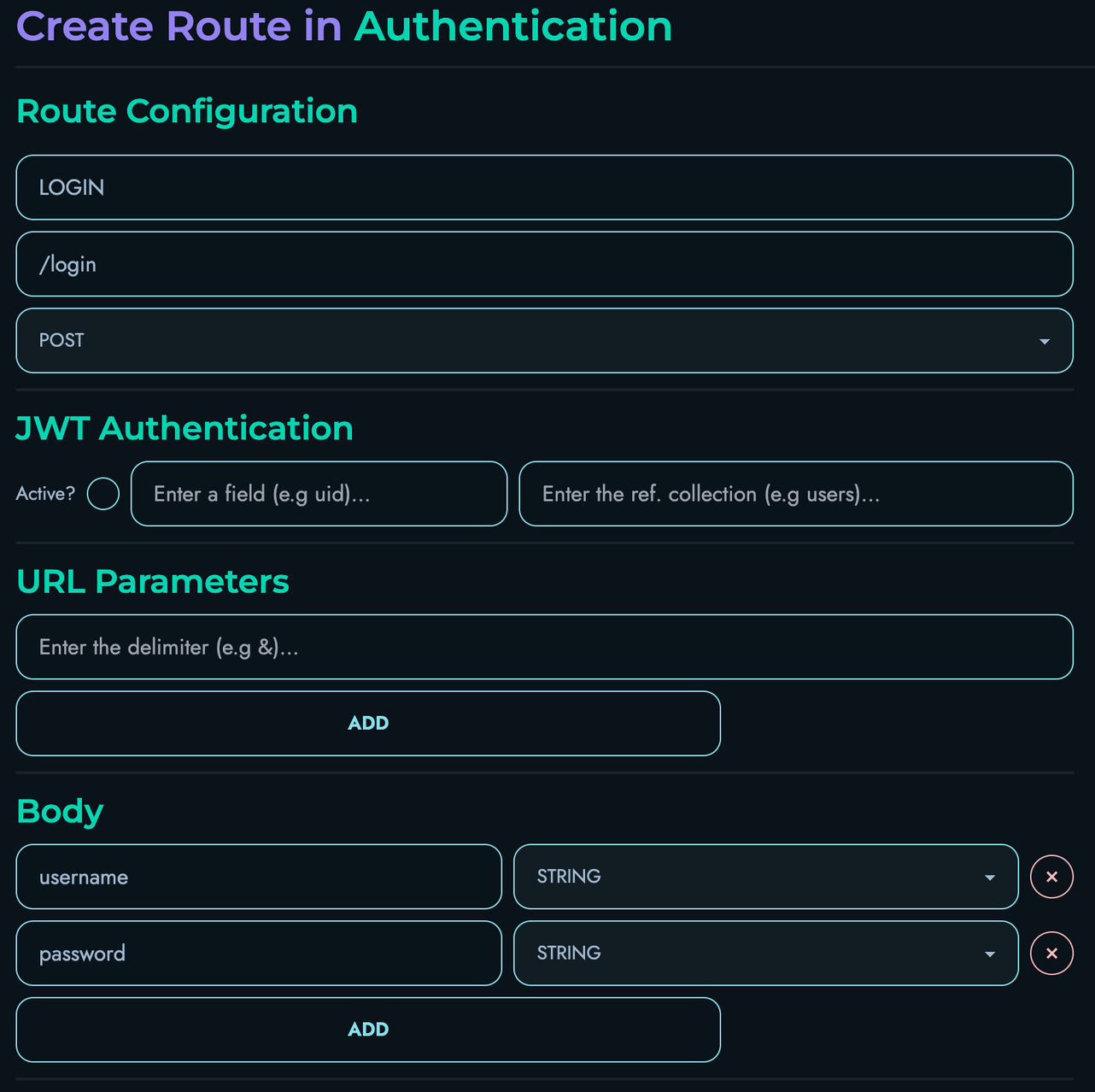

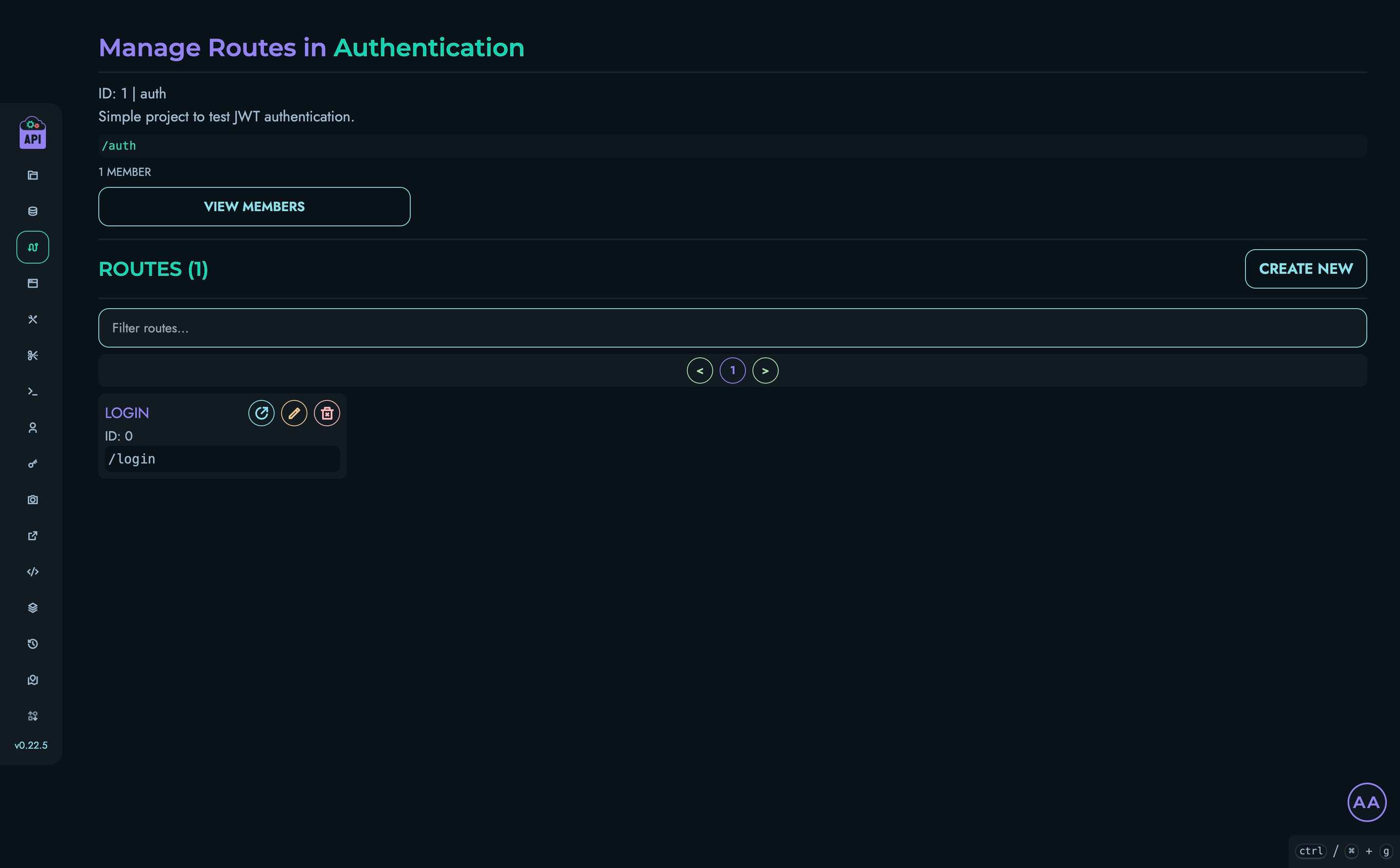

- Routes - Create and manage API endpoints

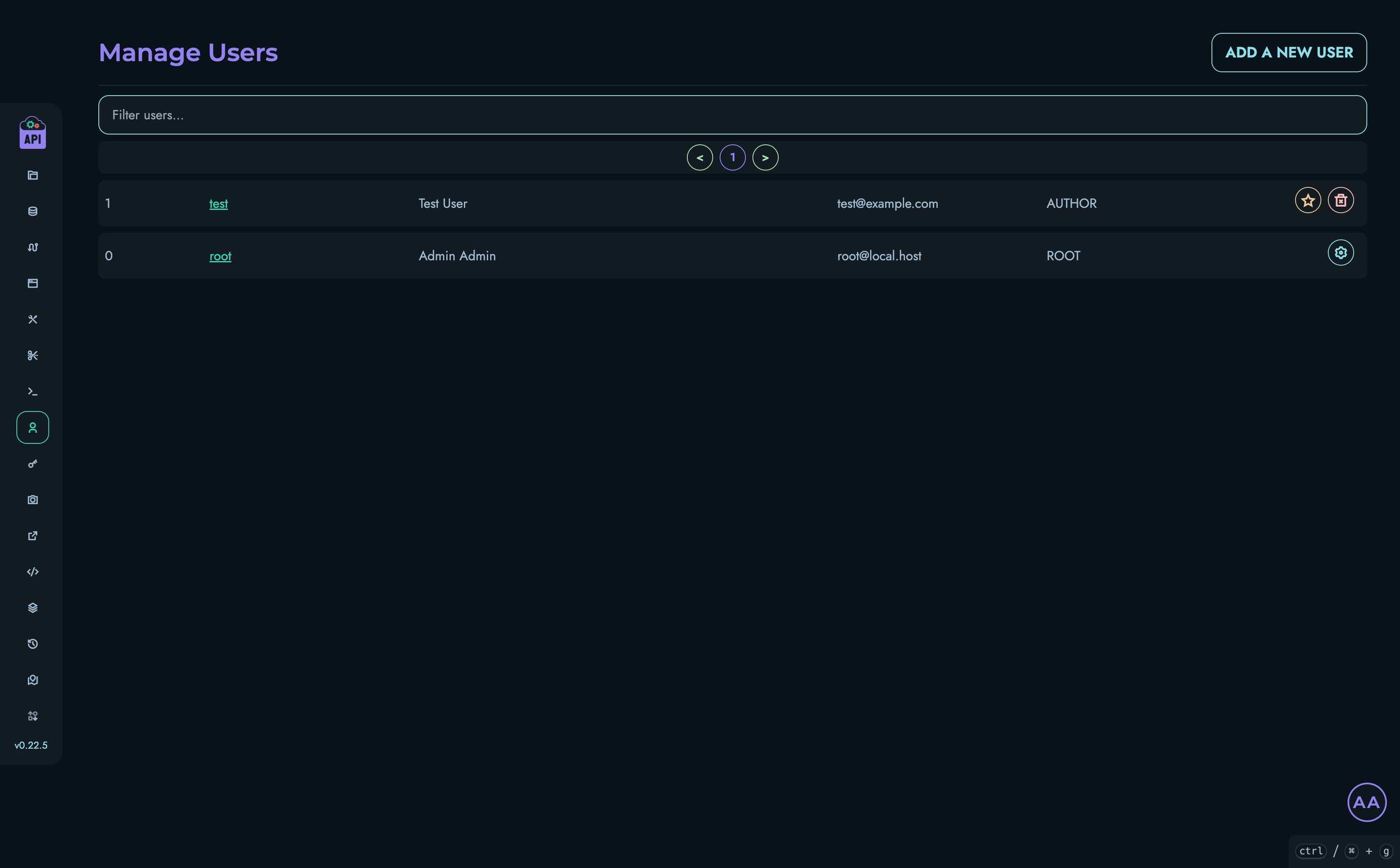

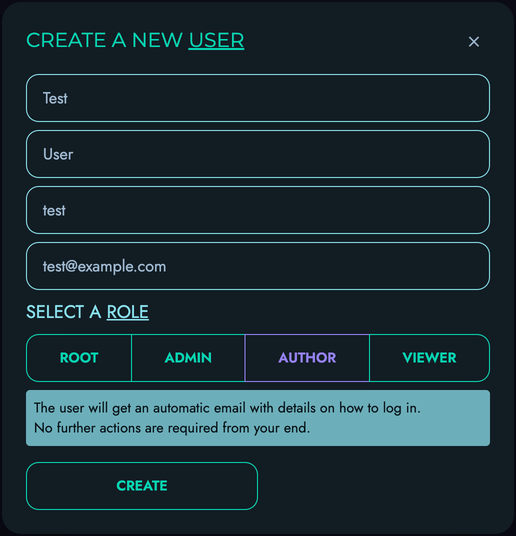

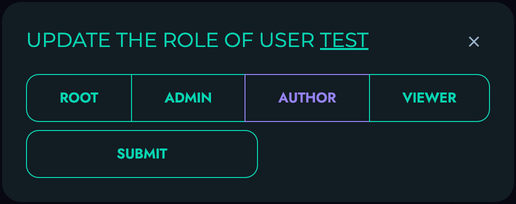

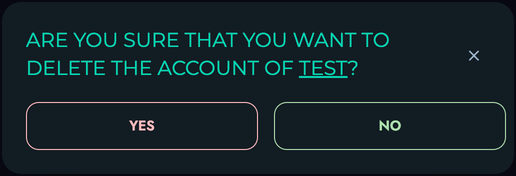

- User Management - Configure authentication and access control

Support & Community

If you need help or want to contribute:

- Check our FAQ for common questions

- Join our community discussions

- Submit bug reports or feature requests

- Follow our tutorials for practical examples

Further Steps

Once you’re familiar with the basics:

- Learn about upgrading to new versions

- Set up regular backups of your data

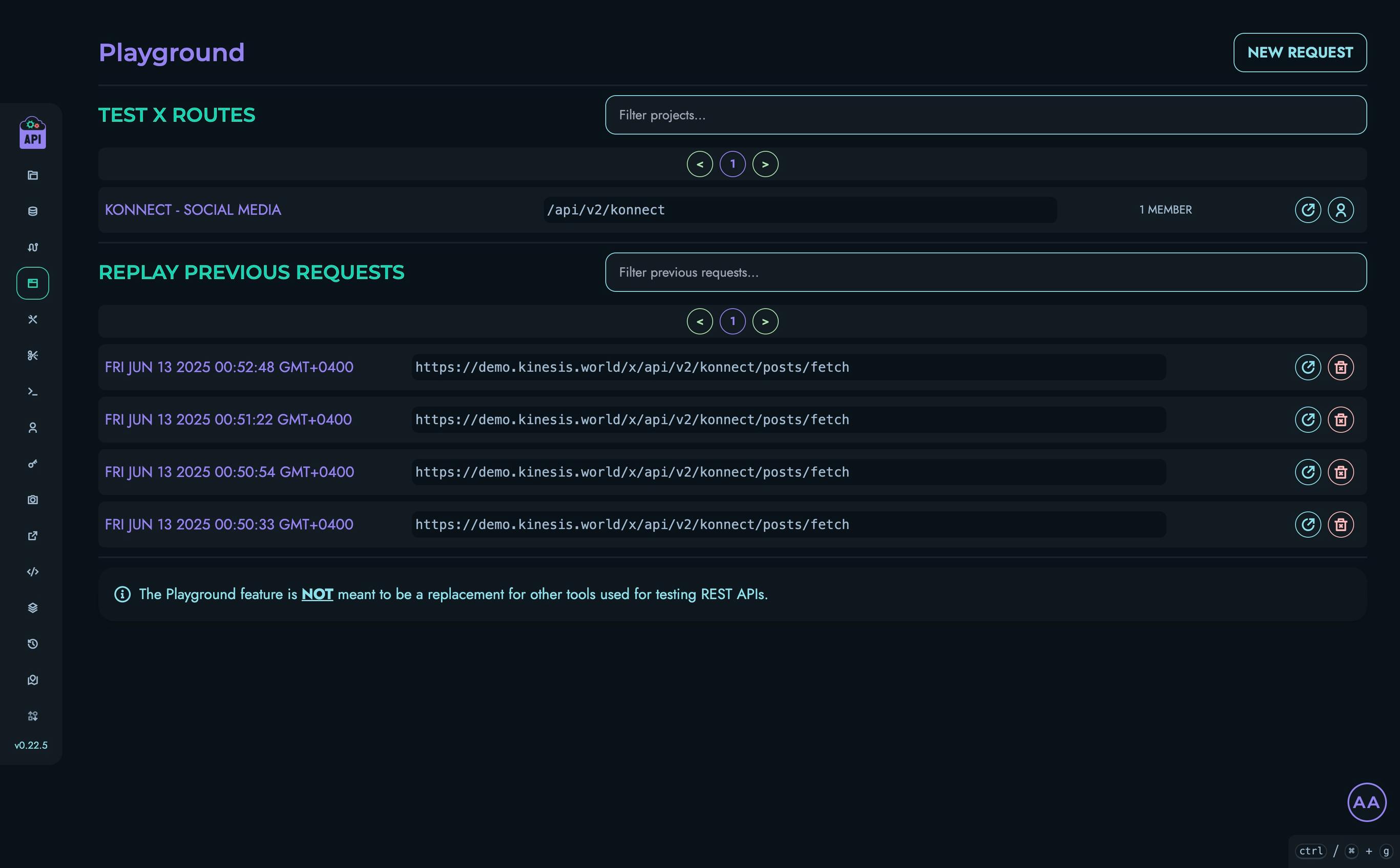

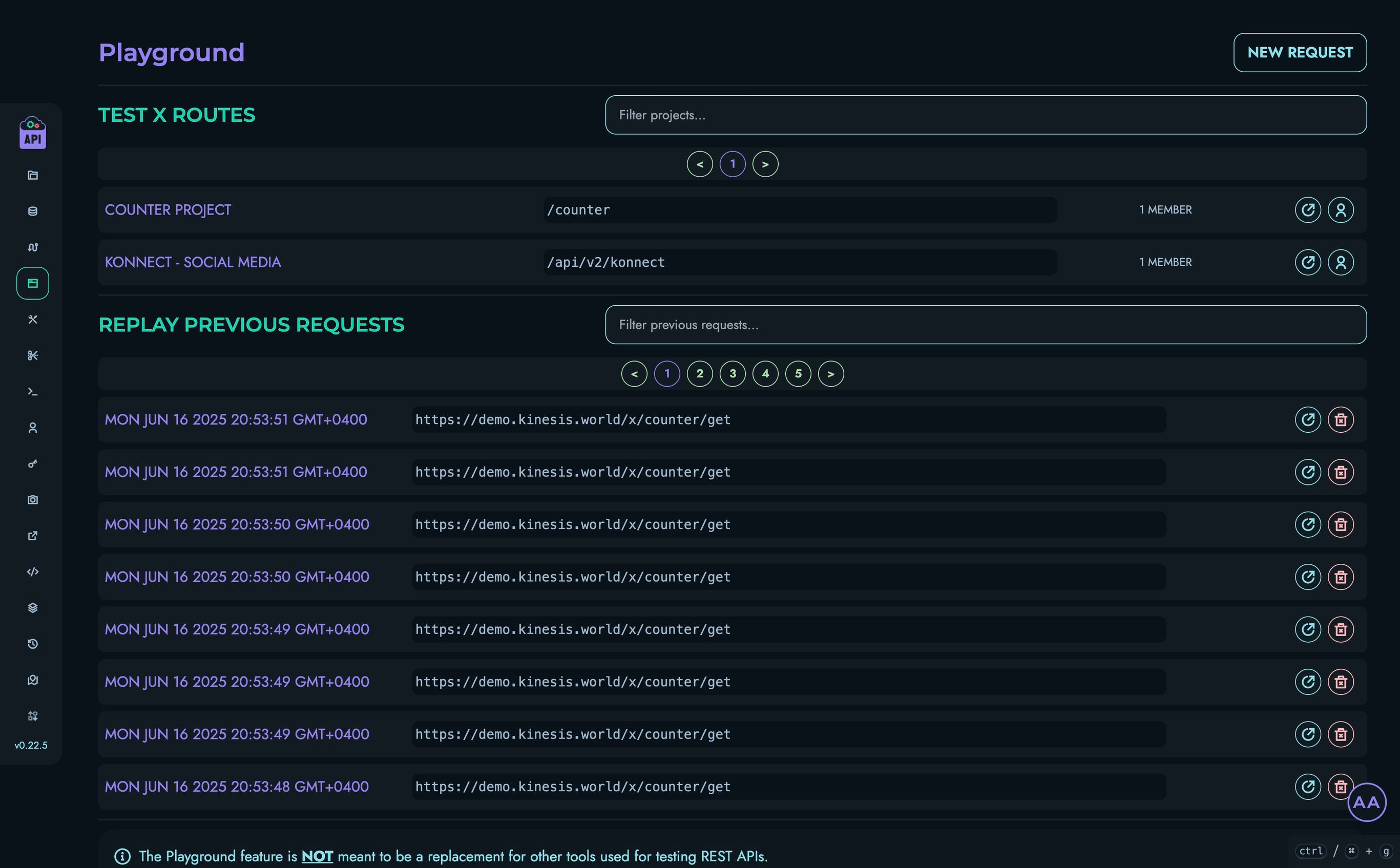

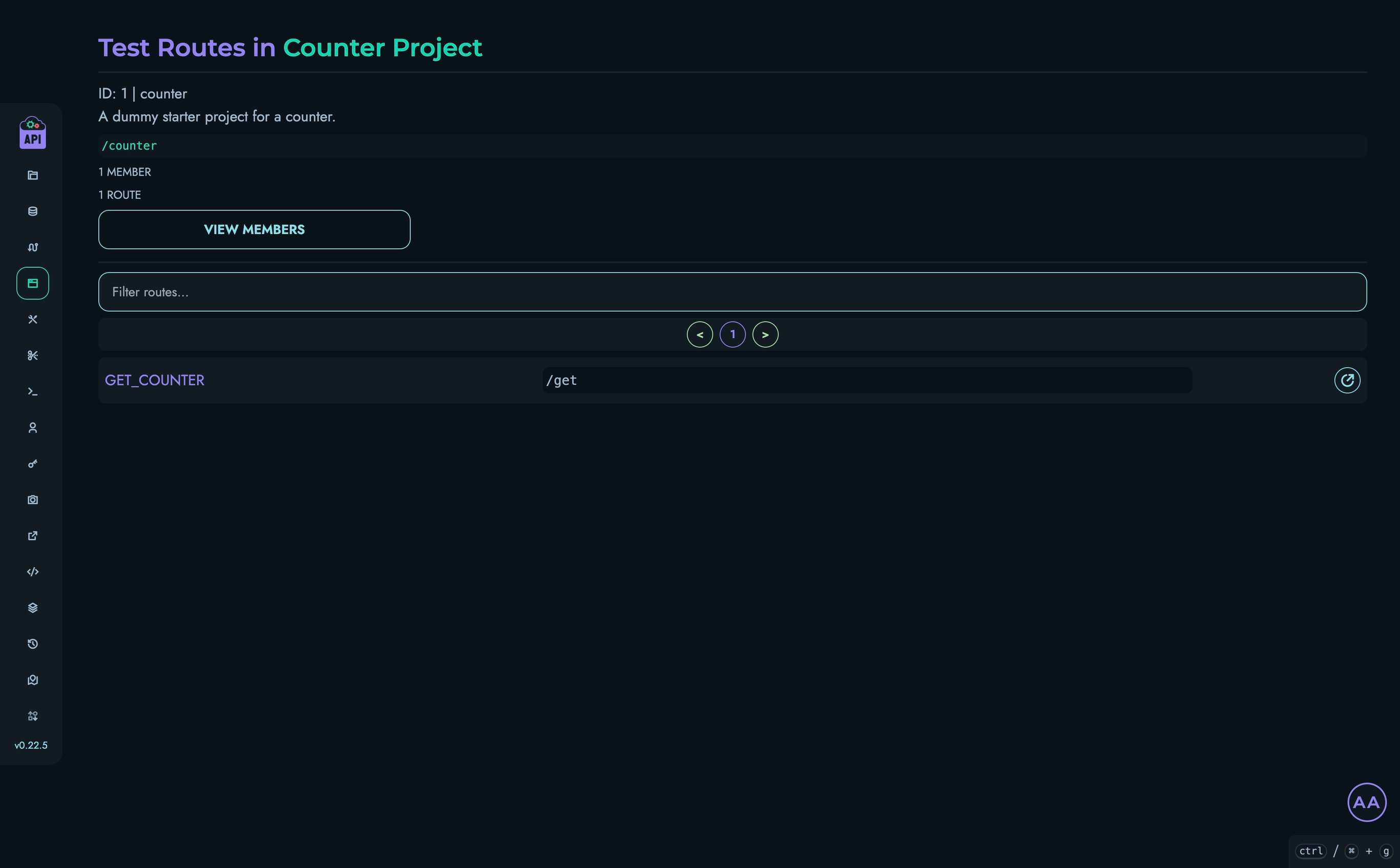

- Explore the playground for testing your APIs

Thank you for choosing Kinesis API. We’re excited to see what you’ll build!

Kinesis DB

Kinesis DB is a custom-built, ACID-compliant embedded database system written entirely in Rust. It forms the core data storage and management component of the Kinesis API platform, eliminating dependencies on external database systems while providing significant performance advantages.

Key Features

Multiple Storage Engines

Kinesis DB offers multiple storage engines to match your application’s specific needs:

-

In-Memory Engine: Ultra-fast, volatile storage ideal for temporary data, caching, or situations where persistence isn’t required. Provides maximum performance but data is lost on shutdown.

-

On-Disk Engine: Durable storage with ACID guarantees for critical data. Ensures data survives system restarts and power failures through persistent storage and write-ahead logging.

-

Hybrid Engine: Combines the speed of in-memory operations with the durability of on-disk storage. Uses intelligent caching with persistent backing for balanced performance, making it the recommended default choice for most applications.

Schema Management

Kinesis DB provides robust schema management capabilities:

-

Flexible Schema Definition: Create and modify schemas at runtime, allowing your data model to evolve with your application’s needs.

-

Strong Type System: Supports various data types with strict validation to ensure data integrity.

-

Comprehensive Constraints:

- Required fields (non-null constraints)

- Unique constraints to prevent duplicate values

- Default values for fields

- Pattern matching for string fields using regular expressions

- Min/max value constraints for numeric fields

- Custom validation rules

-

Schema Versioning: Track and manage schema changes over time.

Transaction Support

Kinesis DB is designed with full ACID compliance:

-

Atomicity: All operations within a transaction either complete fully or have no effect.

-

Consistency: Transactions bring the database from one valid state to another, maintaining all defined constraints.

-

Isolation: Multiple isolation levels to control how concurrent transactions interact:

ReadUncommitted: Lowest isolation, allows dirty readsReadCommitted: Prevents dirty readsRepeatableRead: Prevents non-repeatable readsSerializable: Highest isolation, ensures transactions execute as if they were sequential

-

Durability: Once a transaction is committed, its changes are permanent, even in the event of system failure.

-

Deadlock Detection and Prevention: Automatic detection and resolution of transaction deadlocks.

-

Write-Ahead Logging (WAL): Ensures durability and supports crash recovery.

Performance Optimizations

Kinesis DB incorporates several performance optimizations:

-

Efficient Buffer Pool Management: Minimizes disk I/O by caching frequently accessed data in memory.

-

Configurable Caching Strategies: Tune caching behavior to match your workload characteristics.

-

Automatic Blob Storage: Large string values are automatically managed for efficient storage and retrieval.

-

Asynchronous I/O Operations: Non-blocking I/O to maximize throughput.

-

Indexing: Supports various indexing strategies to speed up queries.

-

Query Optimization: Intelligent query planning and execution.

Query Interface

Kinesis DB provides an intuitive query interface:

-

SQL-Inspired Command Syntax: Familiar syntax for developers with SQL experience.

-

CRUD Operations: Comprehensive support for Create, Read, Update, and Delete operations.

-

Data Search Capabilities:

- Equality matching

- Range queries

- Pattern matching with regular expressions

- Full-text search capabilities

-

Multiple Output Formats:

- Standard output

- JSON formatting for API responses

- Table format for human-readable outputs

Configuration

Kinesis DB can be configured through environment variables:

| Variable | Description | Possible Values | Default |

|---|---|---|---|

DB_NAME | Name of the database (affects file names) | Any valid filename | main_db |

DB_STORAGE_ENGINE | Select storage engine | memory, disk, hybrid | hybrid |

DB_ISOLATION_LEVEL | Default transaction isolation level | read_uncommitted, read_committed, repeatable_read, serializable | serializable |

DB_BUFFER_POOL_SIZE | Configure the buffer pool size | Any positive integer | 100 |

DB_AUTO_COMPACT | Enable/disable automatic database compaction | true, false | true |

DB_RESTORE_POLICY | Control how transactions are recovered after a crash | discard, recover_pending, recover_all | recover_pending |

Best Practices

Performance Optimization

- Choose the appropriate storage engine for your use case

- Tune the buffer pool size based on your memory availability and working set size

- Use indexes for frequently queried fields

- Batch related operations in transactions

- Consider denormalizing data for read-heavy workloads

Data Integrity

- Use constraints to enforce business rules at the database level

- Always use transactions for related operations

- Implement proper error handling for database operations

- Regularly back up your data

Schema Design

- Design schemas with future growth in mind

- Use appropriate data types for each field

- Consider query patterns when designing your schema

- Use meaningful field names and conventions

Related Documentation

- Data Management - How to manage data in Kinesis API

- Structures - Working with data structures

- Backups - Backing up your Kinesis DB data

Current Schema

The above diagram illustrates the current database schema used in Kinesis API. The schema represents the relationships between core components of the system, including users, configs, projects, collections, structures and data objects. This schema is implemented directly in Kinesis DB, leveraging the type system and constraints described earlier to ensure data integrity across all operations.

Conclusion

Kinesis DB provides a powerful, embedded database solution that combines performance, reliability, and ease of use. By integrating directly with Kinesis API, it eliminates the need for external database dependencies while providing all the features expected of a modern database system.

X Engine

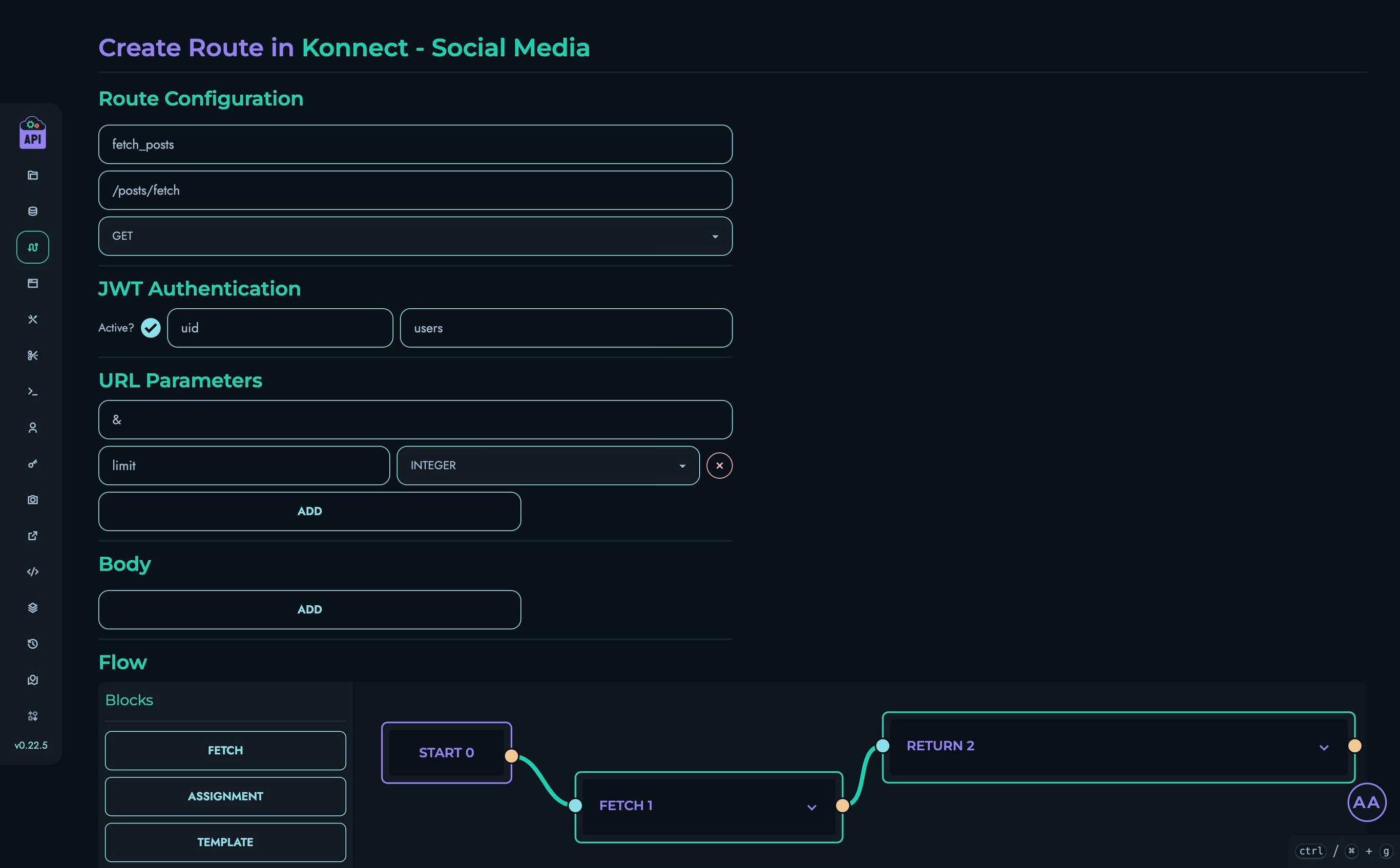

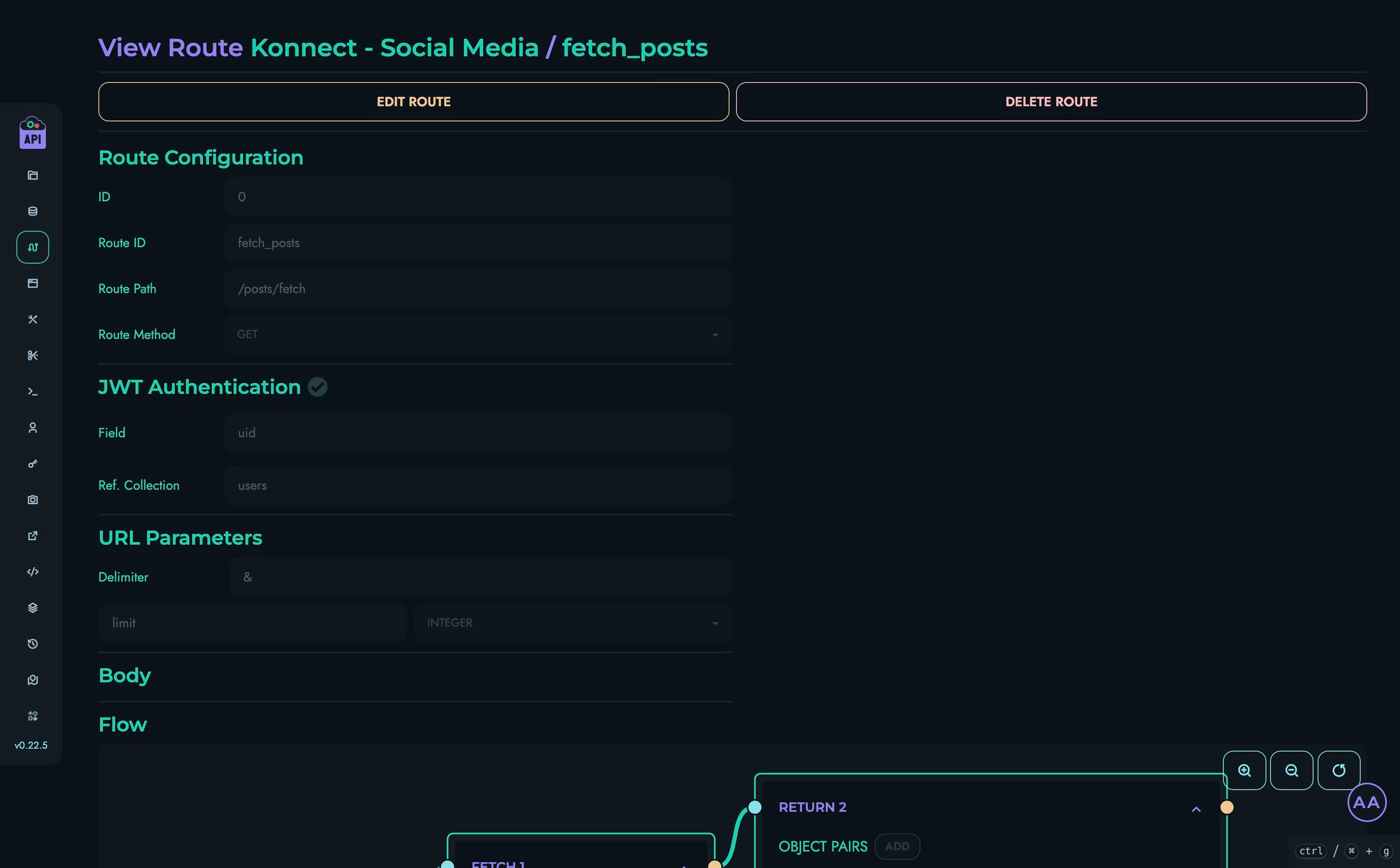

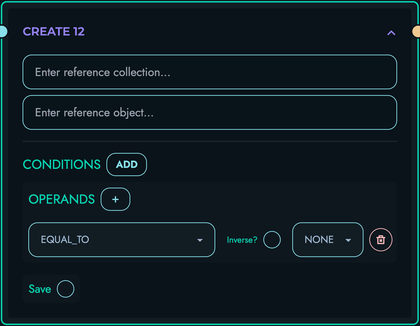

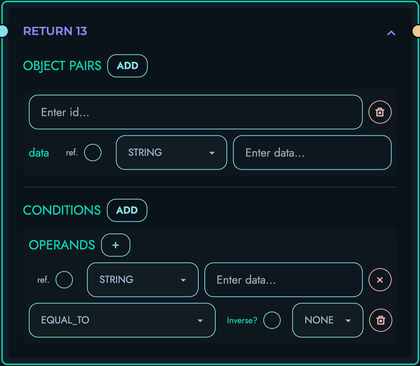

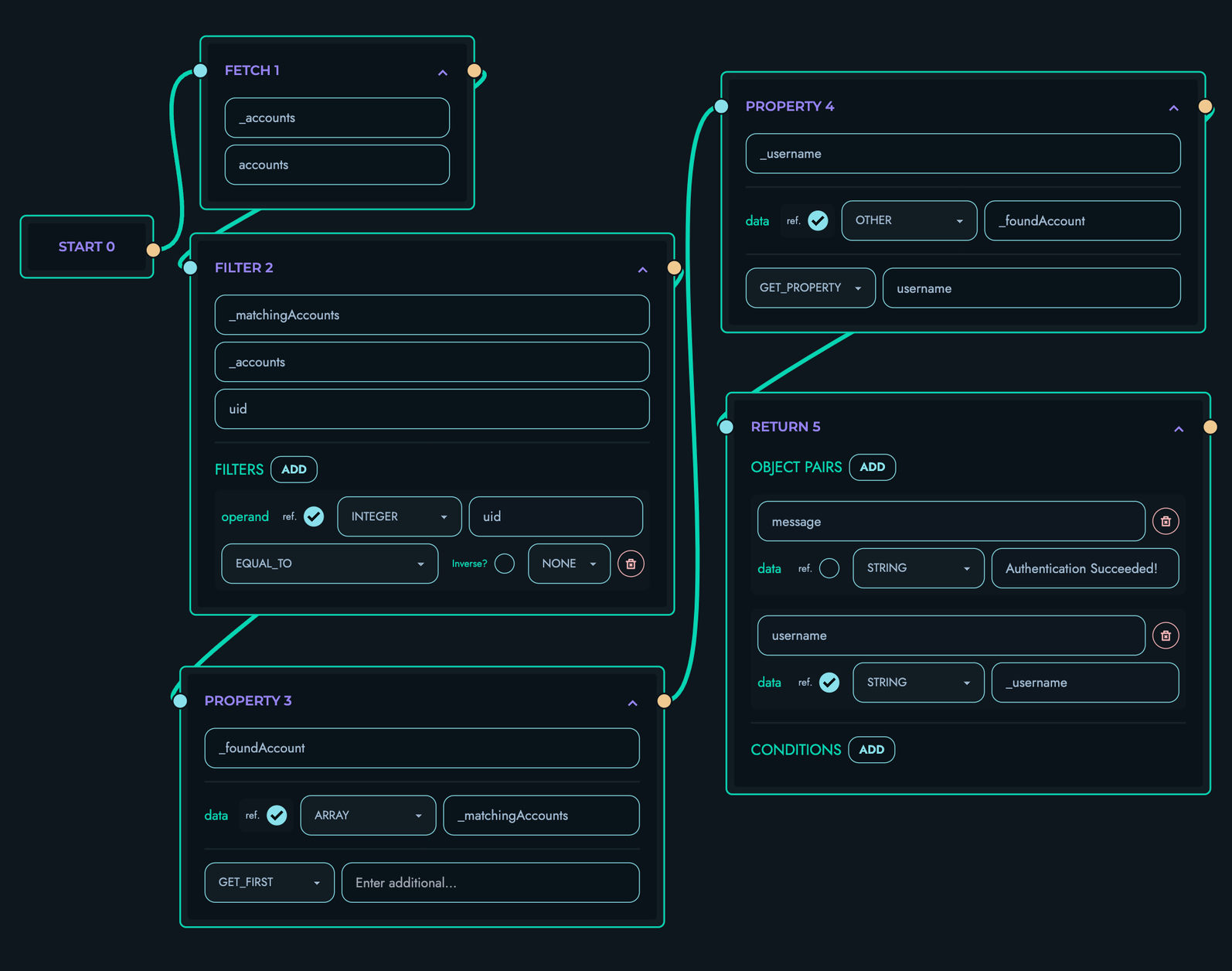

The X Engine is the core system that powers Kinesis API’s visual API development capabilities. It allows developers to design, implement, and deploy complex API routes using a block-based visual system, dramatically reducing the learning curve and development time typically associated with API creation.

Overview

At its essence, the X Engine is an execution framework that transforms visual blocks into functional API endpoints. By abstracting away much of the underlying complexity, it enables developers to focus on business logic rather than implementation details. The X Engine’s modular architecture makes it both powerful for experienced developers and accessible to those with less backend expertise.

Core Components

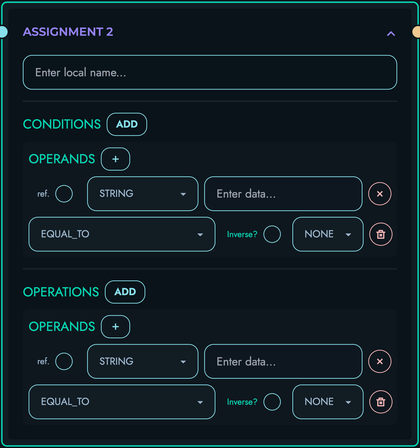

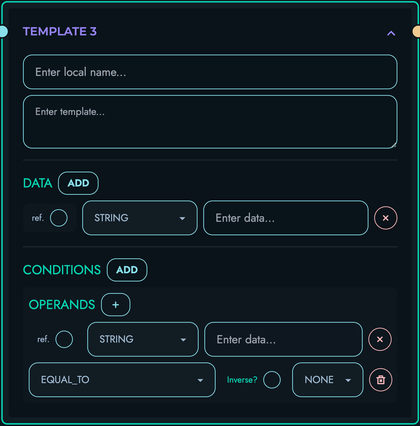

The X Engine consists of four primary component types that work together to handle API requests:

Processors

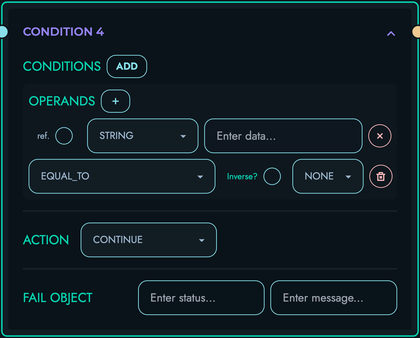

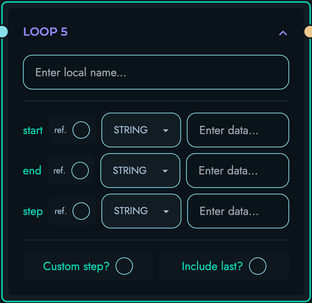

Processors control the execution flow of your API logic:

- Function: Handle the flow of execution within an API route

- Examples:

Loop: Iterate through collections of dataIf/Else: Implement conditional logicTry/Catch: Handle errors gracefullyReturn: Send a response back to the clientBreak: Exit from a loopFail: Trigger an error state with a specific message

Processors are the backbone of API route logic, allowing you to implement complex algorithms and workflows through visual components.

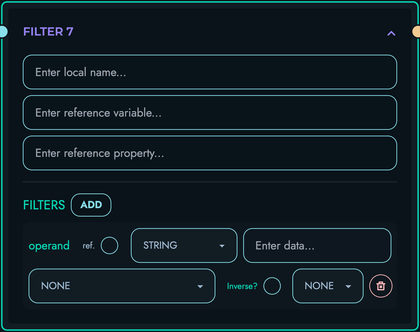

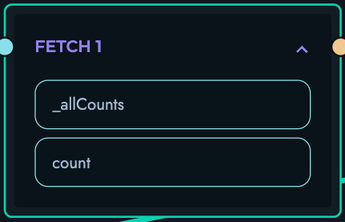

Resolvers

Resolvers map requests to the appropriate data sources:

- Function: Retrieve and manipulate data from various sources

- Examples:

Table: Query the Kinesis DB for recordsRequest: Extract data from the incoming API requestAuth: Access authentication informationState: Manage state between route executionsConfig: Retrieve configuration valuesExternal: Connect to external APIs or services

Resolvers serve as the bridge between your API endpoints and the data they need to operate, whether from internal or external sources.

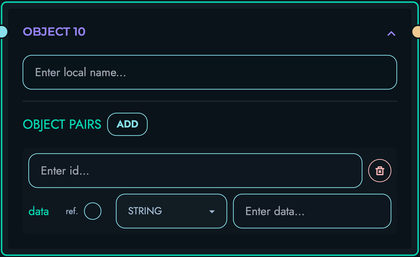

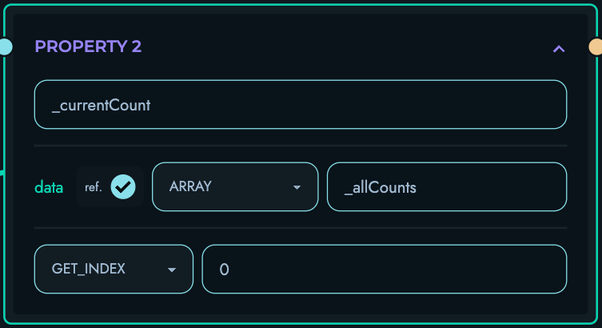

Convertors

Convertors transform data between different formats:

- Function: Transform and validate data during processing

- Examples:

String: Manipulate text dataNumber: Perform mathematical operationsBoolean: Evaluate logical conditionsArray: Work with collections of itemsObject: Manipulate structured dataJSON: Parse and stringify JSON dataDate: Handle date and time operations

Convertors ensure that data is in the correct format at each stage of processing, reducing errors and simplifying data manipulation.

Definitions

Definitions specify the expected structure of data:

- Function: Define the expected result format for each block

- Examples:

Schema: Define the structure of data objectsResponse: Specify the format of API responsesRequest: Describe expected request formatsError: Define standardized error structures

Definitions act as contracts between different parts of your API, ensuring consistency and making your API more predictable and easier to use.

How It Works

The X Engine processes API requests through the following stages:

-

Request Reception: When an API endpoint receives a request, the X Engine initializes the execution context.

-

Block Execution: The engine processes each block in sequence according to the route’s configuration, with processors controlling the flow.

-

Data Retrieval: Resolvers fetch necessary data from appropriate sources.

-

Data Transformation: Convertors format and transform data as needed.

-

Validation: The engine validates data against definitions at various stages.

-

Response Generation: Finally, a response is constructed and returned to the client.

This entire process is visually designed through the Kinesis API interface, allowing you to create sophisticated API logic without writing traditional code.

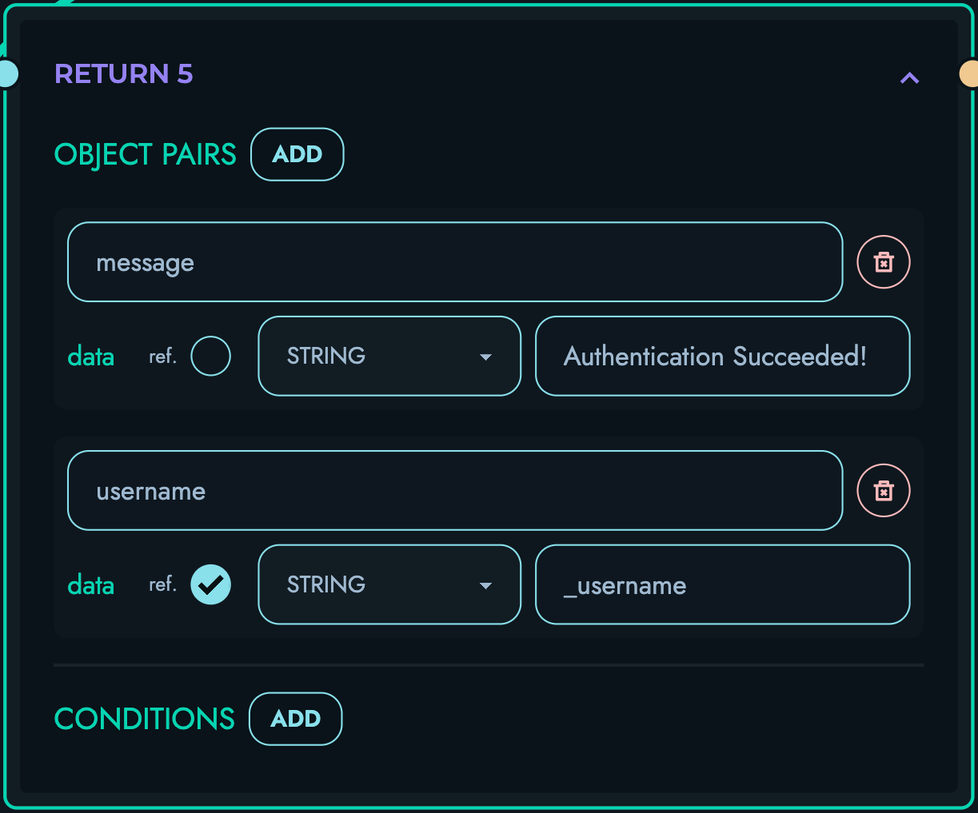

Block Connections

Blocks in the X Engine are connected through a visual interface that represents the flow of data and execution:

- Inputs: Each block can accept inputs from other blocks or direct values

- Outputs: Blocks produce outputs that can be used by subsequent blocks

- Conditions: Control blocks (like If/Else) can have multiple output paths

- Variables: Named references allow data to flow between different parts of your route

The visual representation makes it easy to understand complex flows and troubleshoot issues.

Visual Editor

The X Engine is integrated with a visual editor in the Kinesis API web interface, providing:

- Drag-and-drop Interface: Easily add and arrange blocks

- Real-time Validation: Immediate feedback on configuration issues

- Testing Tools: Test your routes directly from the editor

- Version History: Track changes to your routes over time

- Visual Debugging: Follow execution flow with visual indicators

The editor makes creating complex API routes accessible to developers of all skill levels.

Advanced Features

The X Engine includes several advanced features, some of which are still in active development, for complex API development:

Authentication Integration

The X Engine seamlessly integrates with Kinesis API’s authentication system:

- Role-based Access: Control which users can access specific routes

- JWT Validation: Automatically validate authentication tokens

- Permission Checking: Enforce granular permissions within routes

Custom Functions

Extend the X Engine with custom functions:

- Reusable Logic: Create custom blocks for frequently used operations

- Library Integration: Wrap third-party libraries in custom blocks

- Complex Algorithms: Implement specialized business logic as reusable components

Middleware Support

Apply consistent processing across multiple routes:

- Pre-processing: Validate requests before main processing

- Post-processing: Format responses consistently

- Error Handling: Implement global error management

Versioning

Manage API changes over time:

- Route Versioning: Maintain multiple versions of the same endpoint

- Migration Paths: Provide smooth transitions between versions

- Deprecation Management: Gracefully phase out older endpoints

Best Practices

Performance Optimization

- Minimize database queries by combining resolvers where possible

- Use caching for frequently accessed data

- Process only the data you need using selective field retrieval

Security Considerations

- Always validate user input through definitions

- Implement proper authentication and authorization

- Use parametrized queries to prevent injection attacks

- Avoid exposing sensitive data in responses

Maintainability

- Name blocks clearly to document their purpose

- Group related functionality into logical sections

- Comment complex logic for future reference

- Use consistent patterns across similar routes

Testing

- Test edge cases and error conditions

- Validate response formats against your definitions

- Check performance under various load conditions

- Test with realistic data volumes

Related Documentation

- API Reference - Complete reference for all available API endpoints

- Routes - How to create and manage API routes in Kinesis API

- Playground - Interactive environment for testing your APIs

Conclusion

The X Engine represents a paradigm shift in API development, combining the power and flexibility of traditional programming with the accessibility and speed of visual development. By abstracting complex implementation details while maintaining full capability, it enables developers of all skill levels to create professional-grade APIs in a fraction of the time typically required.

Whether you’re prototyping a simple API or building complex, interconnected systems, the X Engine provides the tools to accelerate development without sacrificing quality or control.

API Reference

The Kinesis API provides a comprehensive set of RESTful endpoints that allow you to interact with all aspects of the platform programmatically. This reference documentation will help you understand how to authenticate, make requests, and interpret responses when working with the API.

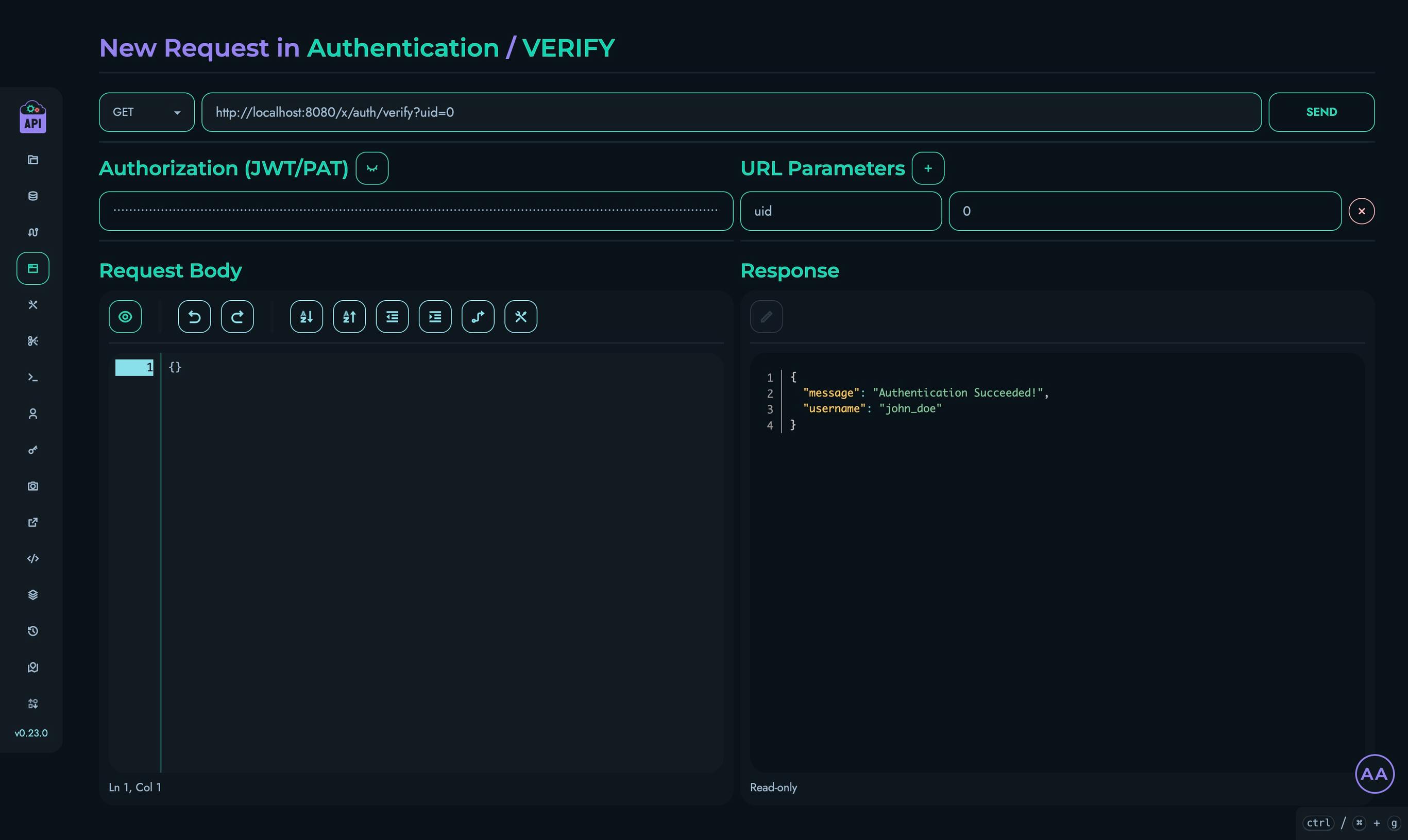

Accessing the API Documentation

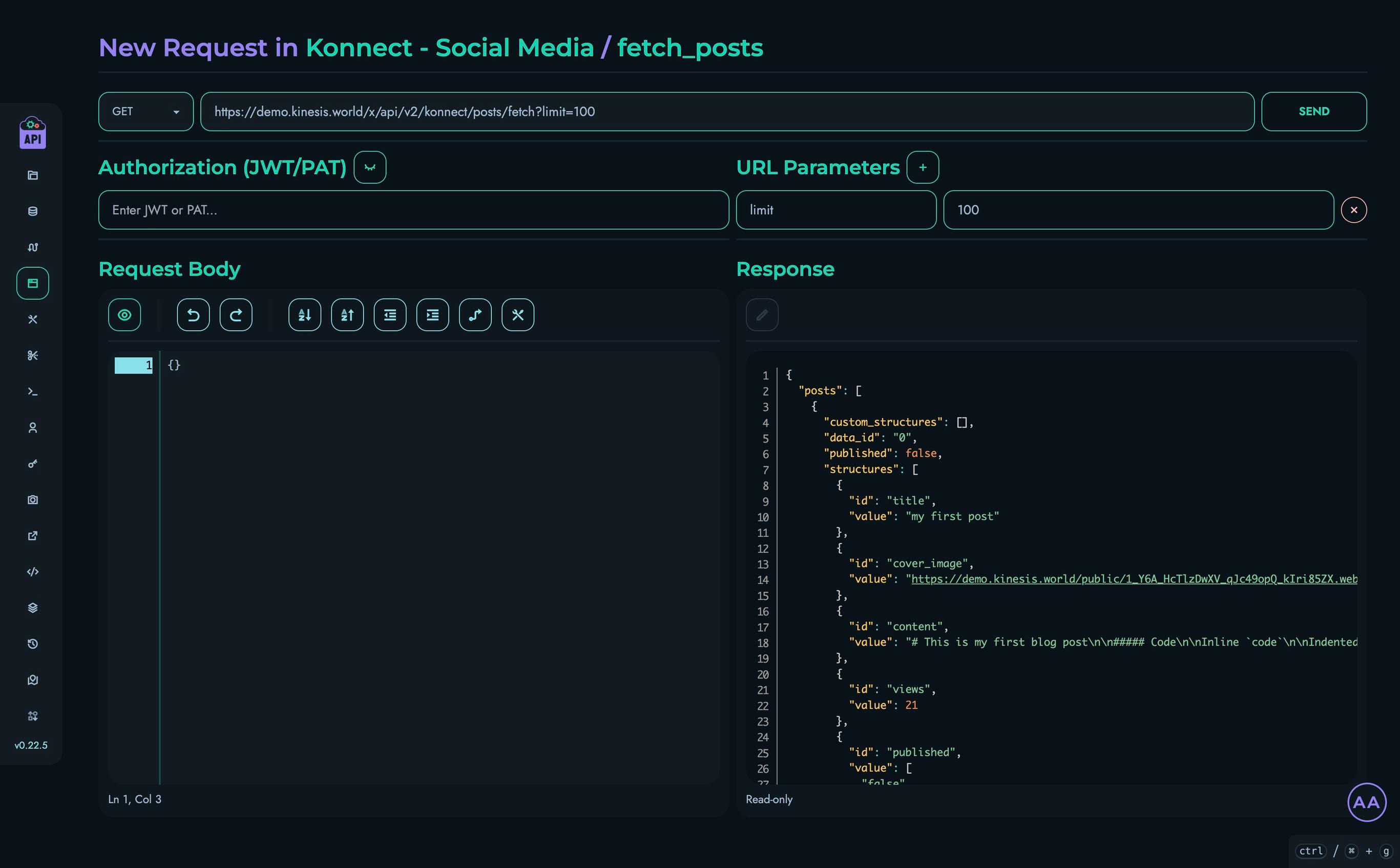

Kinesis API includes interactive OpenAPI documentation that allows you to:

- Browse all available endpoints

- View request and response schemas

- Test API calls directly from your browser

- Understand authentication requirements

You can access this documentation at:

- Local installation:

http://your-server-address/scalar - Official instance: https://api.kinesis.world/scalar

Authentication

Most API endpoints require authentication using one of the following methods:

Personal Access Tokens (PAT)

The recommended method for programmatic access is using Personal Access Tokens:

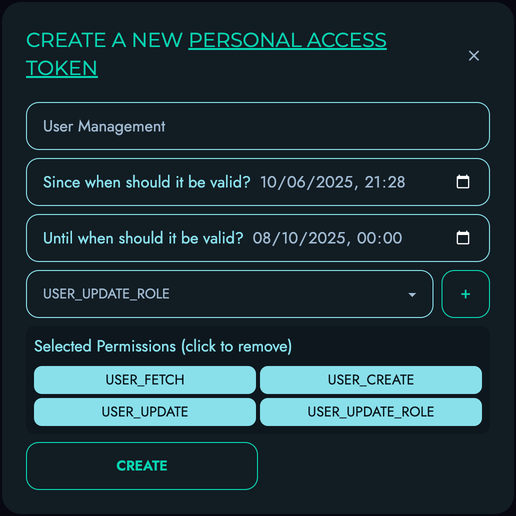

- Generate a token in the web interface under Personal Access Tokens or PATs

- Include the token in your requests using the

Authorizationheader:

Authorization: Bearer your-token-here

Session-based Authentication

For web applications, you can use session-based authentication:

- Call the

/user/loginendpoint with valid credentials - Store the returned token

- Include the token in subsequent requests

Common Request Patterns

Standard Request Format

Most endpoints follow this pattern:

- GET endpoints accept query parameters

- DELETE endpoints accept query parameters

- POST endpoints accept JSON data in the request body

- PATCH endpoints accept JSON data in the request body

- All endpoints return JSON responses

Request Example

POST /user/login HTTP/1.1

Host: api.kinesis.world

Content-Type: application/json

{

"auth_data": "john_doe",

"password": "Test123*"

}

Response Example

{

"status": 200,

"message": "Login Successful!",

"user": {

"id": 1,

"first_name": "John",

"last_name": "Doe",

"username": "john_doe",

"email": "john_doe@example.com",

"password": "",

"role": "VIEWER",

"reset_token": "",

"bio": "",

"profile_picture": "",

"is_public": false,

"links": []

},

"uid": 1,

"jwt": "eyJhbGciOiJIUzI1NiJ9.eyJzdWIiOiIxIiwiZXhwIjoxNjcyNTI2NDAwfQ.example_token"

}

Response Structure

All API responses follow a consistent structure:

{

"status": 200,

"message": "Operation successful",

"data": {

// Optional: Operation-specific data

}

}

Status Codes

The API uses standard HTTP status codes:

- 2xx: Success

- 200: OK

- 201: Created

- 204: No Content

- 4xx: Client errors

- 400: Bad Request

- 401: Unauthorized

- 403: Forbidden

- 404: Not Found

- 422: Unprocessable Entity

- 5xx: Server errors

- 500: Internal Server Error

Error Handling

Error responses follow the same structure and include error details in the message field itself:

{

"status": 400,

"message": "Error: Invalid input data"

}

Pagination

For endpoints that return collections of items, pagination is supported:

- Use

offsetandlimitquery parameters to control pagination - Responses include

amountfor the total amount of items

Example:

GET /config/fetch/all?uid=0&offset=2&limit=10

Using the API with the X Engine

The X Engine’s visual API builder generates endpoints that follow the same patterns and conventions as the core Kinesis API. When you publish a route in the X Engine, it becomes available as a standard RESTful endpoint that can be accessed using the same authentication mechanisms.

Related Documentation

- Routes - More information on route management

- Personal Access Tokens - Detailed guide on token management

Demo

Kinesis API offers a live demo instance where you can explore the platform’s features without setting up your own installation. This allows you to get hands-on experience with the system and follow along with our tutorials using a ready-to-use environment.

Demo Instance

The demo instance is available at:

https://demo.kinesis.world/web/

Important Notice

⚠️ Data Erasure Warning: All data on the demo instance is automatically erased at regular intervals (typically every 24 hours at midnight UTC+4). Do not use the demo instance for storing any important information or for production purposes.

Default Credentials

You can access the demo instance using the following default credentials:

| Role | Username | Password |

|---|---|---|

| Root | root | Test123* |

What You Can Try

The demo instance is fully functional and provides access to all features of Kinesis API. Here are some activities you can explore:

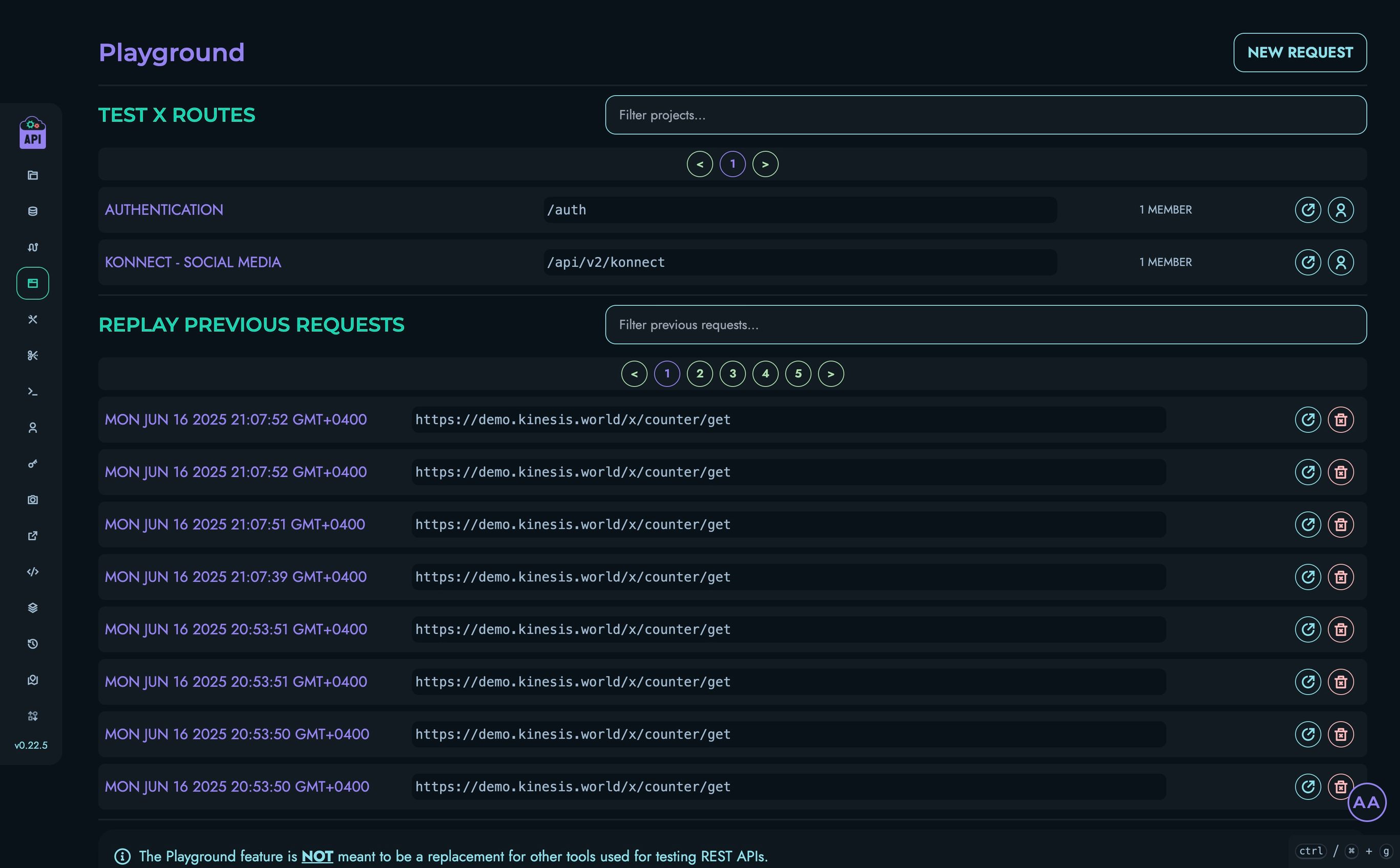

- Create and manage API routes using the X Engine

- Define data structures and collections

- Test API endpoints using the built-in playground

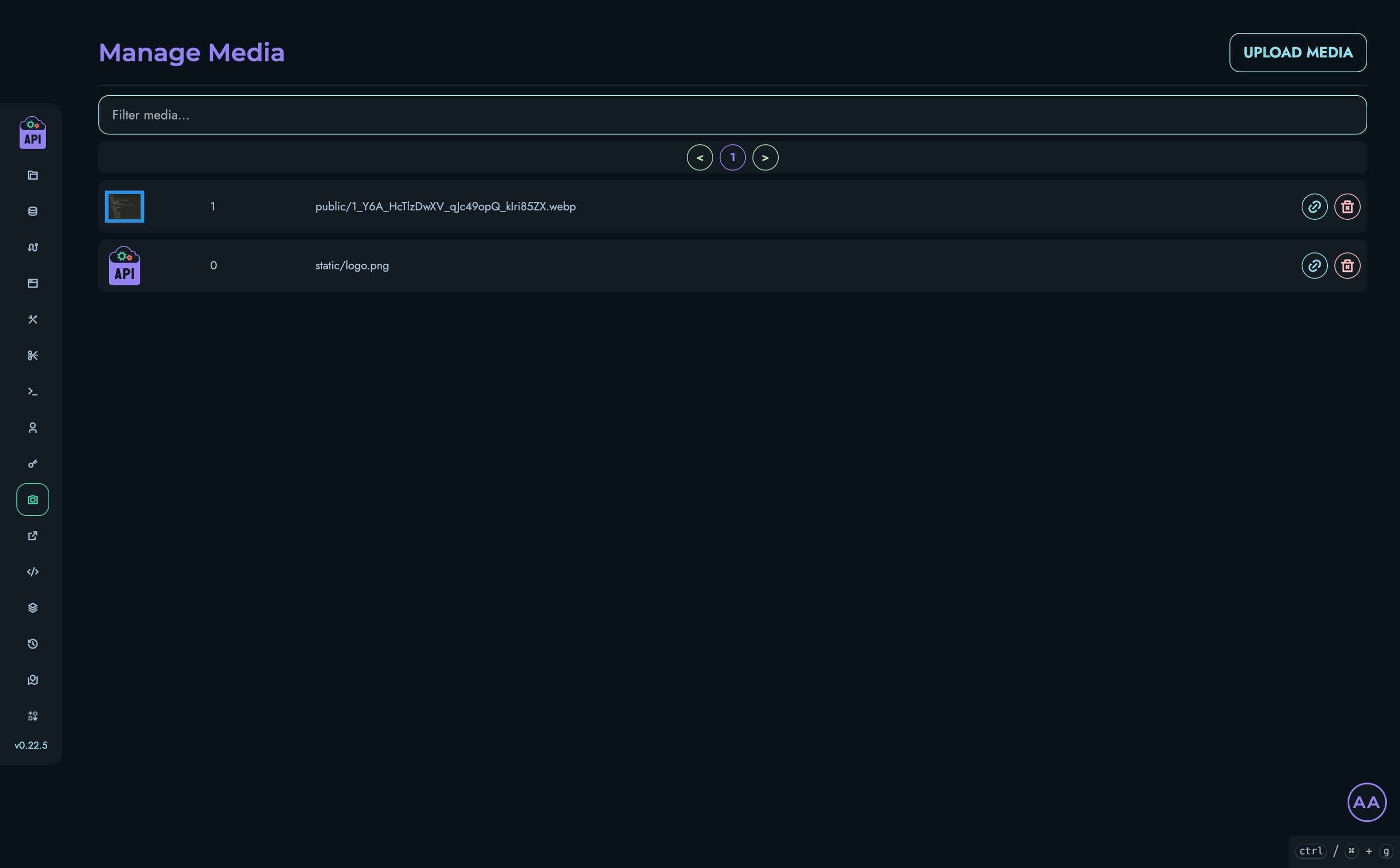

- Upload and manage media files

- Configure user settings and preferences

Following Tutorials

All tutorials in our documentation can be followed using the demo instance. When a tutorial refers to “your Kinesis API installation,” you can use the demo instance instead.

Limitations

The demo instance has some limitations you should be aware of:

- Some security settings (such as CORS policies) aren’t properly configured, as the instance is intended for demonstration purposes only

- Email functionality is disabled, preventing features like user registration and password reset from working completely

- Media uploads are restricted to a maximum of 20MB per file to preserve server resources

- The database may experience occasional performance throttling during periods of high user activity

Next Steps

Once you’ve explored the demo and are ready to set up your own instance:

- Follow the Installation Guide to install Kinesis API on your system

- Complete the Initialization process to set up your instance with default values

- Configure your new installation using the Setup Guide to customize it for your specific needs

Feedback

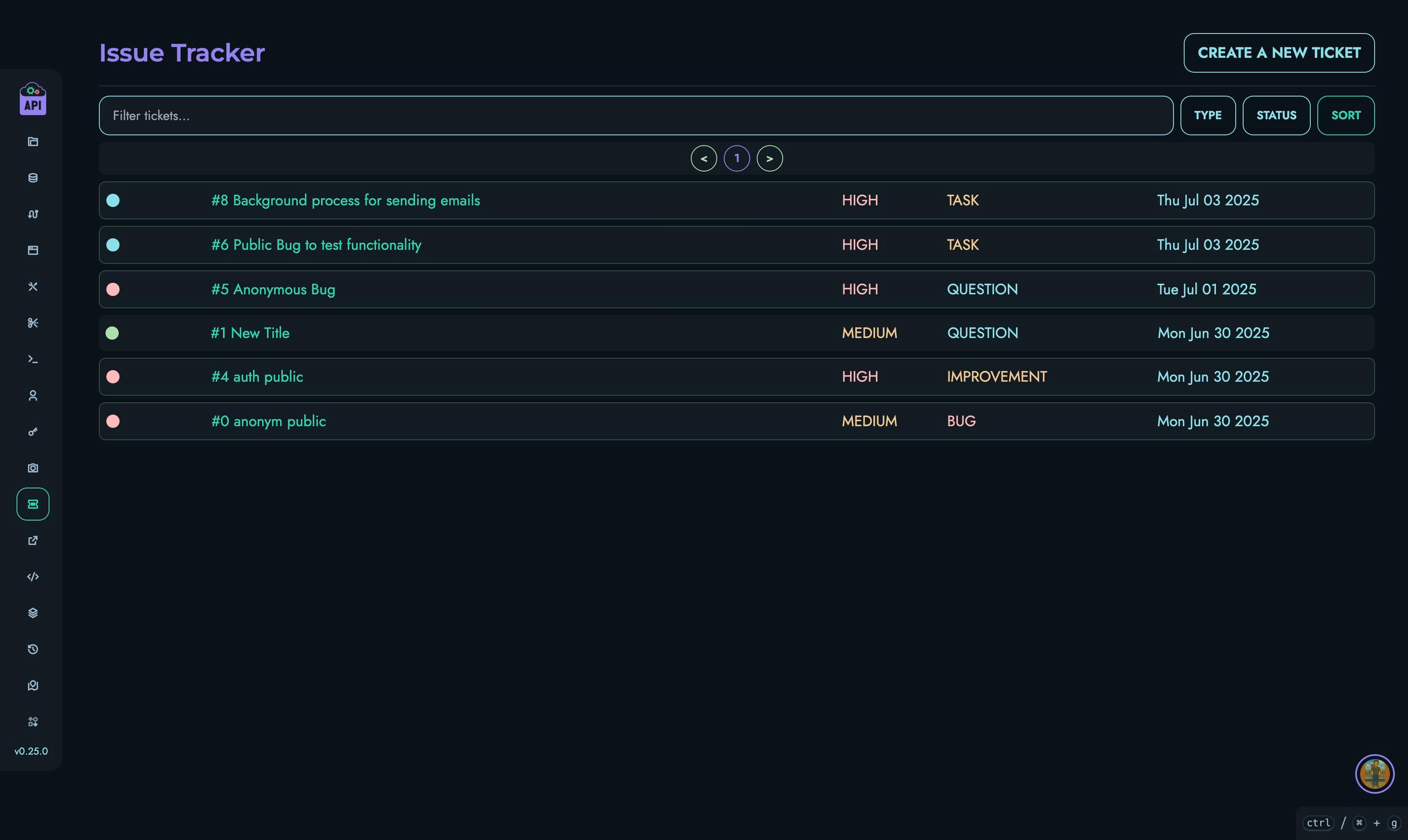

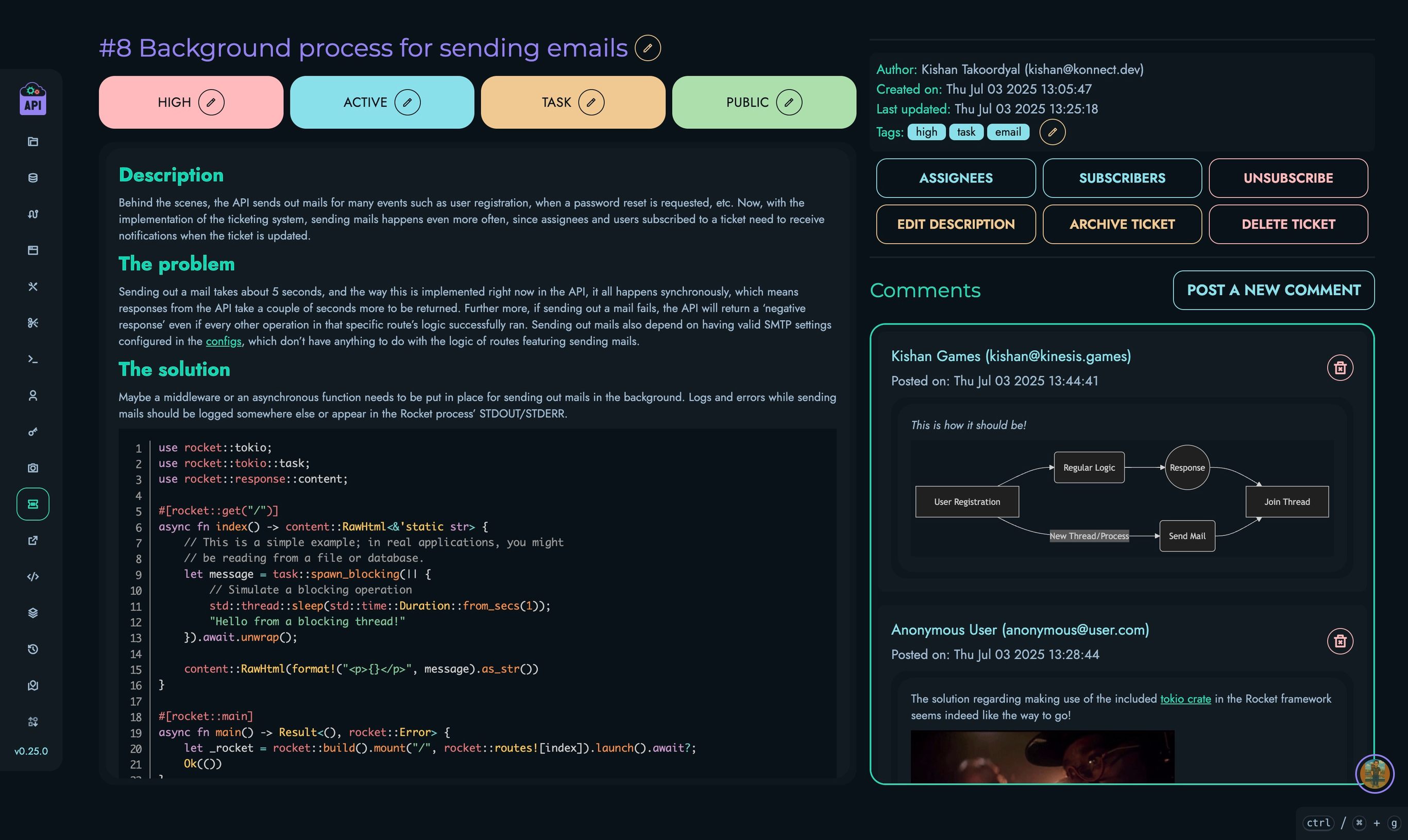

We welcome feedback on your experience with the demo instance. If you encounter any issues or have suggestions for improvements, please contact us through the contact page or by emailing support@kinesis.world. We also encourage you to create a new ticket in the issue tracker.

Getting Started with Kinesis API

Welcome to the Getting Started guide for Kinesis API. This section will walk you through the process of installing, initializing, and setting up your first Kinesis API instance.

Prefer video tutorials? You can follow along with our YouTube walkthrough of this same project.

What You’ll Learn

In this section, you’ll learn how to:

- Install Kinesis API using Docker or Rust

- Initialize your installation with default settings

- Secure your instance with proper credentials

- Understand the key components created during initialization

- Configure your system for production use

Quick Start Overview

Getting Kinesis API up and running involves two main steps:

- Installation: Set up the Kinesis API software on your system

- Initialization: Configure the system with initial data and settings

If you’re eager to start right away, follow these quick steps:

# Create necessary directories

mkdir -p data/ public/ translations/

# Create configuration file

echo "TMP_PASSWORD=yourSecurePassword" > .env

echo "API_URL=http://your-domain-or-ip:8080" >> .env

# Run the Docker container

docker run --name kinesis-api \

-v $(pwd)/.env:/app/.env \

-v $(pwd)/data:/app/data \

-v $(pwd)/public:/app/public \

-v $(pwd)/translations:/app/translations \

-p 8080:8080 -d \

--restart unless-stopped \

edgeking8100/kinesis-api:latest

# Initialize the system

curl "http://your-domain-or-ip:8080/init?code=code"

Then access the web interface at http://your-domain-or-ip:8080/web and log in with:

- Username:

root - Password:

Test123*

Remember to change the default password immediately!

Detailed Guides

For more detailed instructions, refer to these guides:

- Installation Guide - Comprehensive instructions for setting up Kinesis API

- Initialization Guide - Detailed steps for initializing your installation

System Requirements

Before you begin, ensure your system meets these minimum requirements:

- Memory: 128MB RAM minimum (512MB+ recommended for production)

- CPU: 1 core minimum (2+ cores recommended)

- Storage: 100MB for installation + additional space for your data

- Operating System: Any OS that can run Docker or Rust

- Network: Outbound internet access for installation

Next Steps After Installation

Once you’ve completed the installation and initialization process, you’ll want to:

Let’s get started with the Installation Guide.

Installation

This guide covers how to install and set up Kinesis API on your system. We offer two installation methods: Docker (recommended for most users) and direct Rust installation (useful for developers contributing to the project).

System Requirements

Before installing Kinesis API, ensure your system meets these minimum requirements:

- Memory: 128MB RAM minimum (512MB+ recommended for production use)

- CPU: 1 core minimum (2+ cores recommended for production)

- Storage: 100MB for installation + additional space for your data

- Operating System: Any OS that can run Docker or Rust (Linux, macOS, Windows)

- Network: Outbound internet access for installation

Configuration Options

Before installing Kinesis API, you should decide on your configuration settings. These can be set through environment variables.

Essential Environment Variables

| Variable | Description | Example |

|---|---|---|

TMP_PASSWORD | Temporary password for setup | StrongPassword123! |

API_URL | URL where Kinesis API will be accessed | http://localhost:8080 |

Database Configuration

You can configure the database system through additional environment variables:

| Variable | Description | Possible Values | Default |

|---|---|---|---|

DB_NAME | Database filename (for Kinesis DB) | Any valid filename | main_db |

DB_STORAGE_ENGINE | Storage engine type (for Kinesis DB) | memory, disk, hybrid | hybrid |

DB_ISOLATION_LEVEL | Transaction isolation level (for Kinesis DB) | read_uncommitted, read_committed, repeatable_read, serializable | serializable |

DB_BUFFER_POOL_SIZE | Buffer pool size (for Kinesis DB) | Any positive integer | 100 |

DB_AUTO_COMPACT | Automatic compaction (for Kinesis DB) | true, false | true |

DB_RESTORE_POLICY | Recovery policy (for Kinesis DB) | discard, recover_pending, recover_all | recover_pending |

DB_BACKEND | Database backend to use | kinesis_db, kinesisdb, sqlite, mysql, postgresql, postgres, pgsql | kinesis_db |

DATABASE_URL | Database connection string or file path | File path for SQLite, connection URI for MySQL/PostgreSQL | data/db.sqlite (for SQLite) |

Database Backend Options

-

Kinesis DB (default): Custom-built database optimized for Kinesis API

- Set

DB_BACKEND=kinesis_dborDB_BACKEND=kinesisdb(or leave unset) - Uses the

DB_NAME,DB_STORAGE_ENGINE, and other Kinesis DB-specific variables

- Set

-

SQLite: Embedded database, no separate server required

- Set

DB_BACKEND=sqlite - Optionally set

DATABASE_URLto specify the.sqlitefile path (default:data/db.sqlite)

- Set

-

MySQL: Popular open-source relational database

- Set

DB_BACKEND=mysql - Required: Set

DATABASE_URLto the MySQL connection URI - Example:

DATABASE_URL=mysql://username:password@localhost:3306/database_name

- Set

-

PostgreSQL: Advanced open-source relational database

- Set

DB_BACKEND=postgresql(orpostgresorpgsql) - Required: Set

DATABASE_URLto the PostgreSQL connection URI - Example:

DATABASE_URL=postgresql://username:password@localhost:5432/database_name

- Set

Important: Changing database-related environment variables after your initial setup may cause data access issues or corruption. It’s best to decide on these settings before your first initialization and maintain them throughout the lifecycle of your installation.

Docker Installation (Recommended)

Using Docker is the simplest and most reliable way to deploy Kinesis API.

Prerequisites

- Install Docker on your system

- Ensure you have permissions to create and manage Docker containers

Installation Steps

- Create necessary directories for persistent storage:

mkdir -p data/ public/ translations/

- Create a

.envfile with your configuration:

echo "TMP_PASSWORD=yourSecurePassword" > .env

echo "API_URL=http://your-domain-or-ip:8080" >> .env

Replace yourSecurePassword with a strong password and your-domain-or-ip with your server’s domain or IP address.

-

Add any additional configuration options from the Configuration Options section above to your

.envfile. -

Run the Docker container:

docker run --name kinesis-api \

-v $(pwd)/.env:/app/.env \

-v $(pwd)/data:/app/data \

-v $(pwd)/public:/app/public \

-v $(pwd)/translations:/app/translations \

-p 8080:8080 -d \

--restart unless-stopped \

edgeking8100/kinesis-api:latest

This command:

- Names the container

kinesis-api - Mounts your local

.envfile and data/public/translations directories - Exposes port 8080

- Runs in detached mode (

-d) - Configures automatic restart

- Uses the latest Kinesis API image

Using a Specific Version

If you need a specific version of Kinesis API, replace latest with a version number:

docker run --name kinesis-api \

-v $(pwd)/.env:/app/.env \

-v $(pwd)/data:/app/data \

-v $(pwd)/public:/app/public \

-v $(pwd)/translations:/app/translations \

-p 8080:8080 -d \

--restart unless-stopped \

edgeking8100/kinesis-api:0.26.0

Available Registries

Kinesis API images are available from multiple registries:

| Registry | Image |

|---|---|

| Docker Hub | docker.io/edgeking8100/kinesis-api:latest |

| Docker Hub | docker.io/edgeking8100/kinesis-api:<version> |

| Gitea Registry | gitea.konnect.dev/rust/kinesis-api:latest |

| Gitea Registry | gitea.konnect.dev/rust/kinesis-api:<version> |

Rust Installation (For Development)

If you’re a developer who wants to build from source or contribute to Kinesis API, you can install using Rust.

Prerequisites

- Install Rust (version 1.86 or newer)

- Install development tools for your platform:

- Linux:

build-essentialpackage or equivalent - macOS: Xcode Command Line Tools

- Windows: Microsoft Visual C++ Build Tools

- Linux:

Installation Steps

- Clone the repository:

git clone https://gitea.konnect.dev/rust/kinesis-api.git

cd kinesis-api/

- Create and configure the environment file:

cp .env.template .env

-

Edit the

.envfile to set at minimum:TMP_PASSWORDwith a secure valueAPI_URLwith your server address (e.g., “http://localhost:8080”)

-

Build and run the application:

cargo run --bin kinesis-api

For a production build:

cargo build --release --bin kinesis-api

./target/release/kinesis-api

Post-Installation Steps

After installation, you need to initialize Kinesis API:

-

Access the initialization endpoint:

- Make a GET request to

<your-api-url>/init?code=code, or - Navigate to the web interface at

<your-api-url>/web

- Make a GET request to

-

This creates a root user with:

- Username:

root - Password:

Test123*

- Username:

-

Important: Change the default password immediately after first login

For more details on initialization, see the Initialization Guide.

Verifying the Installation

To confirm Kinesis API is running correctly:

- Open your browser and navigate to

<your-api-url>/web - You should see the Kinesis API login page

- Try logging in with the default credentials

- Check that you can access the API documentation at

<your-api-url>/scalar

Troubleshooting

Common Issues

-

Container won’t start:

- Check Docker logs:

docker logs kinesis-api - Ensure ports aren’t already in use

- Verify directory permissions

- Check Docker logs:

-

Can’t access the web interface:

- Confirm the container is running:

docker ps - Check your firewall settings

- Verify the URL and port configuration

- Confirm the container is running:

-

Database connection errors:

- Check the data directory permissions

- Verify your DB configuration variables

Getting Help

If you encounter issues not covered here:

- Check our FAQ page

- Contact support at support@kinesis.world

- Submit a detailed bug report through our issue tracker

Next Steps

After installation, proceed to:

Initialization

After installing Kinesis API, you need to initialize the system before you can start using it. This one-time process creates the necessary database structures, default user, and configuration settings.

Initialization Methods

You can initialize Kinesis API using one of two methods:

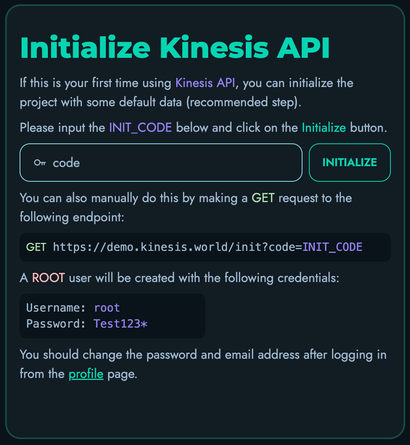

Method 1: Using the Web Interface (Recommended)

-

Open your web browser and navigate to your Kinesis API installation:

http://your-domain-or-ip:8080/web -

You’ll be presented with an initialization screen if the system hasn’t been set up yet.

-

Click the “Initialize” button to begin the process.

-

The system will create the necessary database structures and a default root user.

-

Once initialization is complete, you’ll be redirected to the login page.

Method 2: Using a REST API Request

If you prefer to initialize the system programmatically or via command line, you can use a REST API request:

curl "http://your-domain-or-ip:8080/init?code=code"

Or using any HTTP client like wget:

wget -qO - "http://your-domain-or-ip:8080/init?code=code"

A successful initialization will return a JSON response indicating that the system has been set up.

Default Root User

After initialization, a default root user is created with the following credentials:

- Username:

root - Password:

Test123*

⚠️ IMPORTANT SECURITY NOTICE: You should change the default password immediately after your first login to prevent unauthorized access to your system.

What Gets Initialized

During initialization, Kinesis API sets up:

- Database tables and their structures

- Encryption key for securing sensitive data

- System constraints for data validation

- Default root user account

- Core configuration settings

- Initial projects structure

- Collection templates

- Media storage system

These components form the foundation of your Kinesis API installation, creating the necessary structure for you to start building your APIs.

Verifying Initialization

To verify that your system has been properly initialized:

- Try logging in with the default credentials.

- Check that you can access the dashboard.

- Navigate to the Users section to confirm the root user exists.

- Ensure the API documentation is accessible at

/scalar.

Reinitializing the System

In most cases, you should never need to reinitialize your system after the initial setup. Reinitializing will erase all data and reset the system to its default state.

If you absolutely must reinitialize (for example, during development or testing):

-

Stop the Kinesis API service:

docker stop kinesis-api -

Remove the data directory:

rm -rf data/ -

Create a fresh data directory:

mkdir -p data/ -

Restart the service:

docker start kinesis-api -

Follow the initialization steps again.

Next Steps

After successfully initializing your Kinesis API instance:

Setup

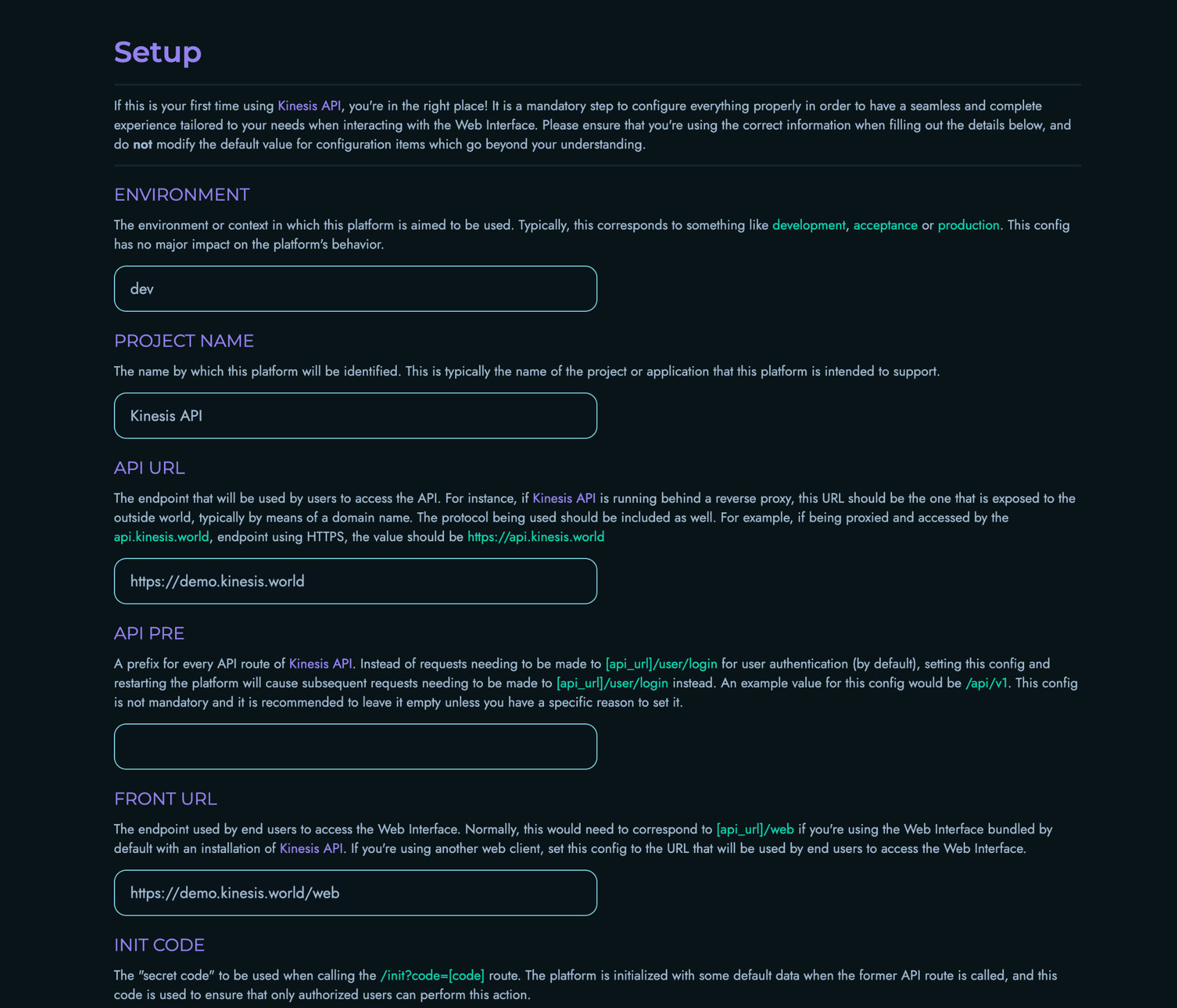

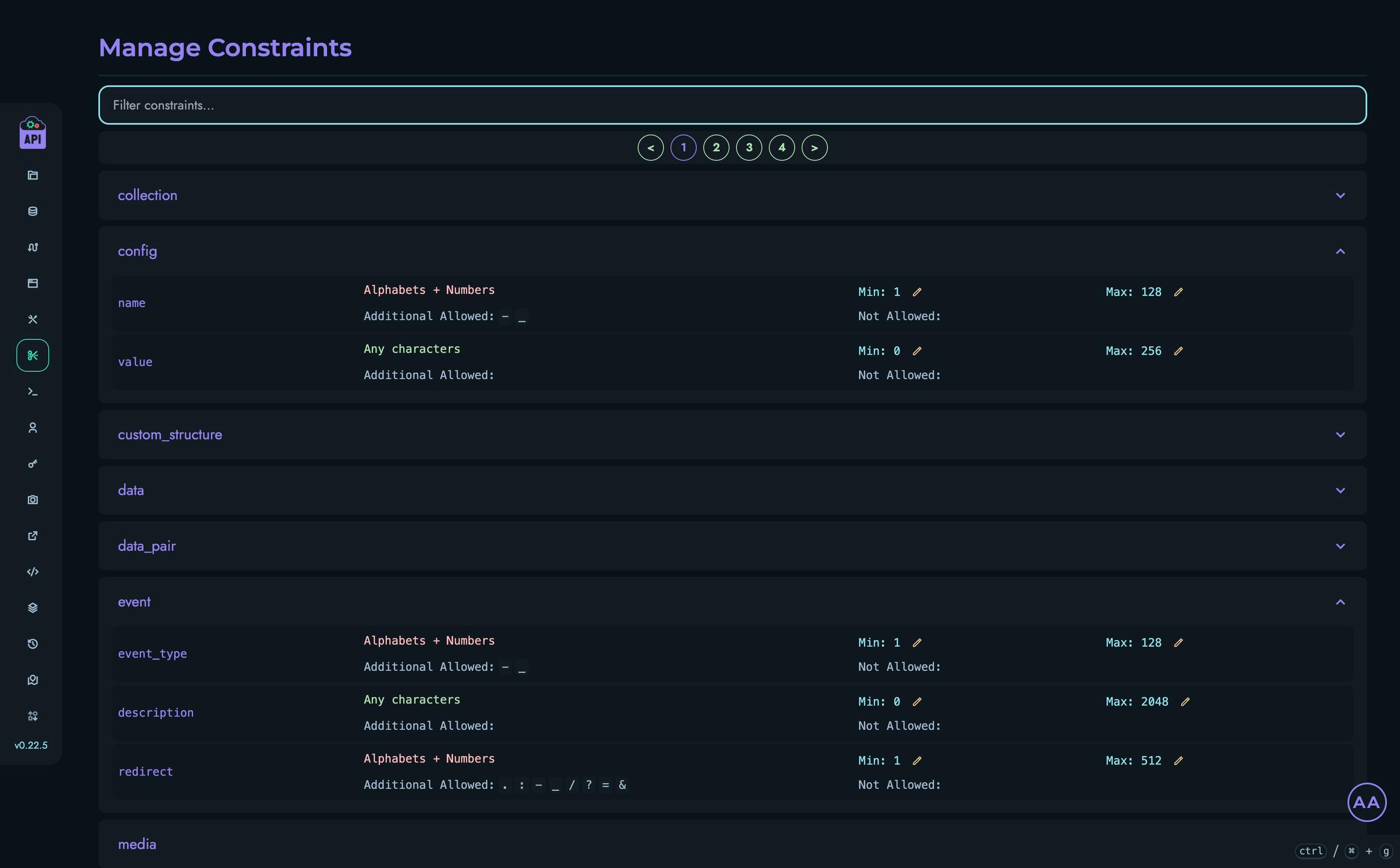

After initializing your Kinesis API instance and logging in for the first time, you will be presented with a setup screen where you can configure various system settings. This page explains each configuration option to help you make informed decisions.

The Setup Process

The setup process is a one-time configuration wizard that appears after the first login with your root account. It allows you to customize essential system settings before you start using Kinesis API.

Note: All settings configured during this initial setup can be modified later from the Configs page accessible at

/web/configs. If you’re unsure about any setting, it’s generally safer to keep the default value.

Configuration Options

Environment

Default value: dev

The environment context in which this platform is being used. Typically corresponds to development stages such as:

dev(Development)staging(Staging/Testing)prod(Production)

This setting has minimal impact on system behavior but helps to identify the instance’s purpose.

Project Name

Default value: Kinesis API

The name by which this platform will be identified. This appears in the user interface, email templates, and other user-facing areas. You can customize this to match your organization or project name.

API URL

Default value: Detected from your installation

The base URL where your API endpoints are accessible. This should include the protocol (http/https) and domain name or IP address.

Example: https://api.example.com

If your Kinesis API instance is running behind a reverse proxy, this should be the publicly accessible URL, not the internal address.

API Prefix (API PRE)

Default value: Empty

An optional prefix for all API routes. When set, all API endpoints will be prefixed with this value.

Example: Setting this to /api/v1 would change endpoint paths from:

/user/loginto/api/v1/user/login

Leave this empty unless you have a specific need for URL path prefixing, such as API versioning or integration with other systems.

Front URL

Default value: [API_URL]/web

The URL where users will access the web interface. If you’re using the default web interface, this should be your API URL followed by /web.

Example: https://api.example.com/web

If you’re using a custom frontend or have deployed the web interface separately, specify its URL here.

Init Code

Default value: code

The security code required when calling the /init endpoint to initialize the system. Changing this provides a small layer of security against unauthorized initialization.

Recommendation: Change this from the default value to something unique, especially in production environments.

JWT Expire

Default value: 3600 (1 hour)

The lifetime of JWT authentication tokens in seconds. After this period, users will need to log in again.

Common values:

3600(1 hour)86400(24 hours)604800(1 week)

Shorter times enhance security but require more frequent logins. Longer times improve user convenience but may increase security risks if tokens are compromised.

Upload Size

Default value: 2048 (2 MB)

The maximum allowed size for file uploads in kilobytes. Adjust based on your expected usage and server capacity.

Examples:

1024(1 MB)5120(5 MB)10240(10 MB)

Setting this too high could lead to server resource issues if users upload very large files.

CORS Whitelist

Default value: Empty

A comma-separated list of domains that are allowed to make cross-origin requests to your API.

Examples:

example.com,api.example.com(Allow specific domains)*(Allow all domains - not recommended for production)

For security reasons, only whitelist domains that legitimately need to access your API.

SMTP Settings

These settings configure the email sending capabilities of Kinesis API, which are required for features like user registration and password reset.

SMTP Username

Default value: Empty

The username for authenticating with your SMTP server. This is typically your email address.

Example: notifications@example.com

SMTP From Username

Default value: Same as SMTP Username

The email address that will appear in the “From” field of emails sent by the system. If left empty, the SMTP Username will be used.

Example: no-reply@example.com

SMTP Password

Default value: Empty

The password for authenticating with your SMTP server.

SMTP Host

Default value: Empty

The hostname or IP address of your SMTP server.

Examples:

smtp.gmail.com(for Gmail)smtp.office365.com(for Office 365)smtp.mailgun.org(for Mailgun)

SMTP Port

Default value: 587

The port number used to connect to your SMTP server.

Common values:

25(Standard SMTP - often blocked by ISPs)465(SMTP over SSL)587(SMTP with STARTTLS - recommended)

SMTP Login Mechanism

Default value: PLAIN

The authentication mechanism used when connecting to the SMTP server.

Options:

PLAIN(Standard plain text authentication)LOGIN(Alternative plain text authentication)XOAUTH2(OAuth 2.0-based authentication)

Most SMTP servers use PLAIN authentication. Only change this if your email provider specifically requires a different mechanism.

SMTP StartTLS

Default value: true (Checked)

Whether to use STARTTLS when connecting to the SMTP server. This upgrades an insecure connection to a secure one.

Most modern SMTP servers require this to be enabled for security reasons.

Testing SMTP Settings

Before completing the setup, you can test your SMTP configuration by clicking the “Check SMTP Credentials” button. This will attempt to connect to your SMTP server and verify that your credentials are correct.

Completing Setup

After configuring all settings, review your choices carefully before clicking “Complete Setup”. The system will save your configuration and redirect you to the login page.

Important: It’s recommended to restart your Kinesis API instance after completing the setup to ensure all settings take effect properly.

Skipping Setup

While it’s possible to skip the setup process, this is not recommended as it may leave your system with incomplete or incorrect configuration. Only skip setup if you’re an advanced user who plans to configure the system manually.

Modifying Settings Later

All settings configured during initial setup can be modified later from the Configs page at /web/configs. This allows you to make adjustments as your needs change without having to reinstall the system.

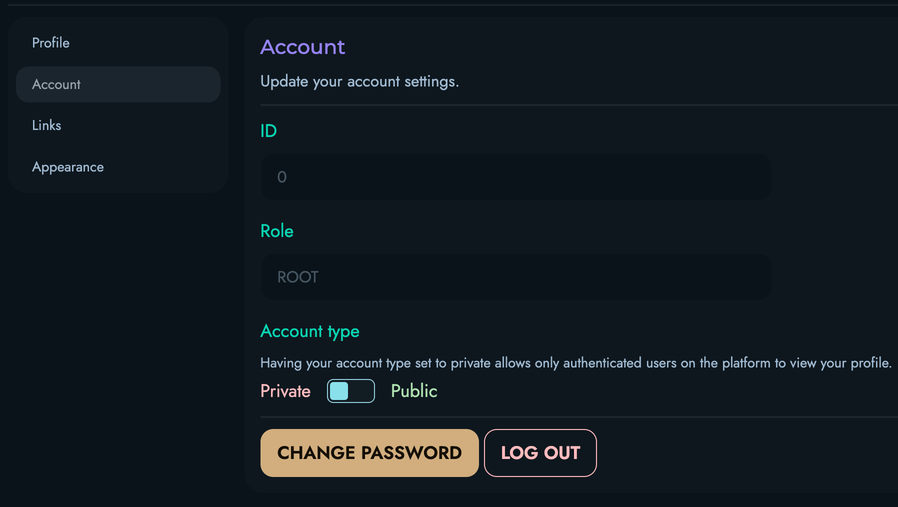

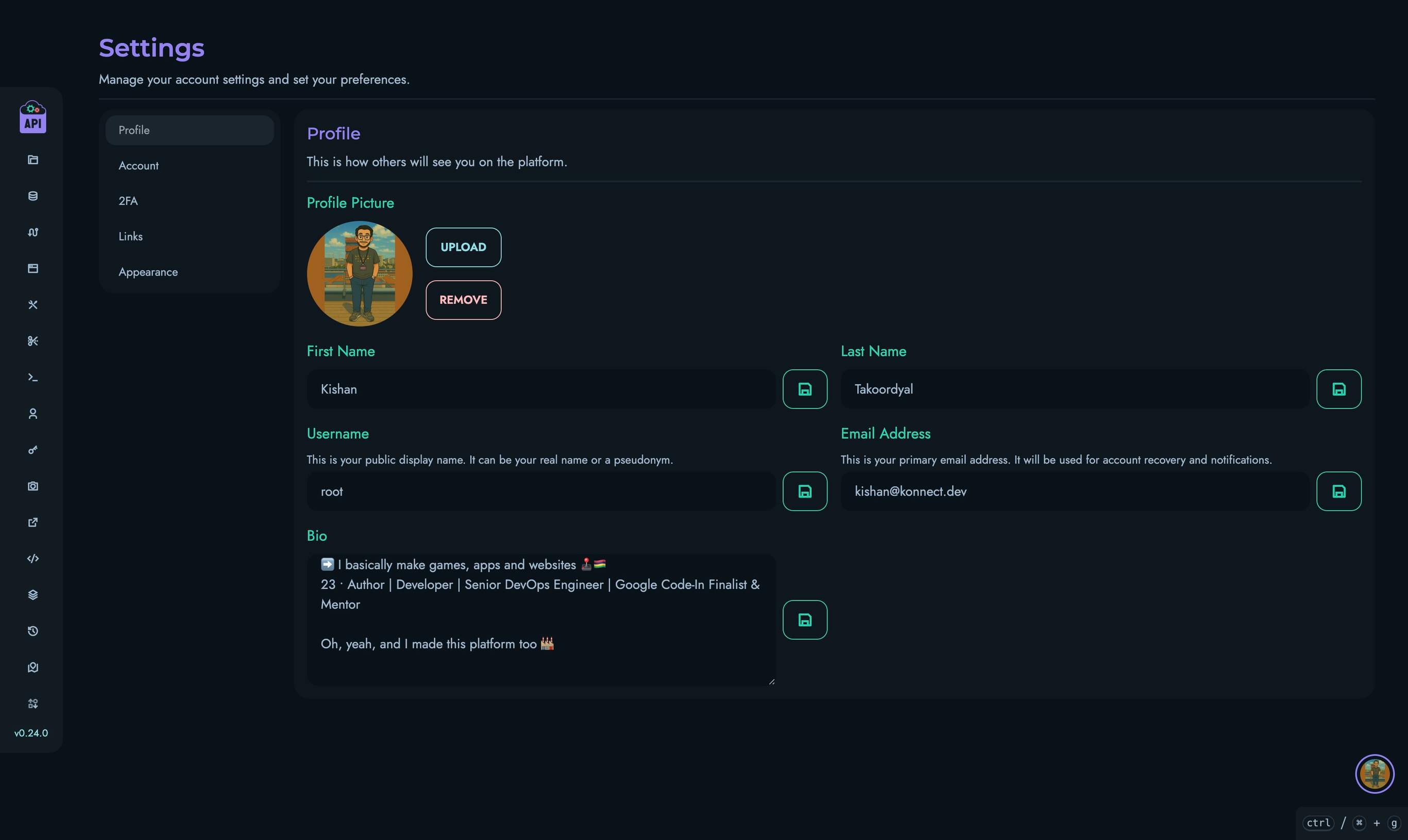

Changing the Default Password

To change the default password:

- Log in to the web interface using the default credentials.

- Navigate to User Settings (click on your username in the bottom-right corner then “Edit profile”).

- Select “Change Password”.

- Enter your new secure password twice.

- Click “Save” to confirm updating the password.

Next Steps

After completing the setup, you’ll be ready to start using Kinesis API. Consider exploring these areas next:

- Building a Simple Counter App

- Managing users and permissions

- Configuring projects

- Setting up collections and structures

Licenses

Kinesis API uses a license-based model to provide you with access to its features and capabilities. Whether you’re self-hosting or using our managed platform, all deployments require a valid license to operate beyond the free Community tier.

What is a License?

A license in Kinesis API is a digital authorization that:

- Grants Access: Enables you to use Kinesis API and its features according to your selected plan

- Defines Limits: Specifies the number of instances you can run and users you can have

- Controls Features: Determines which capabilities and components are available to you

- Ensures Compliance: Validates that your usage aligns with your subscription level

Why Licenses Matter

The licensing system serves several important purposes:

For Users

- Predictable Costs: Know exactly what you’re paying for with clear pricing tiers

- Scalability: Easily upgrade or downgrade as your needs change

- Feature Access: Get the features you need without paying for what you don’t

- Support Levels: Higher tiers include better support and SLAs

For the Platform

- Sustainable Development: License fees support ongoing development and improvements

- Resource Management: Helps manage server resources on managed platforms

- Quality Assurance: Ensures users receive proper support and maintenance

License Types

Kinesis API offers several license tiers to match different use cases:

Community (Free)

Perfect for learning, personal projects, and small applications:

- Self-hosting only

- Basic features with limited API routes

- Community support through forums

- No cost, ideal for getting started

Essential ($99/month)

For growing applications and small teams:

- Self-hosting or managed platform

- Unlimited API routes with 5M monthly requests

- Email support with 48-hour response time

- Standard feature set including webhooks

Professional ($499/month)

For professional teams and larger applications:

- All Essential features

- 25M monthly requests

- Advanced features including SSO

- Priority support with 12-hour response time

Enterprise ($50/user/month)

For large organizations with specific needs:

- Unlimited everything

- Custom block development

- Dedicated support representative with SLA

- On-premise deployment assistance

How Licensing Works

License Activation Flow

- Purchase: Select a plan and complete payment through the License Server

- Receive Key: Get a unique license key for your purchase

- Activate: Enter the license key in your Kinesis API instance

- Validation: The system validates your license with the License Server

- Feature Access: Your instance unlocks features based on your plan

License Components

A complete license consists of:

- License Key: A unique identifier for your purchase

- Activation: The connection between your license and specific instance(s)

- Plan: The tier of service you’ve purchased

- Features: The capabilities included in your plan

Self-Hosted vs. Managed

The licensing model works differently depending on your deployment:

Self-Hosted Deployments

- Database and media storage limits don’t apply

- Use as much as your hardware allows

- Still require a valid license for feature access

- Full control over your infrastructure

Managed Platform

- Storage limits apply based on your plan

- Infrastructure managed by us

- Automatic backups and updates

- License included with subscription

Getting Started with Licenses

To begin using Kinesis API with a license:

- Access the License Server: Create an account and explore available plans

- Purchase a License: Select your plan and complete payment

- Register Your License: Activate your license in your Kinesis API instance

Current Phase: Free Development Access

Important: Kinesis API is currently in active development. During this phase, all features are available completely free. When we launch our commercial version, existing users will receive special early-adopter benefits. This is a great time to start exploring the platform and building your applications!

License Validation and Compliance

Automatic Validation

Kinesis API automatically validates your license:

- Check-In: Every 5 minutes, your instance checks in with the License Server

- Validation: Every 15 minutes, your license is fully validated

- Feature Control: Features are enabled or disabled based on validation results

What Happens If…

License Expires

- You’ll receive email notifications before expiration

- Grace period allows for renewal without interruption

- After grace period, access is restricted to Community features

Payment Fails

- Automatic retries for temporary payment issues

- Email notifications about payment problems

- Suspension if payment isn’t resolved

License is Revoked

- Immediate restriction to Community features

- Data remains intact and accessible

- Can purchase new license to restore access

Support and Assistance

If you have questions about licensing:

- General Questions: Review this documentation section

- Purchase Help: Contact our sales team through the License Server

- Technical Issues: Log a support ticket

- License Problems: Check the License management page in your instance

Next Steps

Ready to get started with licensing?

- Access the License Server to create an account

- Explore purchasing options to find the right plan

- Learn to activate your license in your Kinesis API instance

For detailed pricing information and plan comparisons, visit our Pricing Page.

Accessing the License Server

The Kinesis API License Server is a separate platform where you manage your licenses, view available plans, and handle billing. This guide will walk you through accessing and using the License Server.

Overview

The License Server provides:

- Account Management: Create and manage your license account

- Plan Browsing: View available plans and their features

- License Management: Track your active licenses and their status

- Billing Control: Manage payments and renewal settings

- Support Access: Get help with licensing issues

Getting Started

Accessing the License Server

- Navigate to https://license.kinesis.world in your web browser

- You’ll see the License Server homepage with options to log in or register

Note: The License Server uses a separate account from your Kinesis API instance. You’ll need to create a new account even if you already have a Kinesis API user account.

Creating Your Account

To register for a License Server account:

- Fill in the registration form with:

- Full Name: Your first and last legal names

- Email Address: Your business or personal email (will be used for license communications)

- Click the “Submit” button at the bottom of the registration section

- Click “Register” to submit your information

First-Time Login

After registering:

- Check your email inbox for a message from the License Server

- The email will contain:

- Your username (typically your email address)

- A temporary password

- Instructions for first login

- Return to https://license.kinesis.world

- Enter your credentials (email address and password) on the login form

- Click the “Submit” button at the bottom of the login section

Security Tip: Change your password and choose a strong password that includes uppercase and lowercase letters, numbers, and special characters.

Setting Up Your Profile

After your first login, it’s important to complete your profile information:

Accessing Profile Settings

- Click on your profile icon or username in the top-right corner

- Select “Edit Profile” from the dropdown menu

Completing Your Profile

Fill in all relevant information:

Personal Information

- Full Name: Your complete legal name

- Email Address: Primary contact email

Business Information

- Company Name: Your organization’s legal name

- Company Address: Complete business address including:

- Street address

- City

- State/Province

- ZIP/Postal code

- Country

Saving Your Profile

- Review all entered information for accuracy

- Click the various “Save” buttons where applicable

- You’ll receive a confirmation message when your profile is updated

Important: Accurate profile information is essential for:

- Proper license activation

- Receiving invoices and receipts

- Tax compliance

- Support communications

Exploring Available Plans

The License Server allows you to browse all available plans before making a purchase:

Viewing Plans

- From the main dashboard, click on “Plans” or “Pricing” in the navigation menu

- You’ll see a comparison of all available plans:

- Community: Free tier with basic features

- Essential: Entry-level paid plan

- Professional: Advanced features for teams

- Enterprise: Custom solutions for large organizations

Plan Details

For each plan, you can view:

Features

- API creation limits

- Monthly request quotas

- Storage allocations (for managed hosting)

- Available blocks and components

- Support level details

Pricing Information

- Monthly pricing

- Annual pricing (if applicable)

- Per-user costs (for Enterprise)

- Any setup fees or minimums

Plan Documentation

Each plan section includes:

- Feature List: Detailed breakdown of included features

- Limitations: Any restrictions or quotas

Navigation

The main dashboard page includes:

- Profile: Account settings

- Plans: Browse available plans

- Licenses: Manage existing licenses

- Activations: View existing license activations

Getting Help

Support Resources

Access help through:

Documentation

- Browse the knowledge base

- Read getting started guides

- View FAQs

Support Tickets

Use the Kinesis API support system.

Security Best Practices

Account Security

To keep your license account secure:

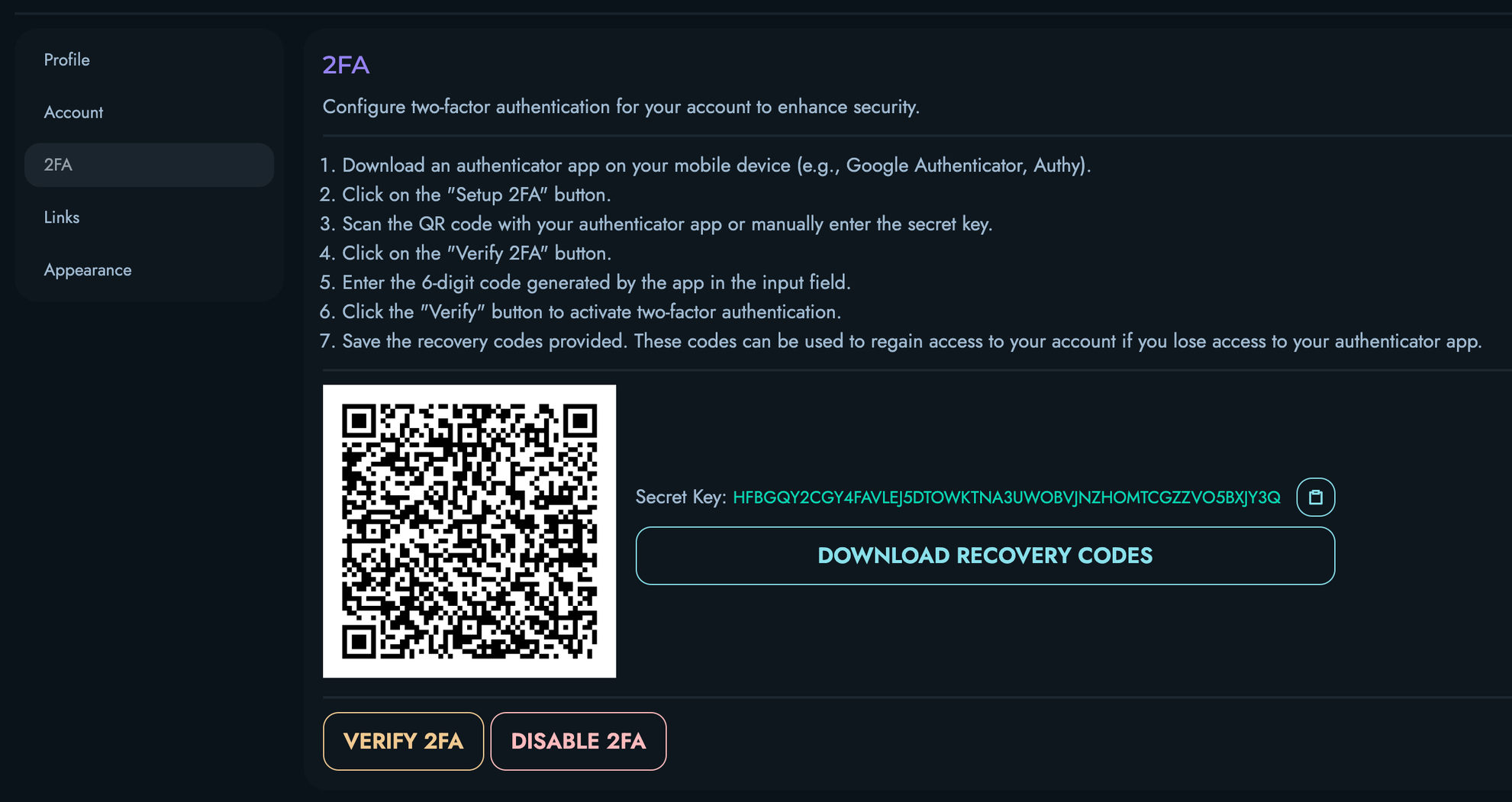

- Use Strong Passwords: Choose unique, complex passwords

- Enable Two-Factor Authentication: If available, activate 2FA

- Regularly Review Activity: Check for unauthorized access

- Keep Profile Updated: Maintain current contact information

- Secure Email Access: Protect the email account associated with your license

License Key Protection

- Never share your license keys publicly

- Store license keys securely

- Rotate keys if compromised

- Report suspicious activity immediately

Next Steps

Now that you have access to the License Server and have set up your profile:

- Explore purchasing a license to get started with a paid plan

- Learn how to activate your license in your Kinesis API instance

- Review the main licensing overview for more information

If you encounter any issues accessing the License Server or setting up your account, please contact support through the Kinesis API ticketing system.

Purchasing Licenses

This guide walks you through the process of purchasing a Kinesis API license through the License Server. Whether you’re upgrading from the Community plan or purchasing your first license, this documentation will help you complete your purchase successfully.

Before You Purchase

Prerequisites

Before purchasing a license, ensure you have:

- Created a License Server Account: See Accessing the License Server

- Completed Your Profile: Updated all business and billing information

- Chosen Your Plan: Reviewed available plans and selected the right tier

- Determined Your Needs: Calculated required instances and users

Planning Your Purchase

Consider these factors when planning your license purchase:

Number of Instances

- Instance: A single installation of Kinesis API

- Self-Hosted: Each server running Kinesis API counts as one instance

- Managed: Each environment (production, staging, etc.) counts as one instance

- Development: Community licenses work for development environments

Number of Users

- Per Instance: User limits apply to each instance separately

- User: Anyone with login credentials to access the instance

- API-Only Users: May not count depending on your use case

- Service Accounts: Typically count as users

Billing Period

- Monthly: Pay month-to-month with flexibility to cancel

- Yearly: Save money with annual commitment (typically 2 months free)

The Purchase Process

Step 1: Select Your Plan

- Log in to the License Server

- Navigate to “Plans” or “Purchase License”

- Review available plans:

- Essential ($99/month): For growing applications

- Professional ($499/month): For professional teams

- Enterprise ($50/user/month): For large organizations

Step 2: Configure Your License

On the license configuration page, click on the “Purchase a new License” button and specify:

Instance Count

- Enter the number of instances you need

- The system will calculate the total based on:

- Plan base price

- Number of instances

- Billing period selection

User Count

- Enter the number of users per instance

- For Enterprise plans, this determines your per-user pricing

- For other plans, ensure you stay within plan limits

Important: User counts should represent your maximum expected users per instance. Exceeding limits may require an upgrade.

License Name

- Give your license a descriptive name

- Examples:

- “Production Environment”

- “Company Name - Main API”

- “Project Name License”

Step 3: Apply Coupons (Optional)

If you have a promotional or discount coupon:

- Enter your coupon code

- Verify the discount is reflected in the total

Coupon types may include:

- Percentage Off: Reduces price by a percentage (e.g., 20% off)

- Fixed Amount: Reduces price by a set amount (e.g., $50 off)

- Free Trial Extension: Adds free months to your subscription

- Feature Unlock: Grants access to specific features

Step 4: Choose Billing Period

Select your preferred billing cycle:

Monthly Billing

- Pros:

- Lower upfront cost

- Month-to-month flexibility

- Easy to cancel or change

- Cons:

- Higher overall yearly cost

- Monthly payment processing

Yearly Billing

- Pros:

- Significant savings (typically 2 months free)

- Single annual payment

- Locked-in pricing for the year

- Cons:

- Higher upfront cost

- Annual commitment

- Refund policies may apply

Step 5: Review Your Order

Before completing your purchase, review:

- Plan Name: Confirm correct plan selected

- Instance Count: Verify number of instances

- User Count: Check user allocation

- Billing Period: Confirm monthly or yearly

- Subtotal: Base price calculation

- Discounts: Applied coupons or promotions

- Total Amount: Final price to be charged

Step 6: Complete Payment

Supported Payment Methods

The License Server doesn’t currently support payment methods but should feature various ones in the future:

- Credit Cards: Visa, MasterCard, American Express

- Debit Cards: Major providers accepted

- Bank Transfer: For Enterprise customers (contact sales)

- PayPal: Available in most regions

- Other Methods: May vary by region

Payment Information

- Select your payment method

- Enter required details:

- Card number

- Expiration date

- CVV/Security code

- Billing address

- Review the payment terms

- Click “Complete Purchase” or “Pay Now”

Payment Confirmation

After successful payment:

- You’ll see a confirmation screen with:

- Order number

- Transaction ID

- License details

- Check your email for:

- Payment receipt

- License key

- Activation instructions

After Purchase

License Activation

Once your payment is confirmed:

- Administrator Review: An administrator will validate your payment

- License Activation: Your license status will change to ACTIVE

- Email Notification: You’ll receive confirmation when activated

- License Key: Available in your License Server dashboard

Timeline: License activation typically occurs within 24 business hours. For urgent activations, contact support@kinesis.world.

Accessing Your License Key

To retrieve your license key:

- Log in to the License Server

- Navigate to “Manage Licenses”

- Find your purchased license

- Copy your License Key (format:

XXXXXX-XXXXXX-XXXXXX-XXXXXX)

Next Steps

After receiving your license key:

- Activate it in your Kinesis API instance

- Verify all features are accessible

- Configure your instance according to your plan

- Review the usage documentation to get started

Managing Your License

Viewing License Details

In your License Server dashboard, you can view:

- License Status: Active, Suspended, Expired, Revoked

- Plan Details: Current plan and features

- Instance/User Counts: Allocated resources

- Billing Information: Next payment date and amount

License Statuses

INACTIVE

- Payment received but not yet validated

- Awaiting administrator approval

- Temporary status during processing

ACTIVE

- License is valid and working

- All features accessible

- Payment current

SUSPENDED

- Payment failure or overdue

- Temporary restriction

- Can be reactivated by updating payment

REVOKED

- Permanent license termination

- Violation of terms or non-payment

- Requires new purchase to restore access

Renewal and Changes

Automatic Renewal

For active licenses:

- Monthly: Automatically renews on the same date each month

- Yearly: Renews on anniversary of purchase date

- Payment Method: Uses saved payment information

- Notifications: Email reminders sent before renewal

Manual Renewal

If automatic renewal fails:

- You’ll receive email notification

- Log in to License Server

- Navigate to your license details

- Click “Renew Now”

- Update payment information if needed

- Complete renewal payment

Modifying Your License

Upgrading

To upgrade your plan:

- Go to license details in License Server

- Click “Upgrade Plan”

- Select new plan tier

- Pay difference for remaining billing period

- Changes apply immediately

Downgrading

To downgrade your plan:

- Go to license details

- Click “Change Plan” or “Downgrade”

- Select lower plan tier

- Changes apply at next billing cycle

- Credit may be applied to next bill

Changing Instance/User Counts

To modify allocations:

- Contact support via the ticketing system

- Specify required changes

- Additional fees may apply

- Changes processed by administrator

Important: Changes to licenses after purchase may incur additional fees based on the modifications requested, and should be requested via a support ticket.

Payment Issues

Failed Payments

If a payment fails:

- Email Notification: Sent to registered email

- Grace Period: Typically 7 days to update payment

- Retry Attempts: Automatic retries every 3 days

- License Suspension: After grace period expires

Updating Payment Method

To update your payment information:

- Log in to License Server

- Go to “Billing” or “Payment Methods”

- Add new payment method or update existing

- Set as default for renewals

- Manually trigger retry if needed

Requesting Refunds

For refund requests:

- Review refund policy in terms of service

- Contact support within applicable window

- Provide reason for refund request

- Administrator will review and respond

Getting Help with Purchases

Pre-Purchase Questions

- Review the pricing page

- Check the main licensing documentation

- Contact sales through License Server

Purchase Issues

- Log a support ticket

- Include order/transaction number

- Describe the issue clearly

- Support team typically responds within 48 hours

Billing Questions

- Check License Server billing section

- Review payment history

- Contact billing support through the ticketing system

Next Steps

After purchasing your license:

- Wait for administrator activation (usually within 24 hours)

- Check email for license key and confirmation

- Learn how to activate your license in Kinesis API

- Start building with your new features!

For questions about the purchasing process or your order, please contact support.

Registering Your License

After purchasing a license from the License Server, you need to activate it in your Kinesis API instance. This guide explains how to register your license and understand how the activation system works.

Overview

License registration connects your Kinesis API instance to your purchased license, enabling features and capabilities based on your plan. The process involves:

- Entering your license key in Kinesis API

- Automatic validation with the License Server

- Feature activation based on your plan

- Ongoing validation to maintain access

Prerequisites

Before registering your license:

- ✅ You must have ROOT user permissions

- ✅ Your license must be ACTIVE in the License Server

- ✅ You have your license key from the License Server

- ✅ Your Kinesis API instance can connect to the internet

Accessing License Management

Via Web Interface

- Log in to your Kinesis API instance with a ROOT account

- Navigate to

/web/licensein your browser, or - Click “License” in the sidebar menu (visible only to ROOT users)

License Page Components

The license management page displays:

- License Key Input: Field for entering your license key

- Show/Hide Key Button: Toggle visibility of your license key

- Submit Button: Activate your license

- Validation Button: Manually validate current license

- Deactivation Button: Remove current license activation

- License Details: Information about your active license (after activation)

Activating Your License

Step 1: Enter Your License Key

- Locate the license key input field on the license page

- Click the eye icon to show the input field (if hidden)

- Paste your license key from the License Server

- Format:

XXXXXX-XXXXXX-XXXXXX-XXXXXX - Ensure no extra spaces or characters

- Format:

Step 2: Submit for Activation

- Click the submit button (save icon) next to the input field

- The system will display a loading indicator

- Wait for the activation process to complete

Step 3: Activation Process

When you submit your license key, Kinesis API:

- Validates Format: Checks if the key is properly formatted

- Contacts License Server: Sends activation request with:

- Your license key

- Instance ID (unique identifier for your installation)

- Current user count

- Receives Response: License Server returns:

- Activation confirmation

- Plan details

- Feature list

- Authentication token

- Stores Locally: Saves activation information securely

- Enables Features: Unlocks capabilities based on your plan

Step 4: Verify Activation

After successful activation:

- Success Message: Green notification appears confirming activation

- License Details Appear: Panel shows:

- Plan name and type

- License status

- Instance and user limits

- Billing information

- Next renewal date

- Feature Buttons: Validation and deactivation buttons become visible

- License Status Indicator: Bottom-right corner shows “Activated: [Plan Name]”

Manual License Management

Validating Your License

To manually validate your license:

- Click the “Validate License” button

- System performs immediate validation with License Server

- Updates displayed license information

- Shows success or error message

Use Cases:

- After purchasing an upgrade

- If features seem unavailable

- After plan changes

- Troubleshooting license issues

Deactivating Your License

To remove your license activation:

- Click the “Deactivate License” button

- Confirm deactivation in the warning dialog

- System:

- Notifies License Server

- Removes local activation

- Resets to Community features

- Clears license displays

Warnng: Deactivating your license immediately restricts access to paid features. Your data remains intact, but capabilities are limited to the Community tier.

Troubleshooting

Common Activation Issues

“License has already been activated”

Cause: This license key is already in use

Solution:

- Check if you’re trying to activate the same license on multiple instances

- Deactivate the license from the other instance first

- Or purchase an additional license for more instances

“License key not found”

Cause: Invalid or mistyped license key

Solution:

- Copy the license key directly from License Server

- Check for extra spaces or characters

- Verify the license status is ACTIVE in License Server

“License is not active”

Cause: License needs administrator activation or payment issue

Solution:

- Check License Server for license status

- Verify payment was processed

- Wait for administrator activation (up to 24 hours)

- Contact support if delayed

“Failed to connect to License Server”

Cause: Network connectivity issue

Solution:

- Verify your instance has internet access

- Check firewall settings allow outbound HTTPS

- Confirm License Server is accessible (https://license.kinesis.world)

- Check proxy settings if applicable

Validation Issues

“Activation token has expired”

Cause: Authentication token needs refresh

Solution:

- Click “Validate License” to get new token

- System automatically reauthenticates

- If that fails, try deactivating and reactivating

“License has expired”

Cause: Subscription period has ended

Solution:

- Renew license in License Server

- Wait for renewal processing

- Click “Validate License” in Kinesis API

- Features should restore after successful renewal

“User count exceeded”

Cause: More users than your plan allows

Solution:

- Upgrade plan to increase user limit

- Or remove inactive users

- Contact support for temporary increase

License Status Indicators

Monitor your license status through the indicator in the bottom-right corner:

- Green “Activated: [Plan]”: License is active and validated

- Red “License Inactive”: No active license or validation failed

Best Practices

Security

- Protect License Keys: Never share publicly or commit to version control

- ROOT Access Only: Only ROOT users can manage licenses

- Monitor Status: Regularly check license status indicator

- Update Promptly: Renew before expiration to avoid interruption

Reliability

- Stable Internet: Ensure consistent connectivity for validation

- Monitor Check-Ins: Watch for validation errors in logs

- Plan Ahead: Renew licenses before expiration

- Test Upgrades: Verify feature access after plan changes

Compliance

- Stay Within Limits: Monitor user and instance counts

- Timely Renewal: Pay invoices promptly

- Accurate Information: Keep License Server profile updated

- Proper Usage: Follow terms of service for your plan

Next Steps

After successfully activating your license:

- Explore features available in your plan

- Review the usage documentation

- Configure your instance for production use

- Start building your APIs

For issues with license activation or validation, please contact support.

Core Components

Kinesis API is built around a set of core components that work together to provide a complete API development and management solution. Understanding these components is essential for effectively using the platform.

Architecture Overview

At a high level, Kinesis API’s architecture consists of:

- User Management System - Controls access and permissions

- Projects & Collections - Organizes your API resources

- Data Management - Stores and manipulates your application data

- API Routes - Exposes functionality through RESTful endpoints

- Supporting Systems - Provides additional functionality like events, media handling, and more

These components interact through the custom Kinesis DB database system and are exposed via both the web interface and API endpoints.

Key Components

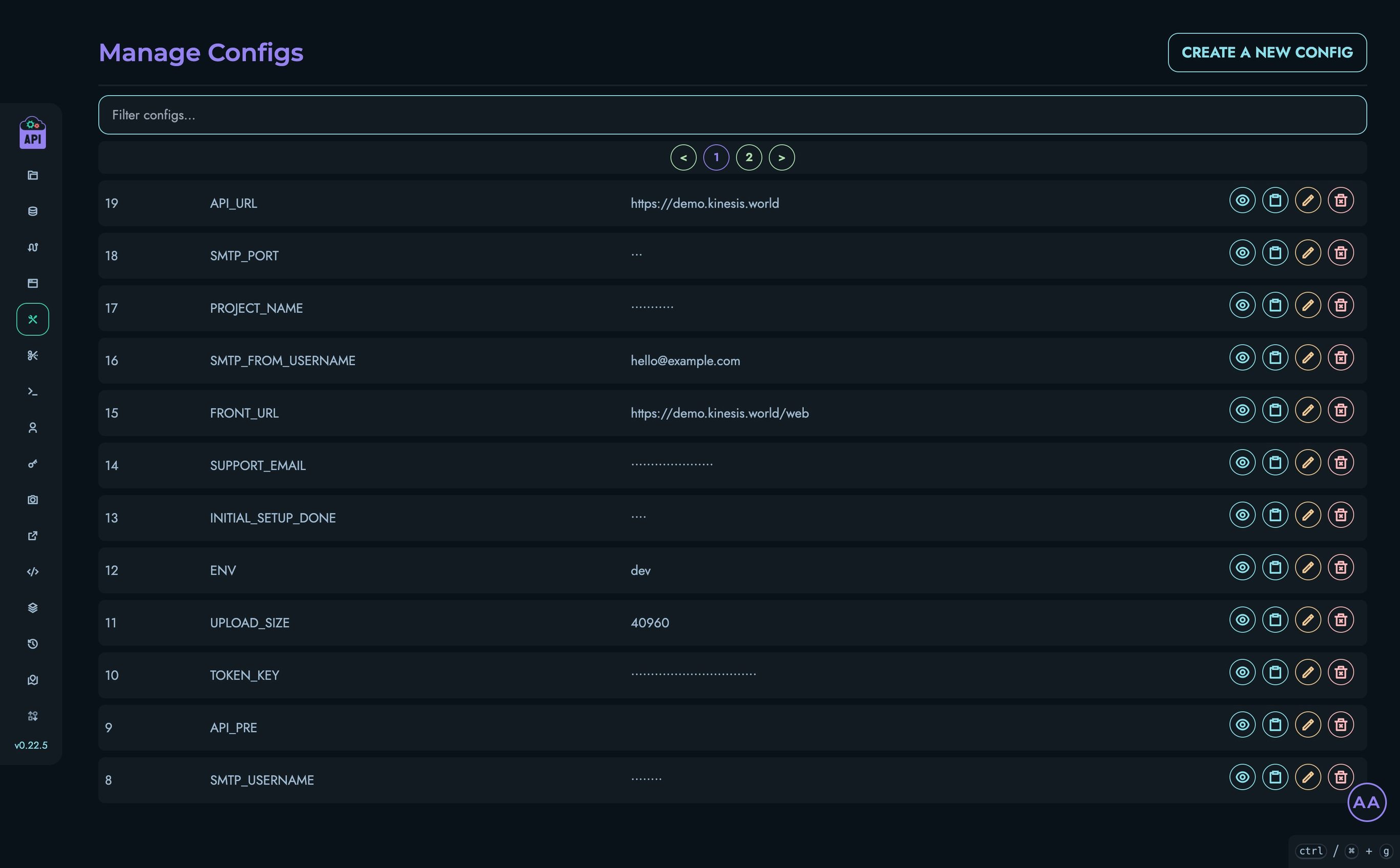

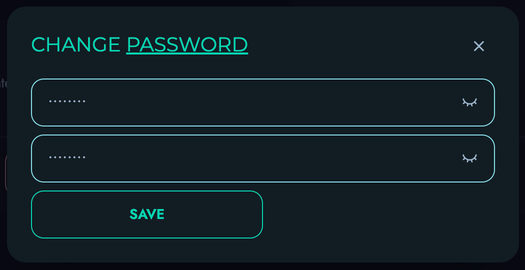

Configs

The configuration system allows you to control how Kinesis API operates. This includes settings for:

- Environment variables

- SMTP settings for email

- Security parameters

- API endpoint behavior

Configs can be set during initial setup and modified later through the admin interface.

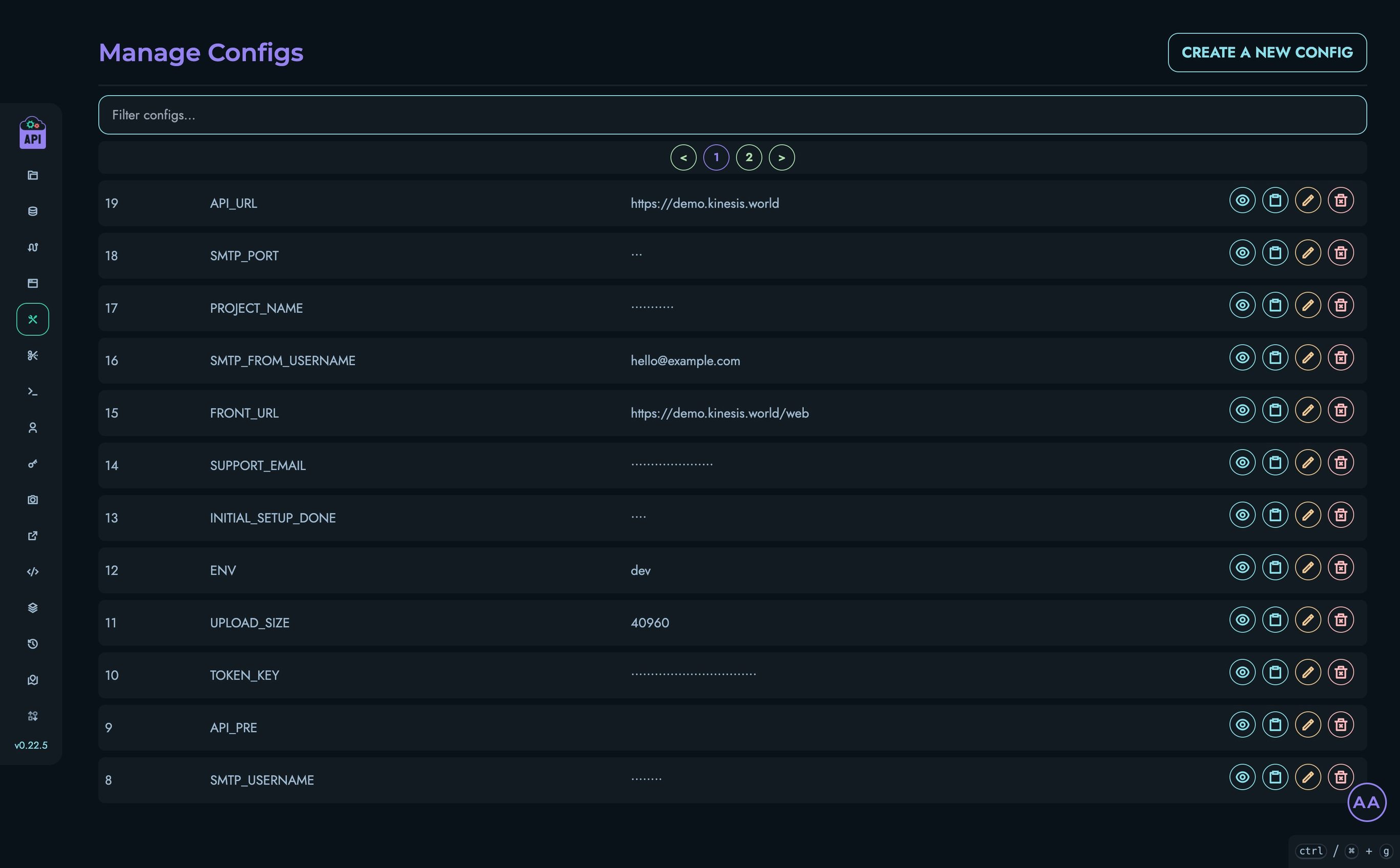

Constraints

Constraints define rules and validations that maintain data integrity across your API. They enforce:

- Data type validations

- Required fields

- Value ranges and patterns

- Relationship integrity

- Business rules

Proper constraint management ensures your API behaves predictably and data remains valid.

Users

The user management system controls access to your Kinesis API instance. It includes:

- User accounts with varying permission levels

- Role-based access control (ROOT, ADMIN, AUTHOR, VIEWER)

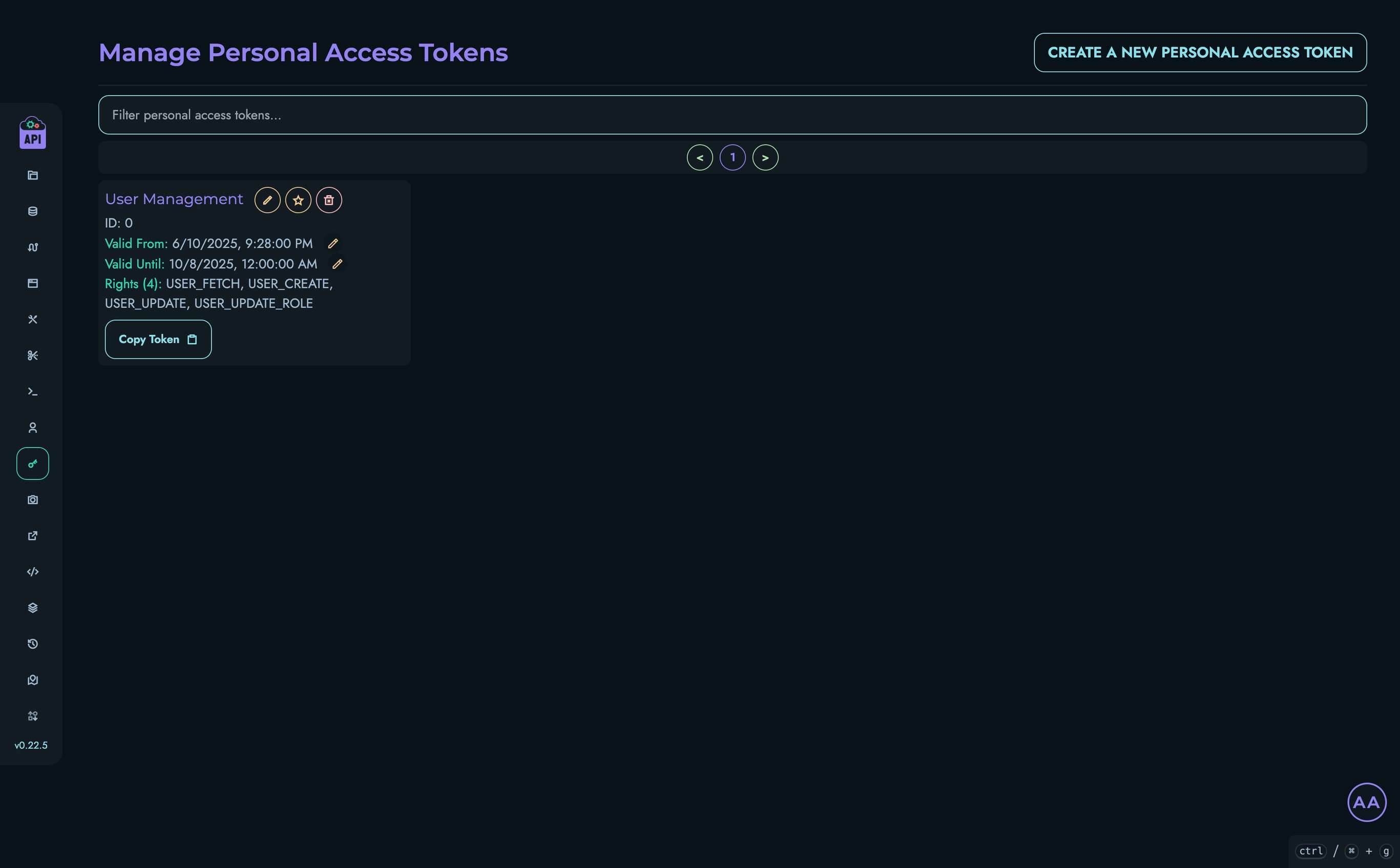

- Personal Access Tokens for API authentication

- User profiles with customizable information

User management is critical for security and collaboration in multi-user environments.

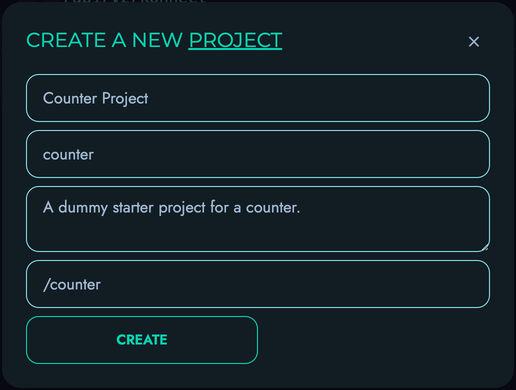

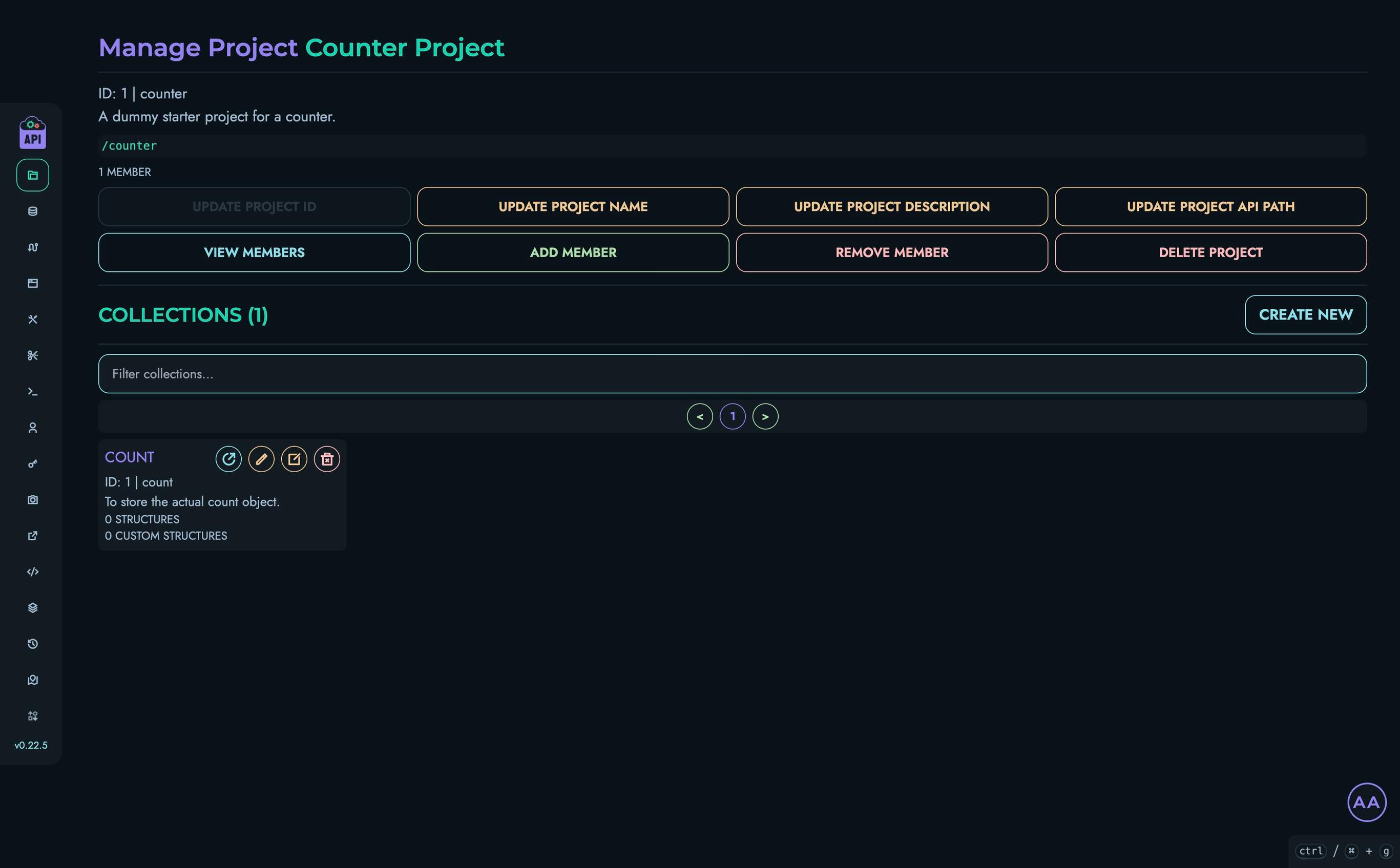

Projects

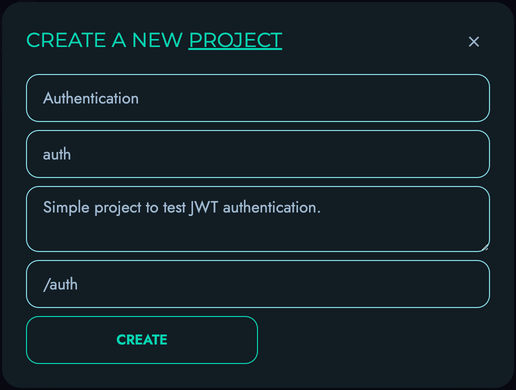

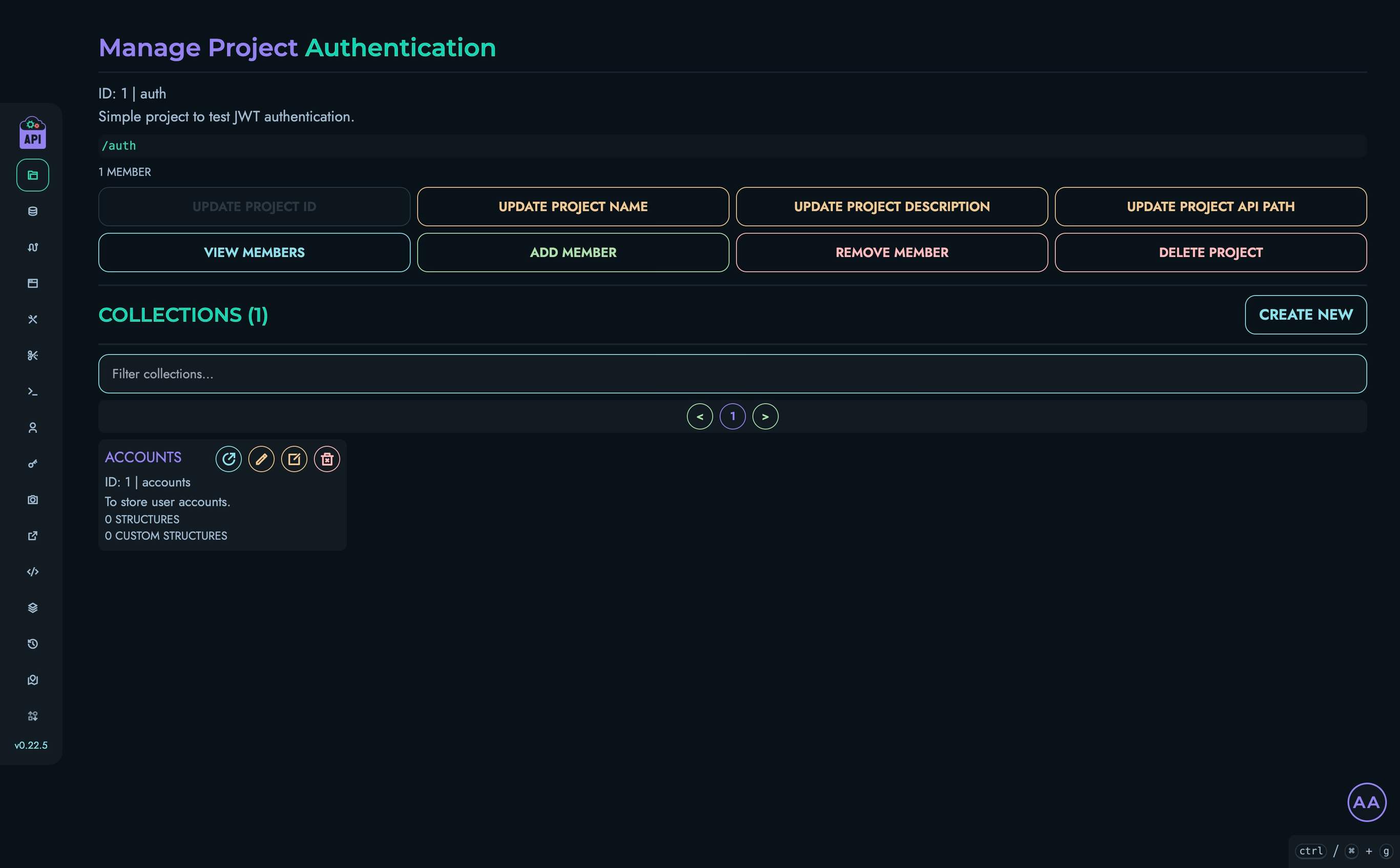

Projects are the top-level organizational units in Kinesis API. They allow you to:

- Group related APIs and resources

- Separate concerns between different applications

- Manage permissions at a high level

- Create logical boundaries between different parts of your system

Each project can contain multiple collections, structures, and routes.

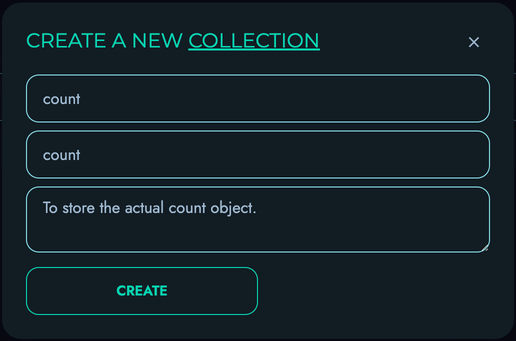

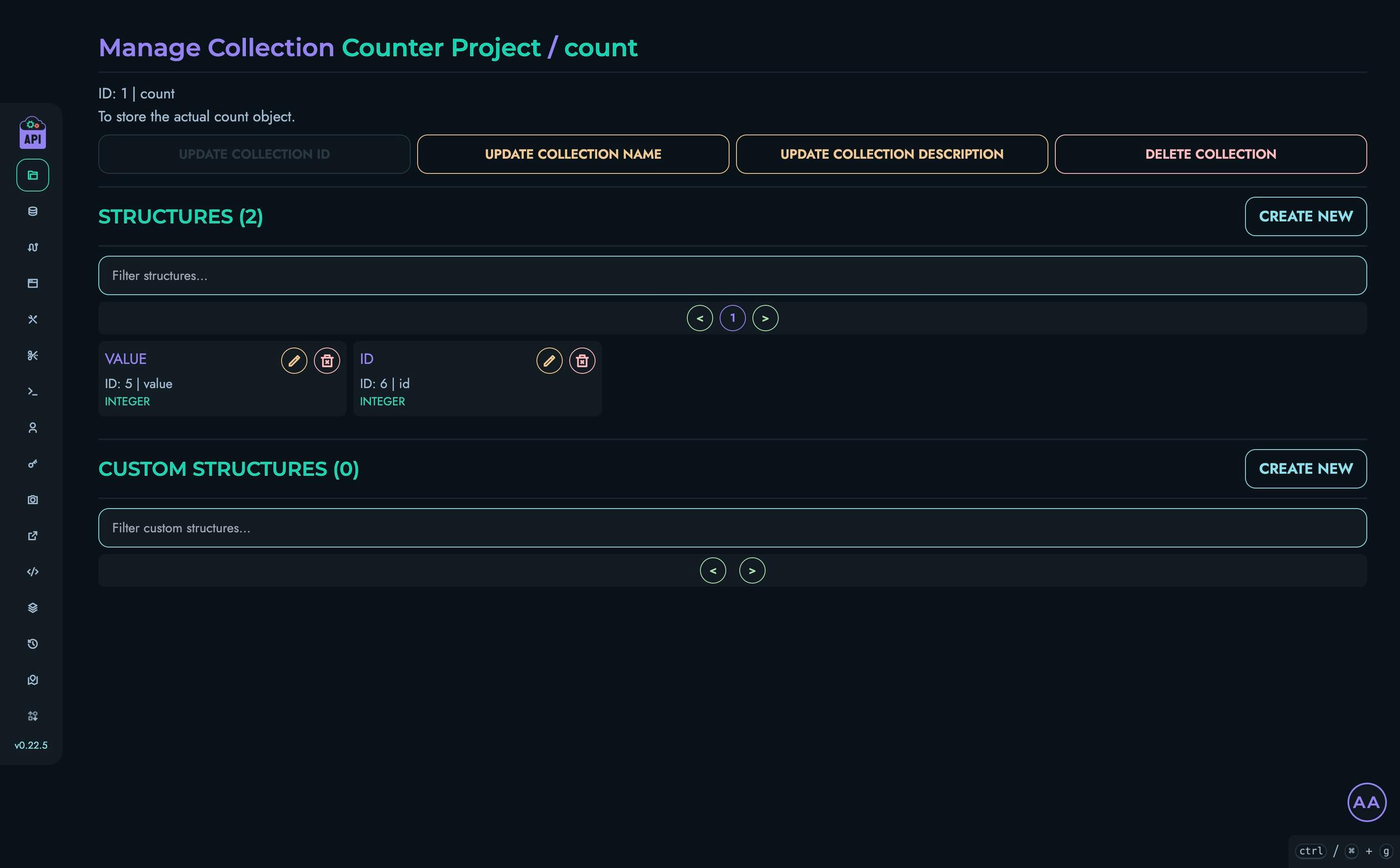

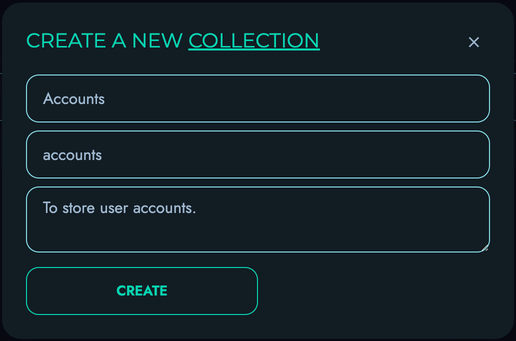

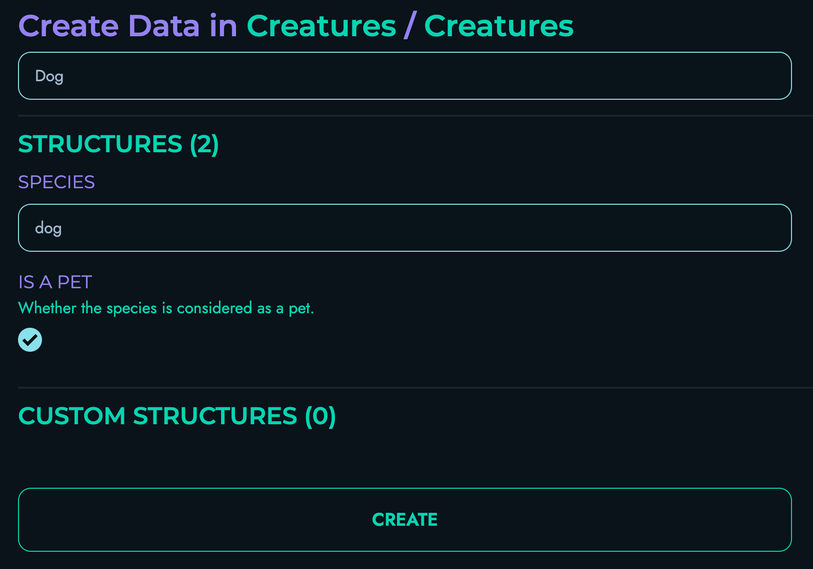

Collections

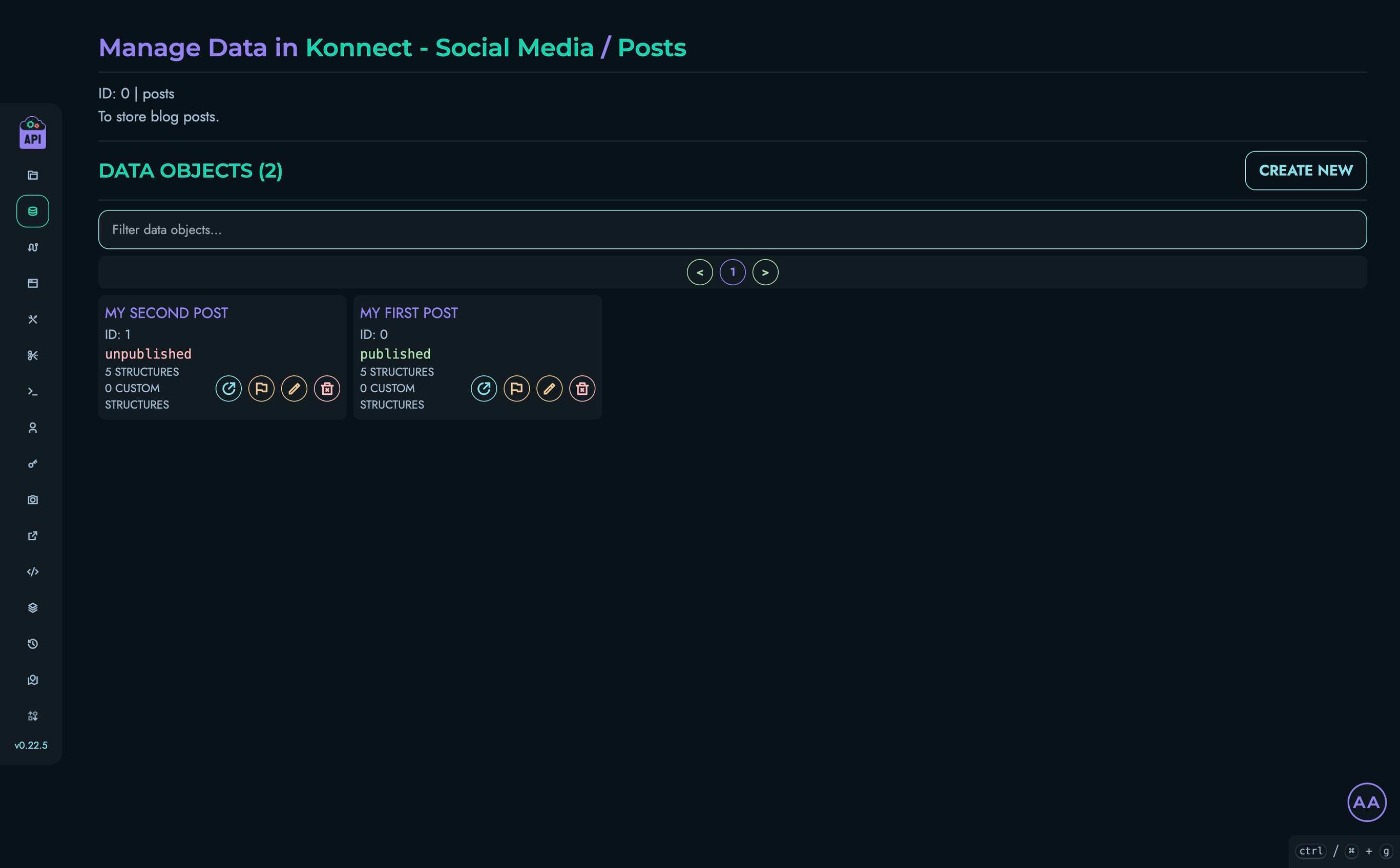

Collections are containers for data records of a specific type. They:

- Organize your data into logical groups

- Provide CRUD operations for data manipulation

- Apply structure definitions to ensure data consistency

- Enable efficient data retrieval and manipulation

Collections are the primary way you’ll interact with data in Kinesis API.

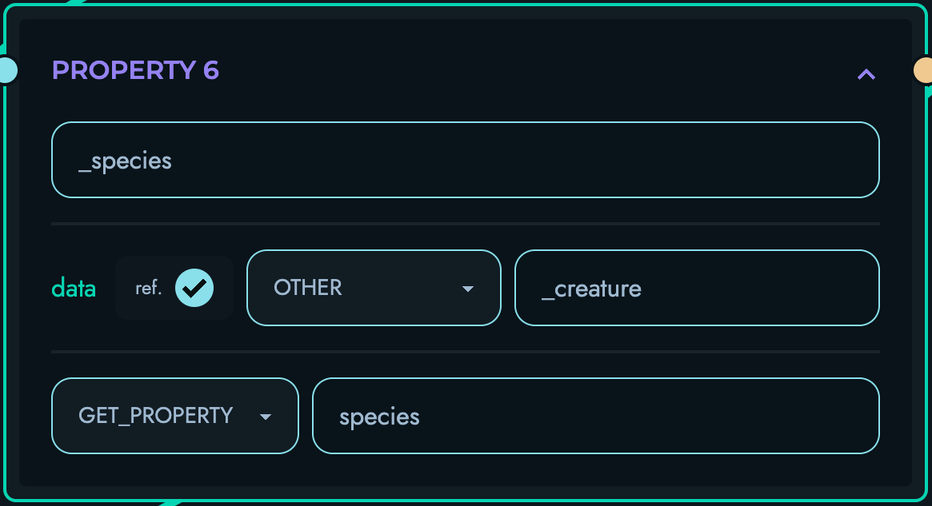

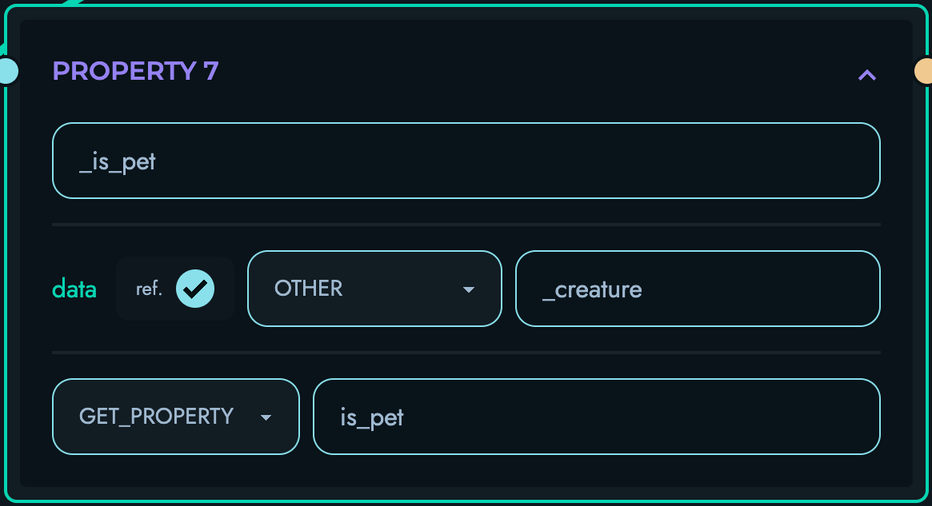

Structures

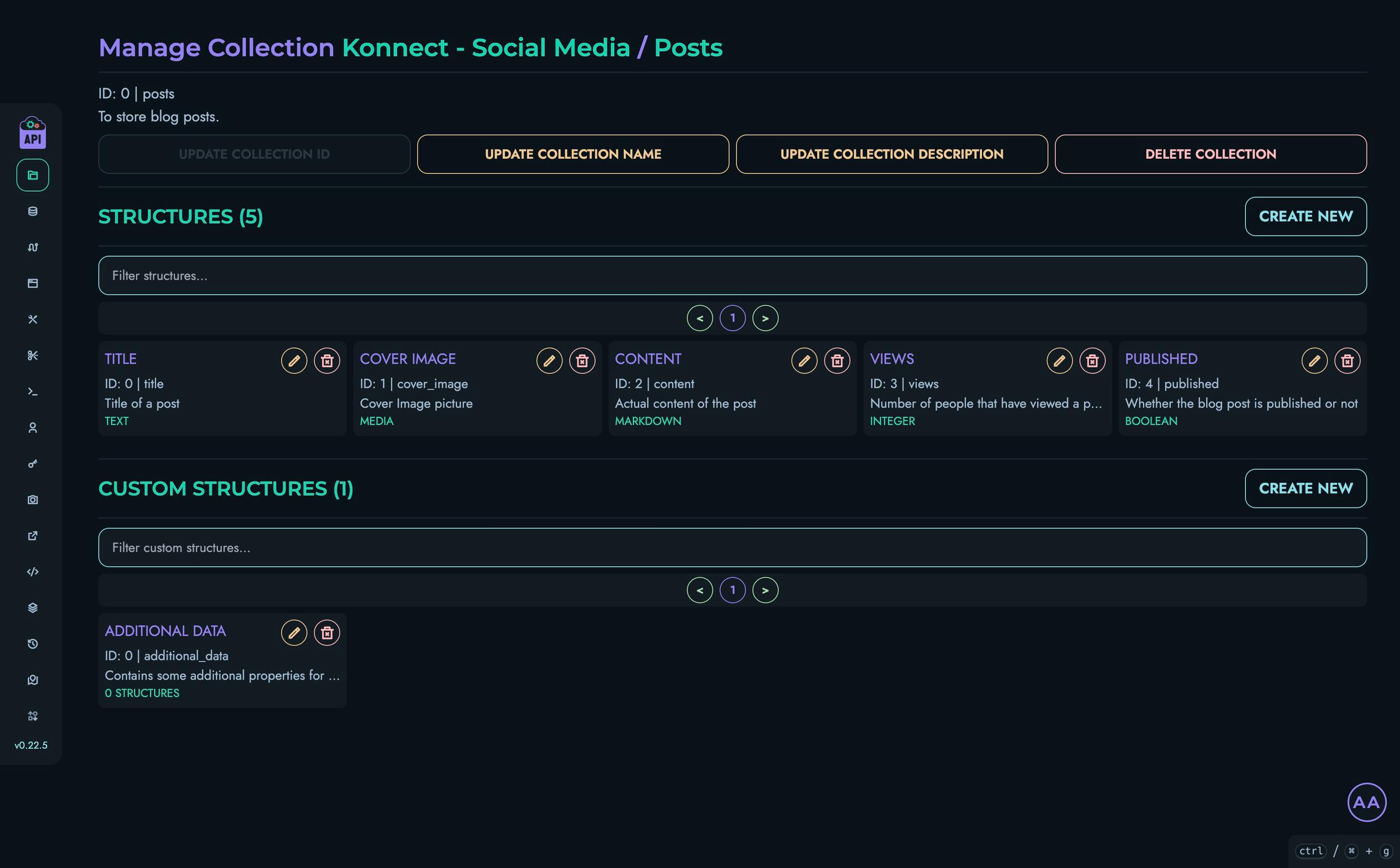

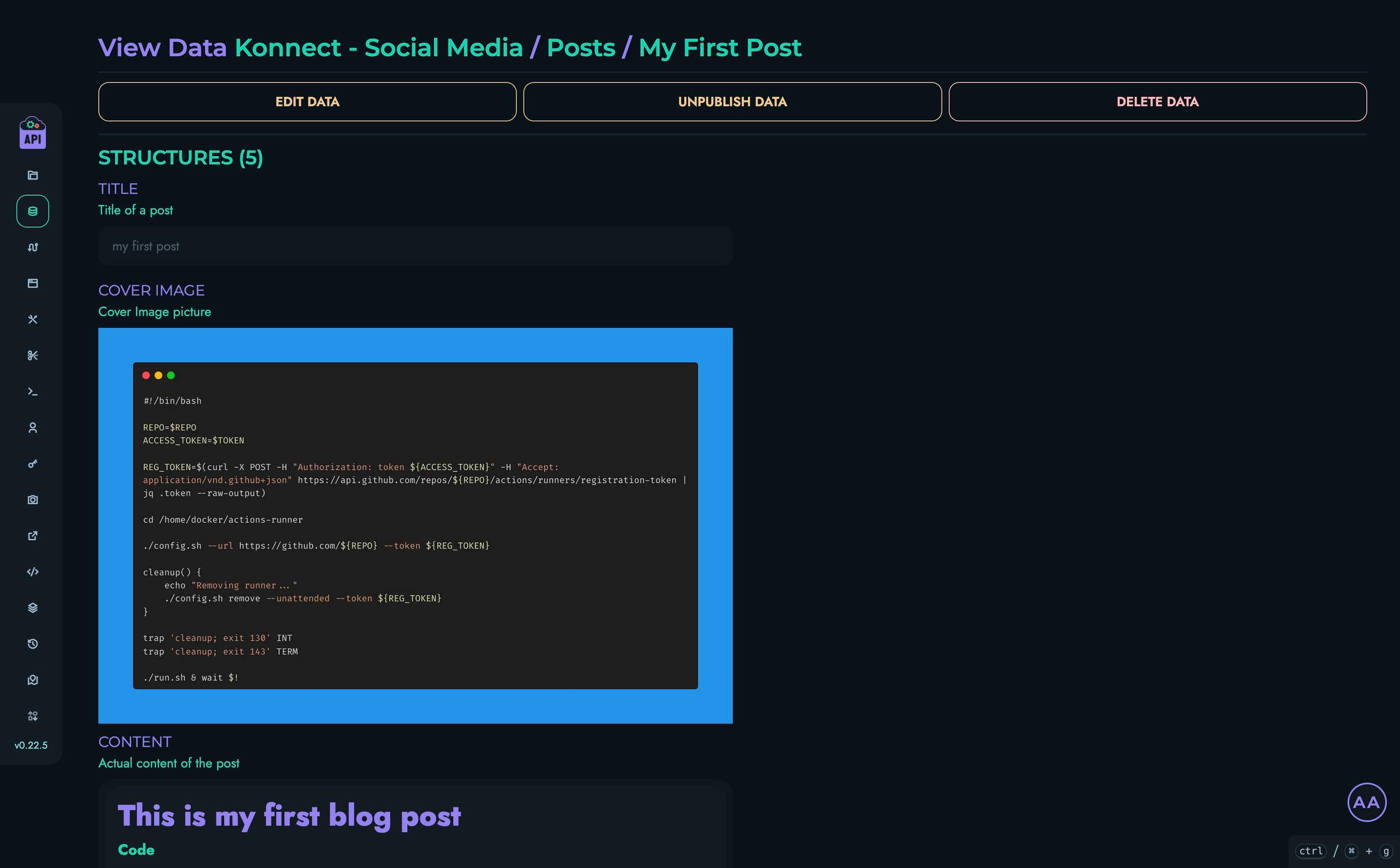

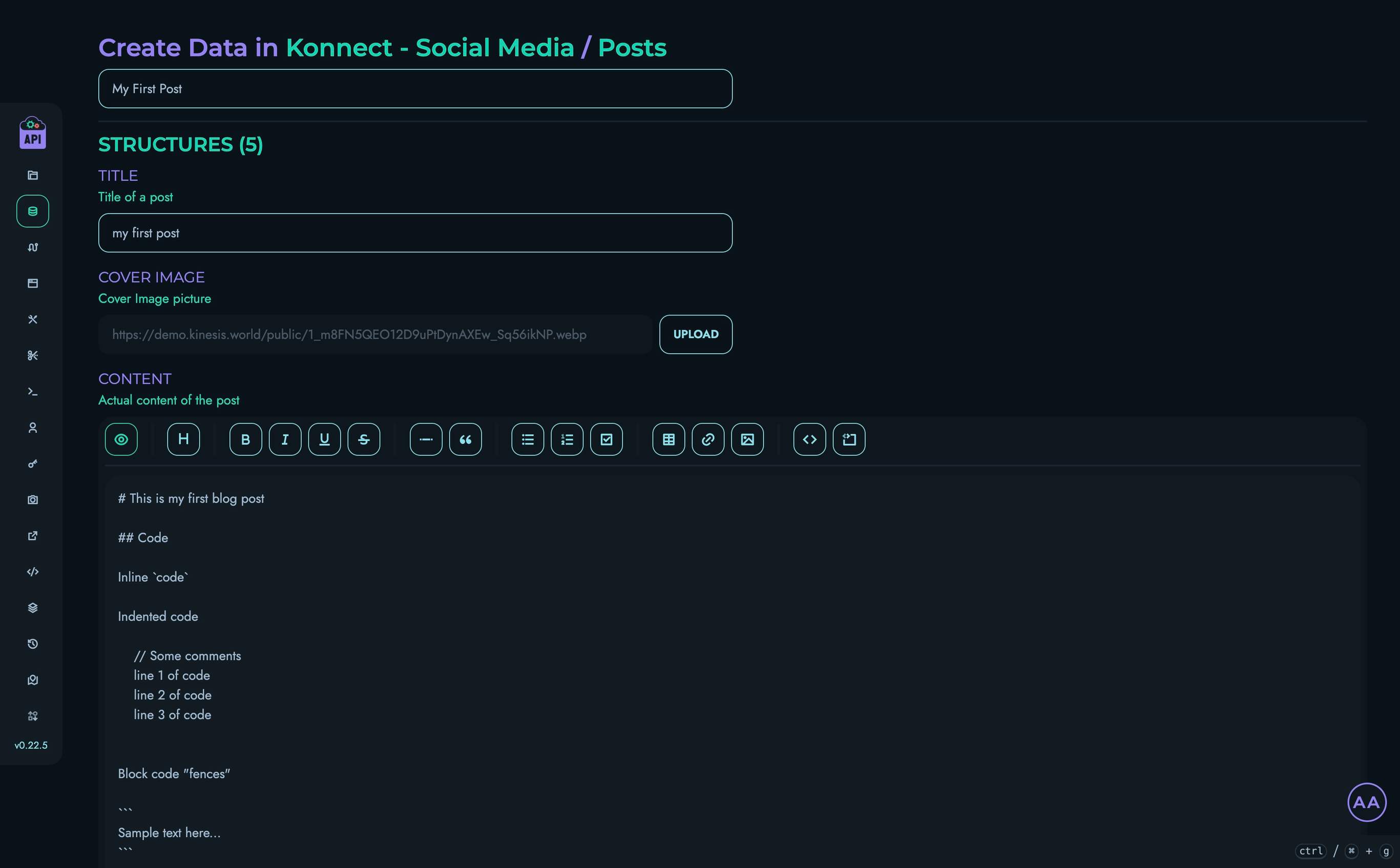

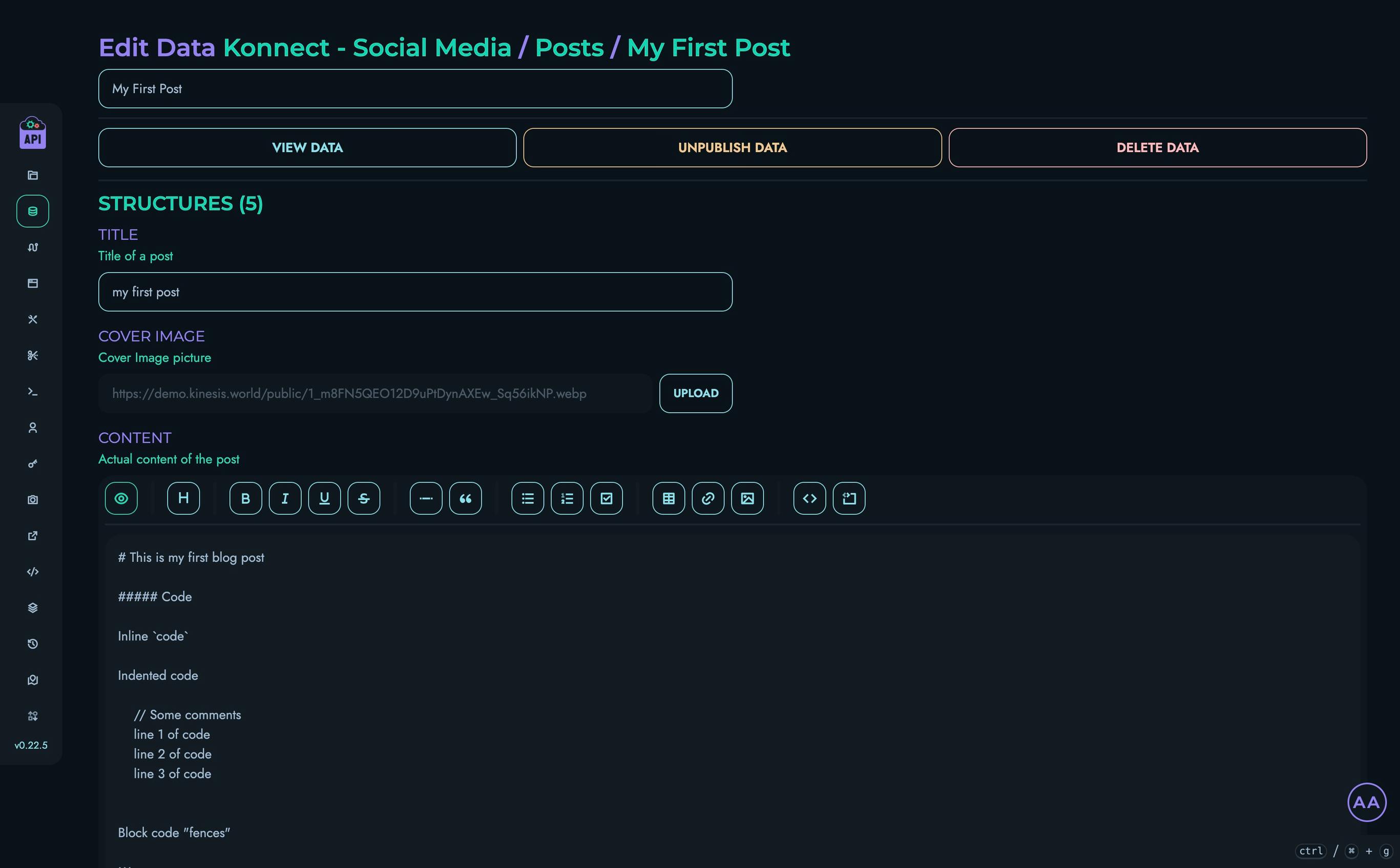

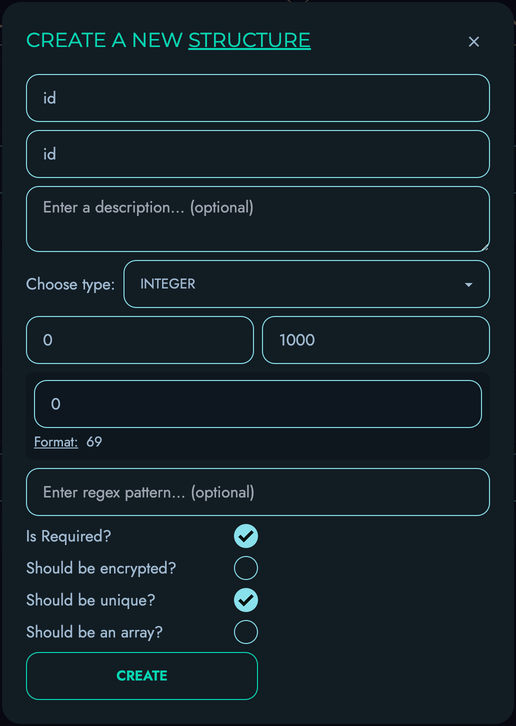

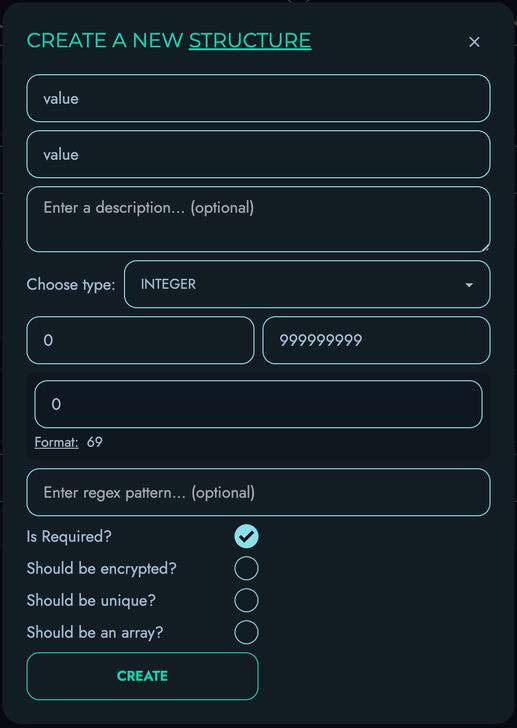

Structures define the schema for your data. They:

- Specify fields and their data types

- Apply validation rules through constraints

- Support nested and complex data models

- Allow for custom structures with specialized behavior

Structures ensure your data follows a consistent format and meets your application’s requirements.

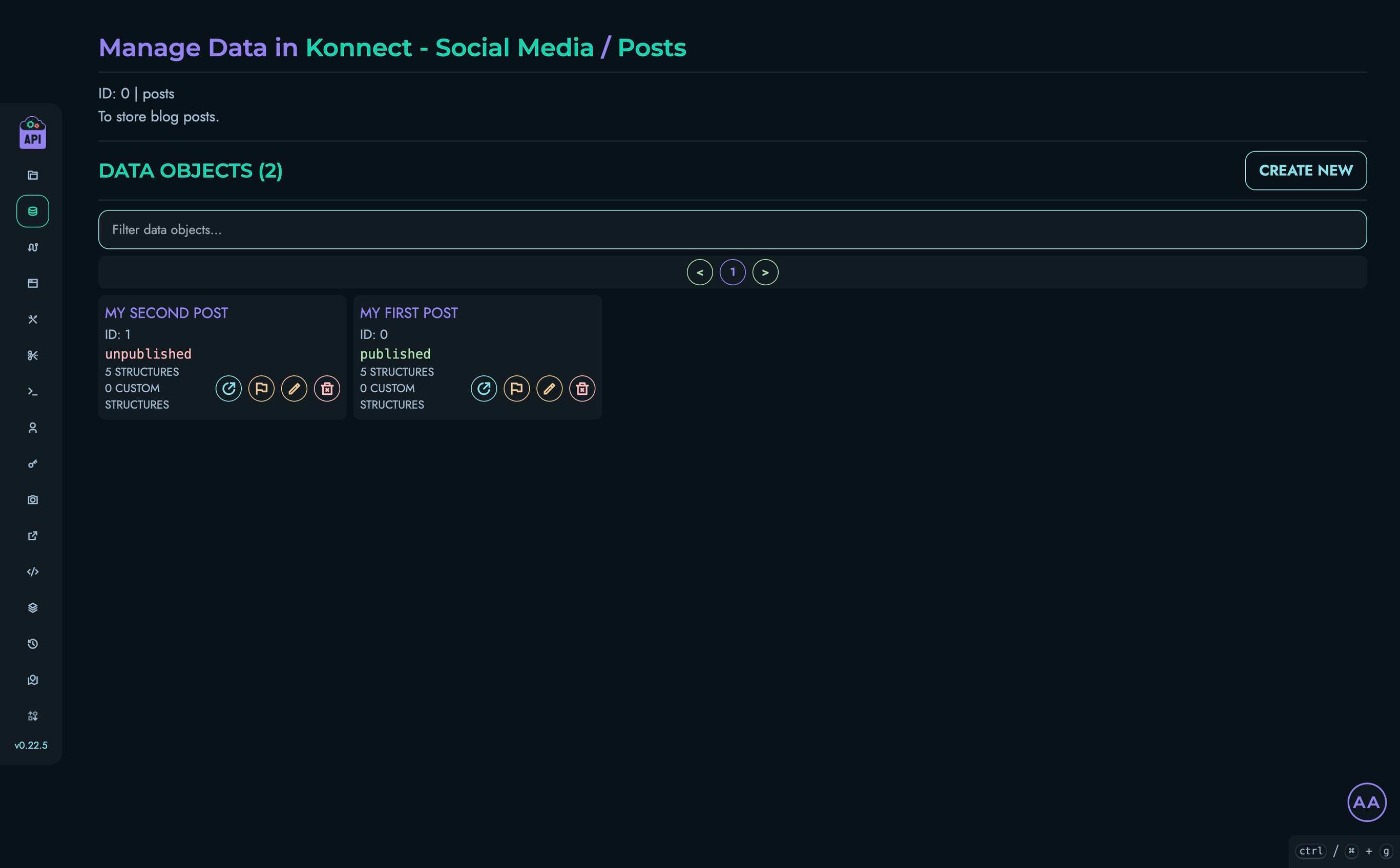

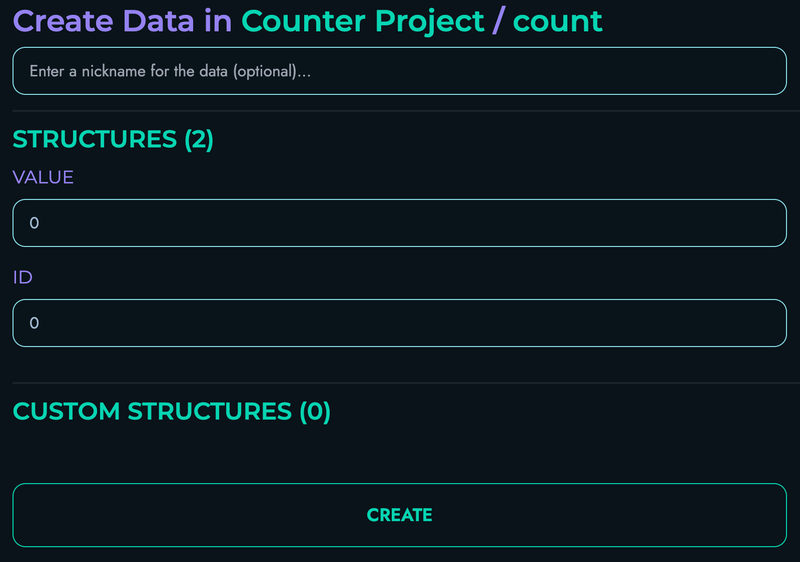

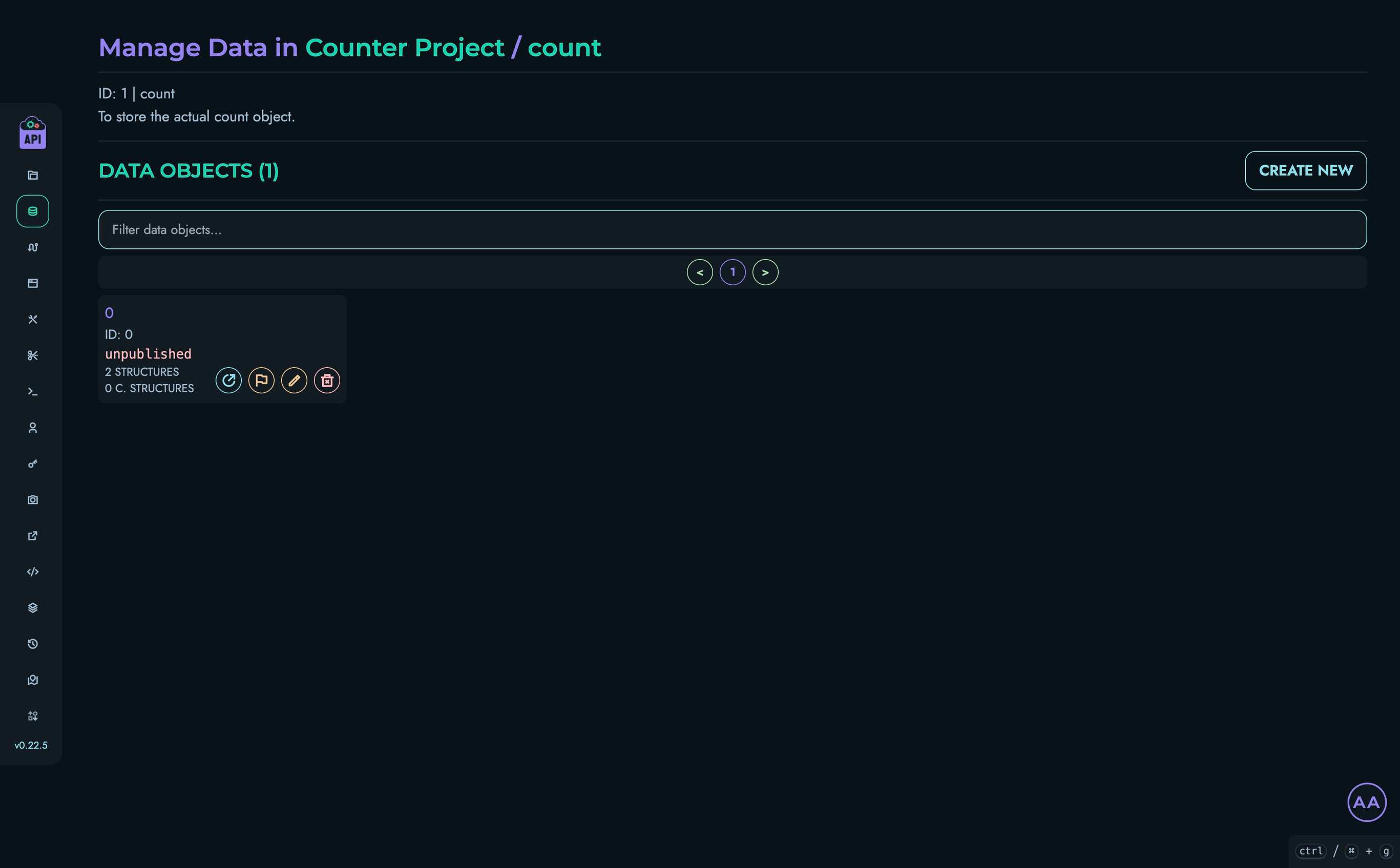

Data

The data component provides interfaces for working with your stored information:

- Creating, reading, updating, and deleting records

- Querying and filtering data

- Importing and exporting datasets

- Managing relationships between records

Efficient data management is essential for building performant APIs.

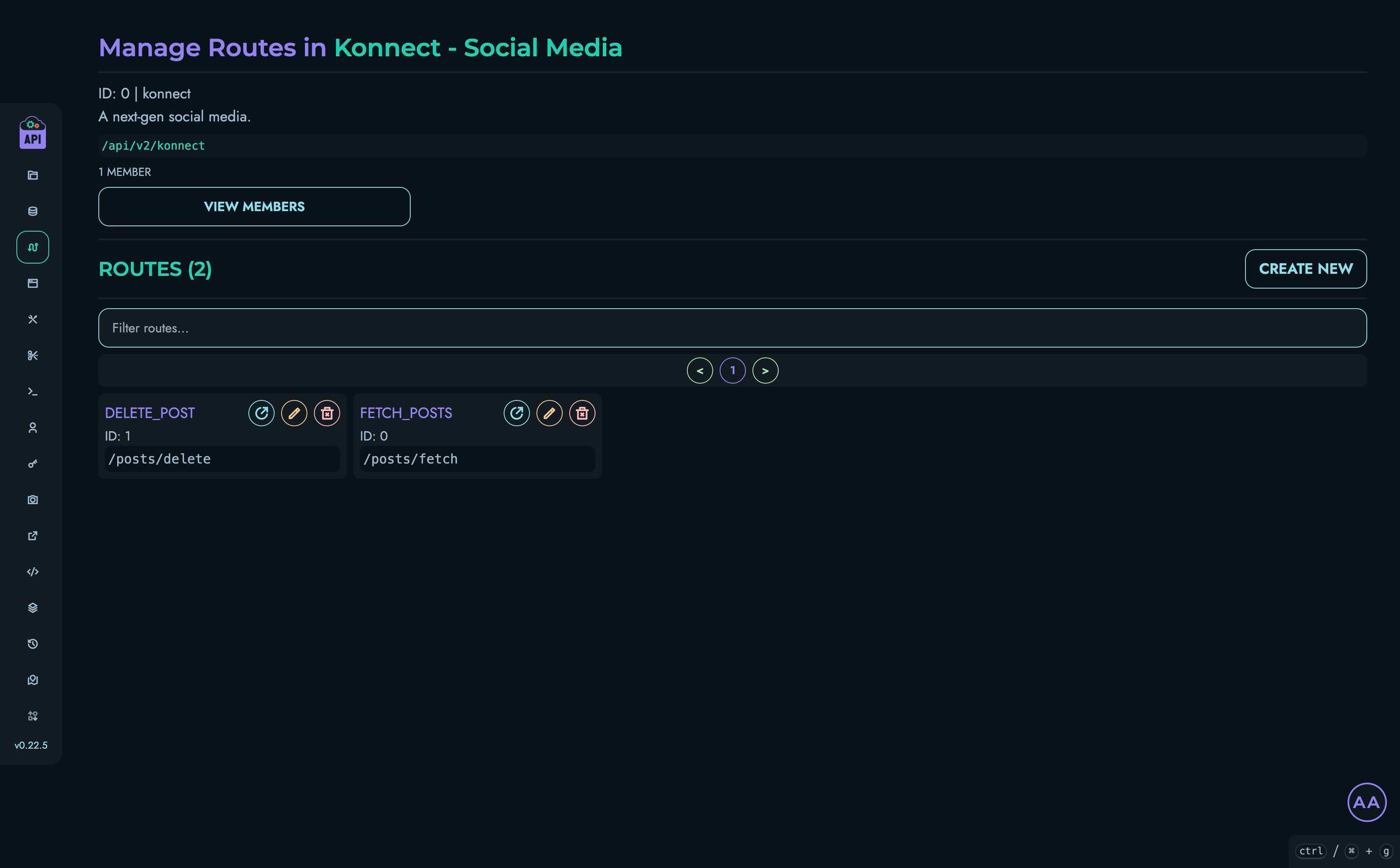

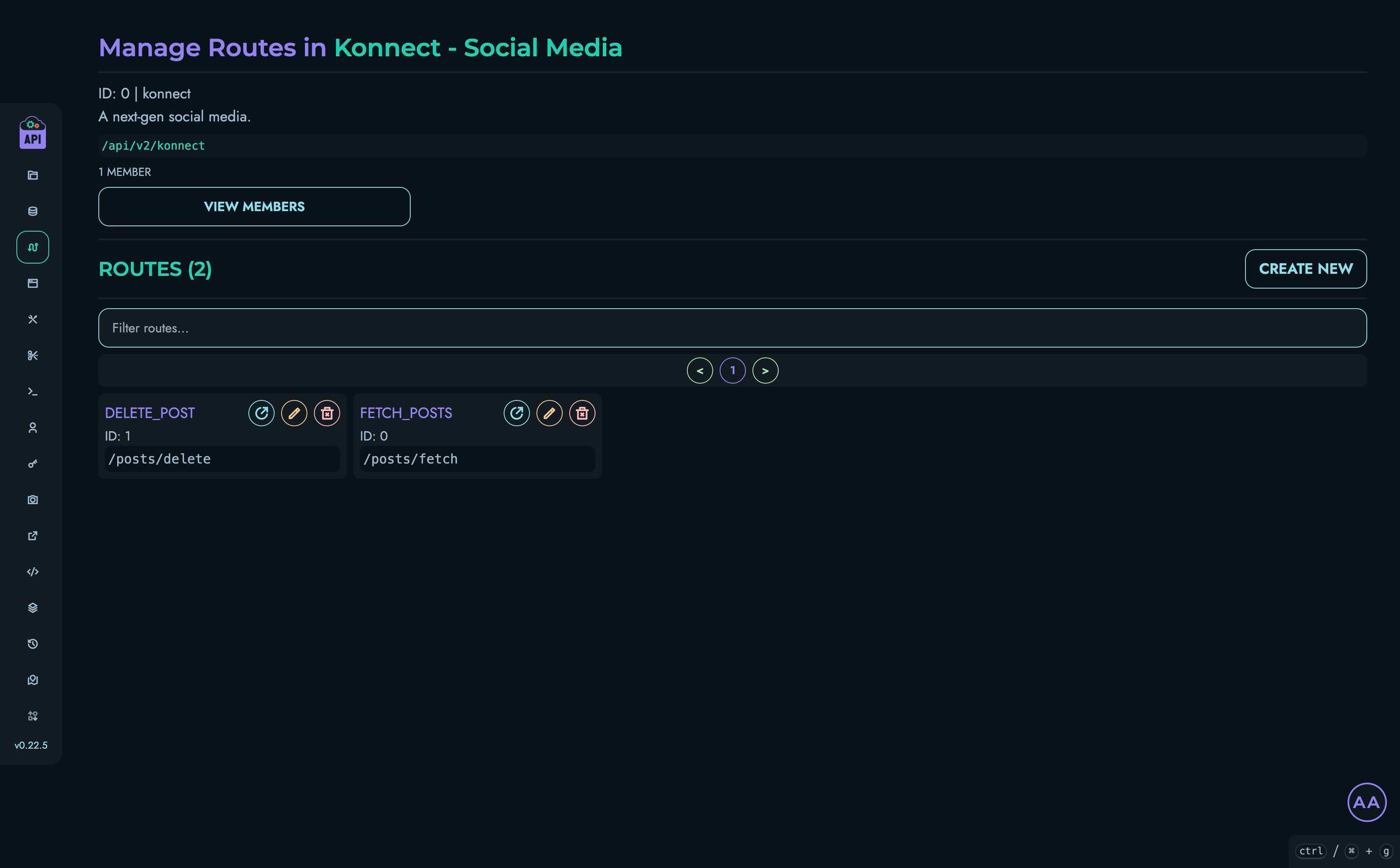

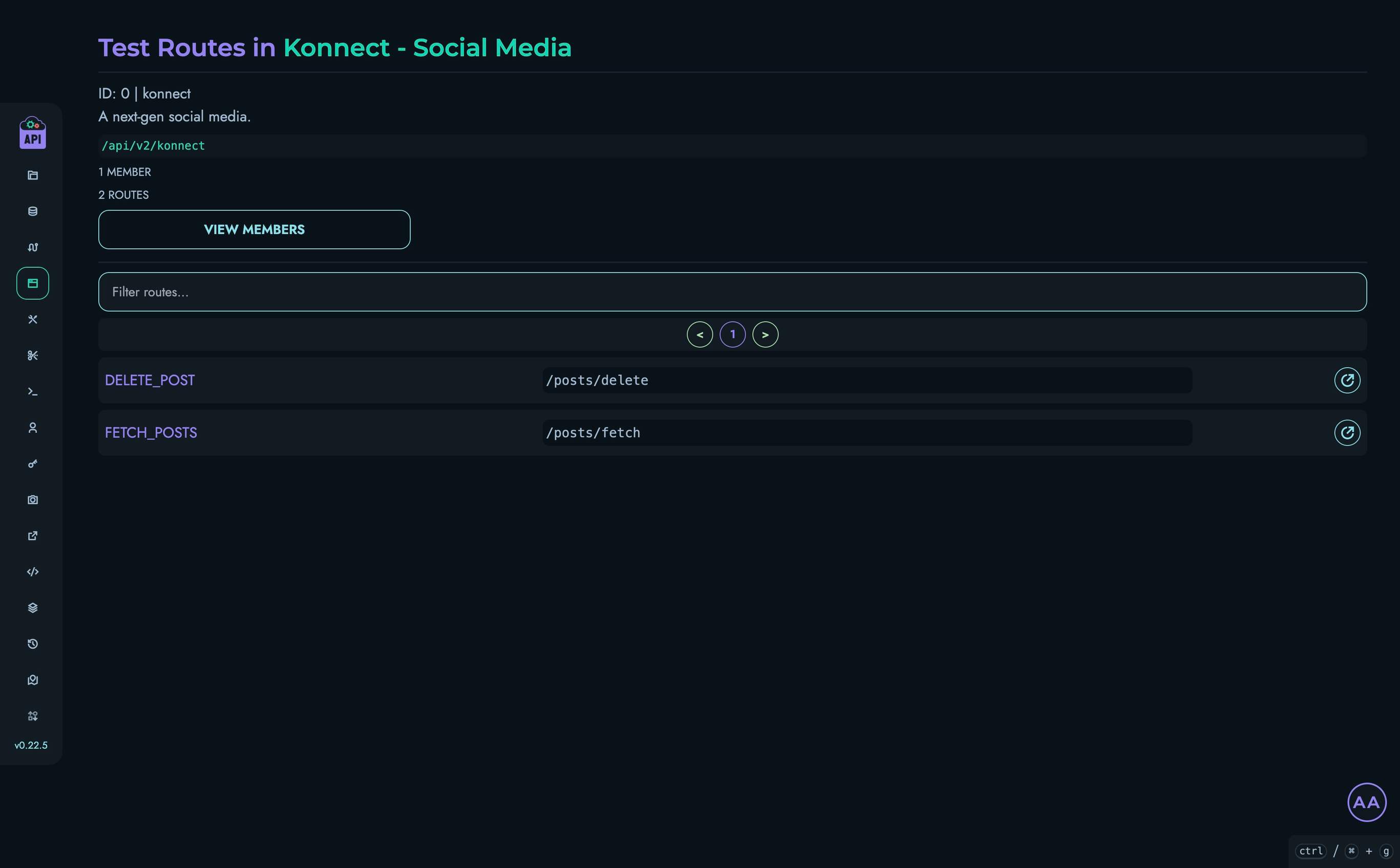

Routes

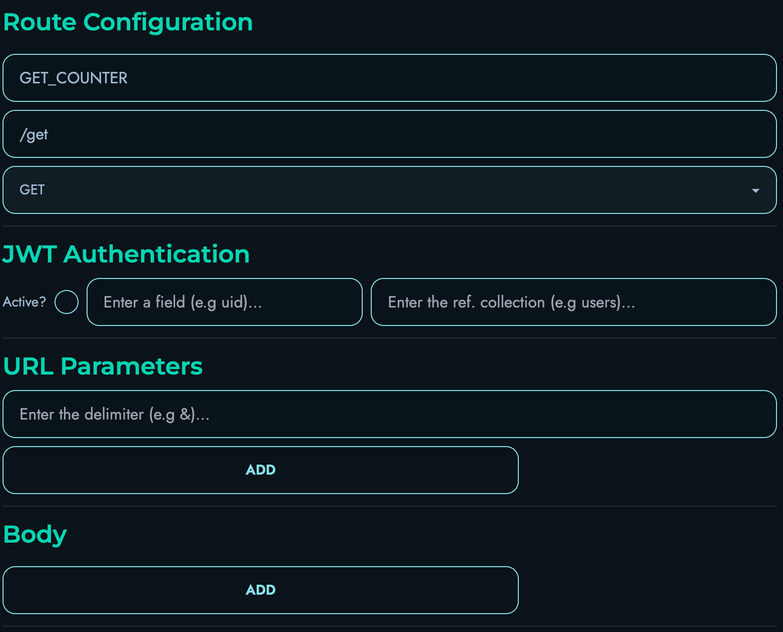

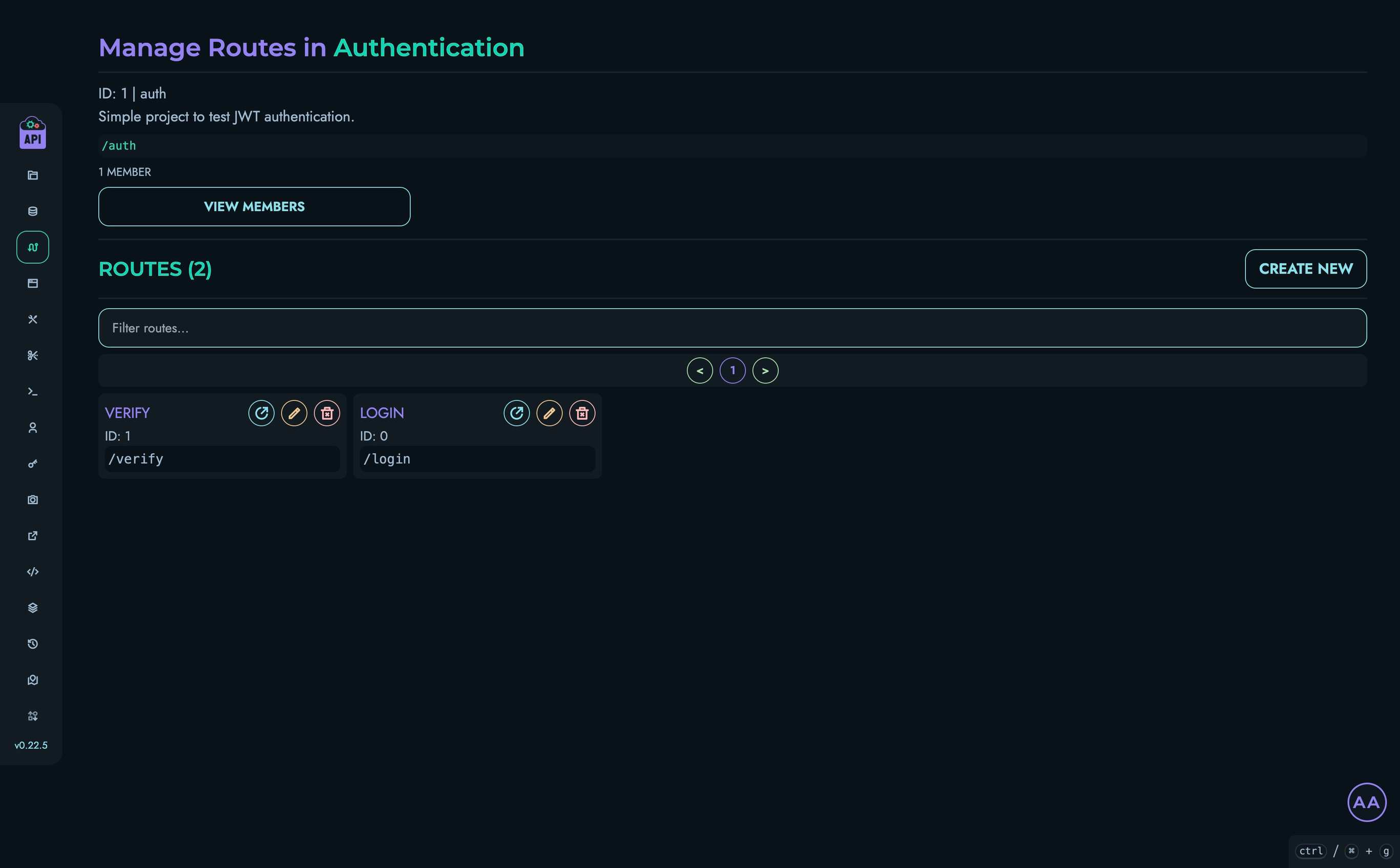

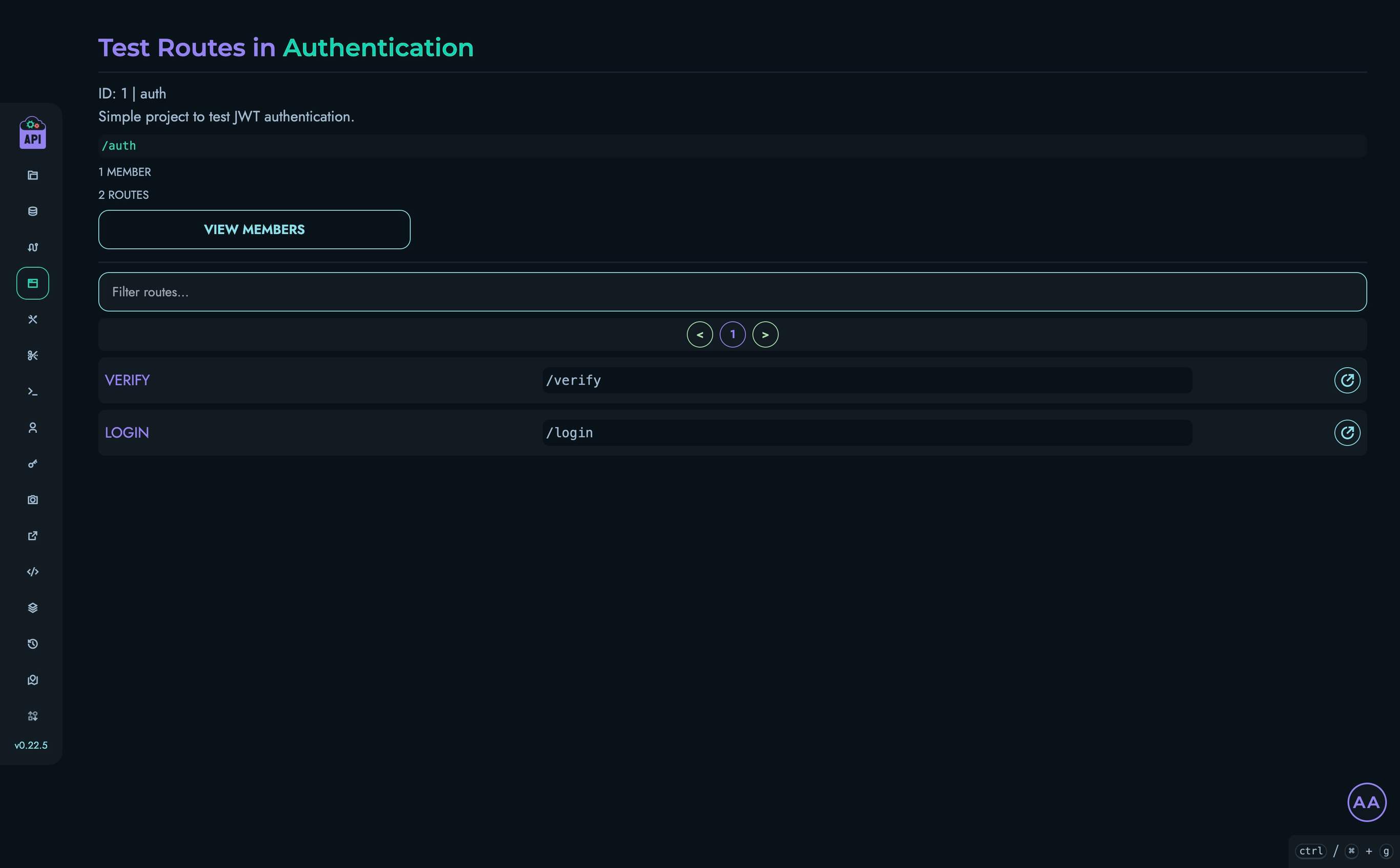

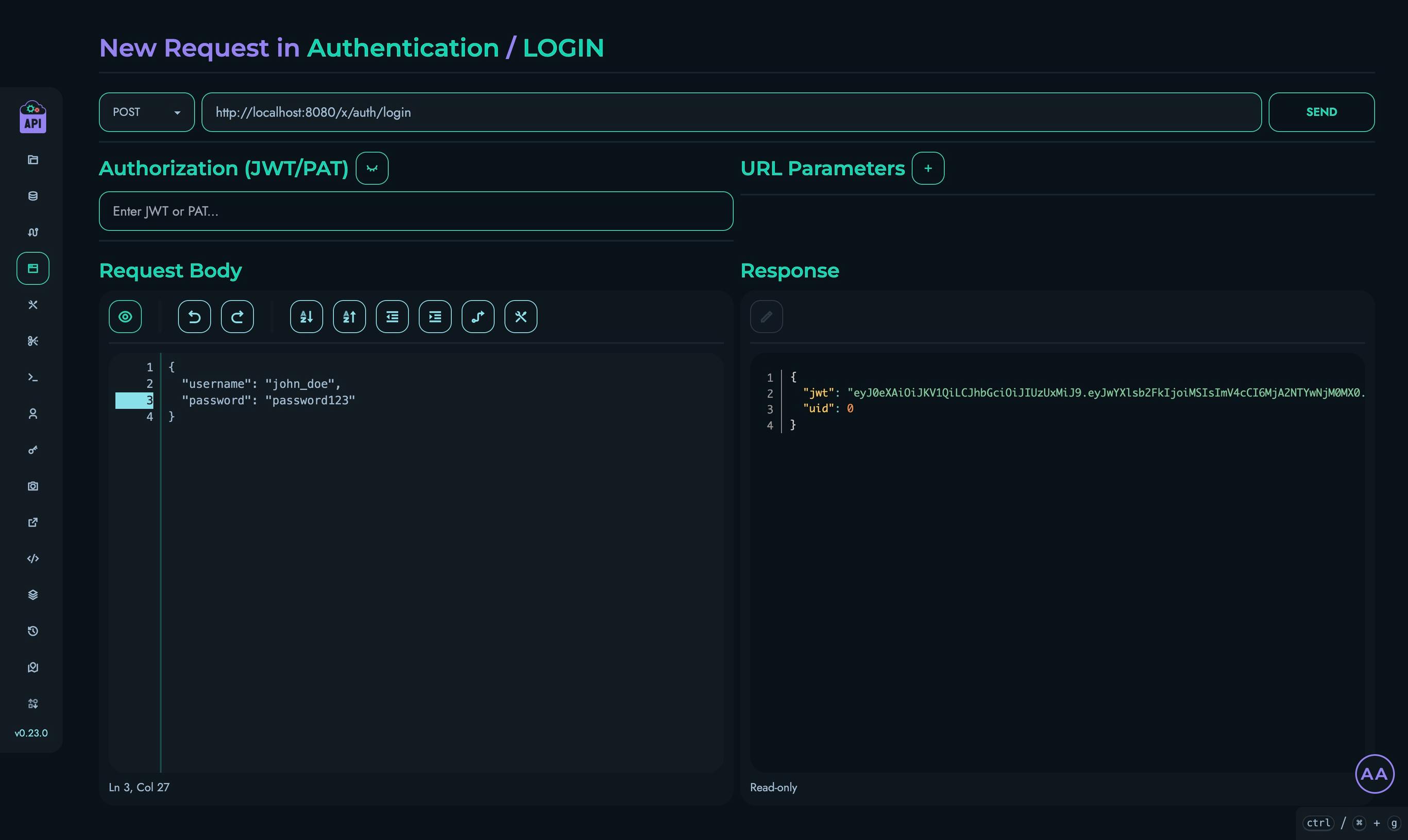

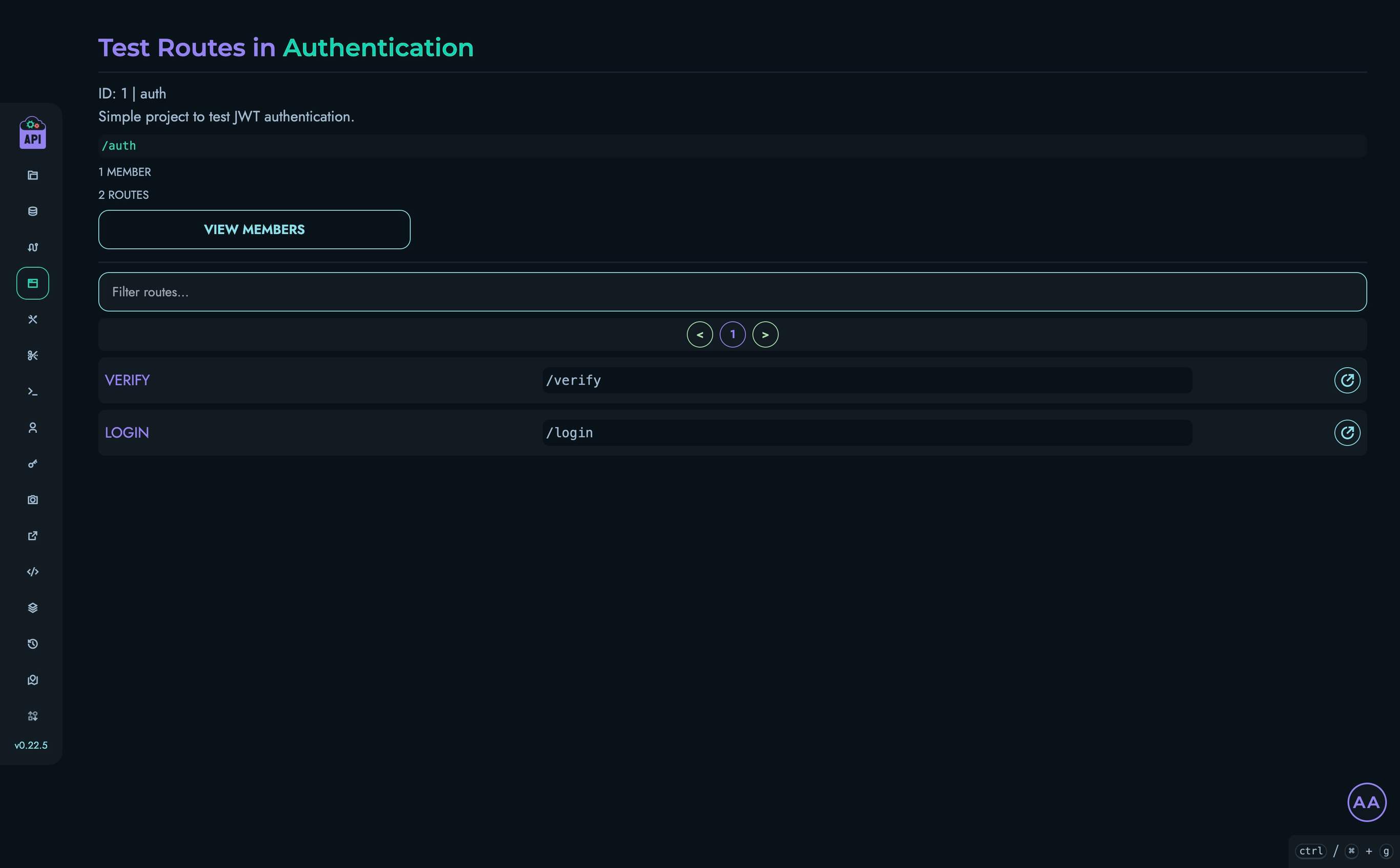

Routes are the endpoints that expose your API functionality:

- Created through the visual X Engine or programmatically

- Define how requests are processed and responses are formed

- Support various HTTP methods (GET, POST, PUT, DELETE, etc.)

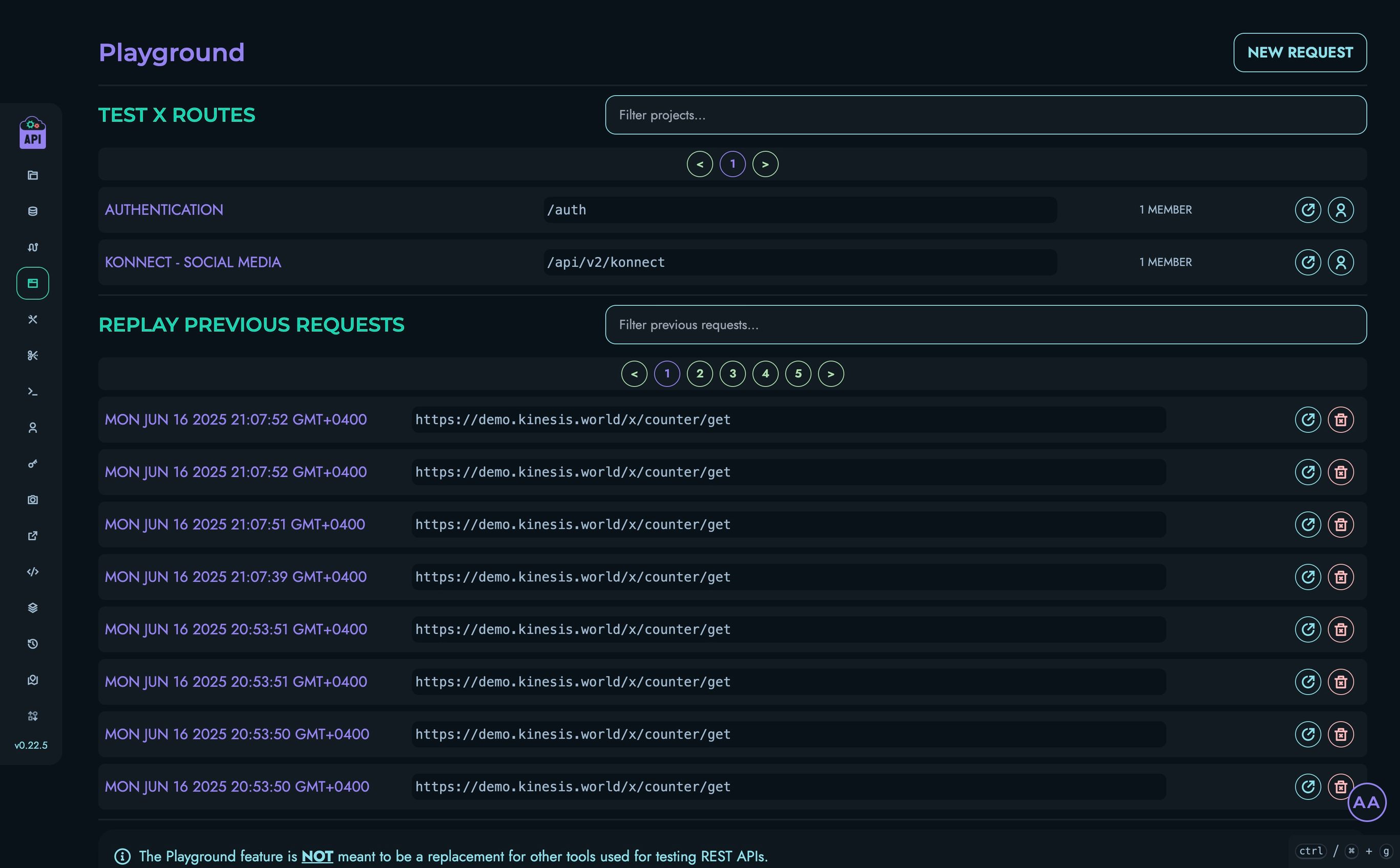

- Include the Playground for testing API behavior

Routes are the primary way external applications interact with your Kinesis API.